With so much content flooding your audience’s feed, creating ads that stand out is harder than ever. So, how do you know if your ads are truly connecting?

Testing.

Ad creative testing is your secret weapon to uncover what works: the headline, the image, or the call to action. It’s about experimenting, learning fast, and doubling down on what drives results. This article shares 8 actionable tips to help you test smarter and boost engagement, so your campaigns deliver the impact you need.

Why Ad Creative Testing Matters

Ad creative testing improves your bottom line in ways that go far beyond prettier ads. When done right, it delivers measurable results across key metrics:

- Improved ROAS and ROI: Testing visuals, headlines, and messages reveals what resonates with your audience, helping you maximize ad spend. For example, Coca-Cola boosted ROI by experimenting with creatives.

- Reduced ad fatigue: Regular testing helps identify when content needs refreshing, preventing performance dips and wasted spend.

- Data-driven insights: Learn why certain creatives work with specific audiences, shaping your broader marketing strategy based on actual behavior.

- Better budget allocation: Shift spend to high-performing ads by identifying which creative elements drive results, from headlines to CTAs.

- Competitive advantage: Stay ahead in crowded markets by continuously refining your campaigns, ensuring they remain fresh and responsive to changes.

Tip 1: Formulate a Clear Hypothesis

A hypothesis is the foundation of effective ad testing. It’s a specific, testable prediction about how a change will impact performance. Rather than random experimentation, a clear hypothesis gives structure and measurable goals to your tests.

A strong hypothesis connects testing to business objectives and helps you understand why something worked, not just what happened. This turns testing from trial and error into a strategic, data-driven process.

Examples of strong hypotheses:

- “Customer testimonials will increase conversion rates by 10%.”

- “Video ads will drive more engagement than static images.”

- “Emotional messaging will outperform feature-focused copy.”

- “Upfront pricing information will reduce bounce rates by 15%.”

Each hypothesis specifies the change and expected outcome, making success measurable.

Use the SMART framework (Specific, Measurable, Achievable, Relevant, Time-bound) to ensure clarity. For example, instead of "changing button color will improve performance," say, "changing the button color from blue to red will increase CTR by 5% within two weeks."

SAN's case study showed how AI-powered testing identified winning elements across 130+ dimensions, revealing that successful ads featured strong emotional storytelling and integrated branding.

Documenting hypotheses and results builds a knowledge base, helping you avoid past mistakes and optimize for what works.

Tip 2: Test One Variable at a Time

The scientific method offers the clearest path to useful insights in ad creative testing. You establish direct connections between specific changes and performance improvements when you isolate variables.

Testing multiple variables at once creates confusion about what actually drove results.

Focus on testing these isolated variables one at a time:

- Headlines and copy elements - Different messaging approaches, value propositions, or tones

- Visuals - Images versus videos, or different visual styles

- CTA buttons - Variations in text phrasing, color, or placement

- Ad formats - Carousel ads against collection ads or other format options

It also helps to understand the two main testing methodologies. A/B testing changes just one element while keeping everything else identical, giving you clear insights about that specific variable. Multivariate testing examines how different combinations work together but requires more complex analysis. If you're new to ad creative testing, start with simple A/B tests.

Tip 3: Utilize A/B and Multivariate Testing Methods

A/B testing is the most straightforward approach: it splits your audience between two ad variations that differ in just one way, pinpointing exactly what drives better performance.

Here's how to do it: create two versions of your ad, show each to similar audience segments, and measure which performs better. This works exceptionally well for testing:

- Headlines and copy variations

- Different images or videos

- CTA button text or placement

- Color schemes or layouts

Underwear brand Underoutfit proved A/B testing's power when comparing user-generated content against standard branded Facebook ads. The results? 47% higher click-through rates, 31% lower cost per sale, and 38% higher return on ad spend.

Need to test multiple elements at once? Multivariate testing examines how different combinations of variables work together, which suits complex scenarios like landing pages where many elements interact.

Keep in mind that multivariate tests need larger sample sizes and often bigger budgets since you're testing numerous combinations simultaneously.
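
To see why the traffic requirement grows so quickly, it helps to count the combinations. The sketch below is a rough illustration only: the element names and options are hypothetical, and the 4,000 impressions per variant is an assumed floor, not a rule.

```python
from itertools import product

# Hypothetical creative elements and the options under test for each.
elements = {
    "headline": ["benefit-led", "question", "testimonial"],
    "visual": ["static image", "video"],
    "cta": ["Shop now", "Learn more"],
}

# In a full multivariate test, every combination is its own ad variant.
variants = list(product(*elements.values()))

# Assumed per-variant floor; tune it to your own traffic and conversion rates.
impressions_per_variant = 4_000

print(len(variants))                            # 12
print(len(variants) * impressions_per_variant)  # 48000
```

Adding just one more option to any element multiplies the total, which is why an A/B test with two variants is so much cheaper to run.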

For advanced ad creative testing, consider:

- Conversion lift testing: Compares test and control groups to measure your creative changes' true impact

- Bayesian testing: Uses statistical models to reach conclusions faster, often with smaller sample sizes
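
As a rough illustration of the Bayesian idea, the sketch below estimates the probability that variant B has a higher click-through rate than variant A. It assumes a simple Beta-Binomial model with uniform Beta(1, 1) priors and uses Monte Carlo sampling; the function name and the click counts in the last line are hypothetical, and ad platforms that offer Bayesian testing may use different models.

```python
import random

def prob_b_beats_a(clicks_a, impressions_a, clicks_b, impressions_b,
                   draws=20_000, seed=0):
    """Estimate P(CTR_B > CTR_A) under Beta(1, 1) priors via Monte Carlo."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Each variant's posterior is Beta(1 + clicks, 1 + non-clicks).
        rate_a = rng.betavariate(1 + clicks_a, 1 + impressions_a - clicks_a)
        rate_b = rng.betavariate(1 + clicks_b, 1 + impressions_b - clicks_b)
        wins += rate_b > rate_a
    return wins / draws

# Hypothetical counts: A got 40 clicks and B got 80, each from 4,000 impressions.
print(prob_b_beats_a(40, 4_000, 80, 4_000))
```

A result close to 1 suggests B is very likely the better creative, while a value near 0.5 means the data cannot yet separate the two.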

Choose your testing method based on campaign goals, audience size, budget, and timeline. Start with simple A/B tests for quick wins, then advance to more complex methods as your testing confidence grows.

Tip 4: Allocate an Adequate Budget for Ad Creative Testing

Many marketers underestimate the resources needed for testing, leading to inconclusive results that waste time and money.

Allocate 20–30% of your total ad spend for creative testing. While it may seem high, this investment typically results in significantly better campaign performance.

Consider budget floors, the minimum thresholds needed to generate reliable results. Proper bid management ensures your tests gather enough data for meaningful conclusions, avoiding inaccurate results.

Without a sufficient budget, you risk discarding effective creatives due to a lack of exposure. A smart budget approach includes:

- Small budgets for initial testing

- Increased spend on promising concepts

- Larger investments for proven winners

This structure balances exploration (testing new ideas) and exploitation (scaling successful elements), maximizing returns from what works.
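
One way to put numbers on this structure is a small planning helper. This is a sketch under stated assumptions: the 25% testing share sits inside the 20–30% range above, while the 40/60 split between initial tests and promising concepts is a made-up default you should adjust.

```python
def plan_budget(total_spend, testing_share=0.25, initial_share_of_testing=0.4):
    """Split ad spend into initial tests, promising concepts, and proven winners."""
    testing_pool = total_spend * testing_share
    initial = round(testing_pool * initial_share_of_testing, 2)
    promising = round(testing_pool - initial, 2)
    # Everything outside the testing pool goes to scaling proven winners.
    winners = round(total_spend - testing_pool, 2)
    return {
        "initial_tests": initial,
        "promising_concepts": promising,
        "proven_winners": winners,
    }

print(plan_budget(10_000))
```

With a 10,000 budget this reserves 2,500 for testing (1,000 for first-round tests, 1,500 for promising concepts) and leaves 7,500 to scale what already works.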

For example, Samsung tested 400+ ad variations and saw a 173% increase in ROAS, identifying the best-performing creatives for different audiences.

Your testing budget is an investment that often delivers far more in return.

Tip 5: Develop a Structured Ad Creative Testing Roadmap

A well-designed blueprint helps your ad creative testing efforts deliver actionable insights rather than scattered data points.

Build a Prioritization Framework

Start by developing a framework to decide which tests deserve priority:

- Impact potential: How much could this test improve performance?

- Resource requirements: What's the cost in time, budget, and creative resources?

- Implementation difficulty: How hard will it be to set up and analyze?

This approach focuses you on high-impact, low-effort tests first, maximizing return on your testing investment.
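
If you want to make the ranking explicit, a simple score can do it. The sketch below is one possible scoring scheme, not a standard: the 1 to 5 scales, the formula, and the example ideas are all assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class ExperimentIdea:
    name: str
    impact: int      # 1 (low) to 5 (high) expected performance lift
    resources: int   # 1 (cheap) to 5 (expensive)
    difficulty: int  # 1 (easy) to 5 (hard)

    @property
    def score(self) -> float:
        # Higher impact raises the score; cost and difficulty lower it.
        return self.impact / (self.resources + self.difficulty)

# Hypothetical backlog of test ideas.
backlog = [
    ExperimentIdea("New headline angle", impact=4, resources=1, difficulty=1),
    ExperimentIdea("Video vs. static image", impact=5, resources=4, difficulty=3),
    ExperimentIdea("CTA button color", impact=2, resources=1, difficulty=1),
]

ranked = sorted(backlog, key=lambda idea: idea.score, reverse=True)
for idea in ranked:
    print(f"{idea.name}: {idea.score:.2f}")
```

Here the headline test ranks first: it promises a solid lift for very little effort, while the video test scores lowest despite having the highest potential impact.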

Create a Strategic Testing Sequence

Follow a logical progression rather than testing random elements:

- Core messaging and value propositions - Test different ways to communicate your fundamental value

- Visual elements - Test variations in images, videos, colors, and layouts

- CTAs and conversion elements - Experiment with button text, placement, and design

- Ad formats and placements - Test performance across different formats and placements

Document Everything

Keep a comprehensive testing log including:

- Test hypotheses

- Variables tested

- Start and end dates

- Results and key metrics

- Insights gained

- Recommendations for future tests

This documentation builds knowledge over time, preventing repeated tests and revealing patterns across experiments.
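
A spreadsheet works fine for this log, but if your team prefers code, the fields above map naturally onto a small record type. The class name, field names, and dates below are hypothetical; the hypothesis text reuses the button-color example from Tip 1.

```python
from dataclasses import dataclass, field, asdict
from datetime import date

@dataclass
class ExperimentRecord:
    hypothesis: str
    variable: str
    start: date
    end: date
    results: dict = field(default_factory=dict)  # key metrics, filled in after the test
    insights: str = ""
    next_steps: str = ""

    @property
    def duration_days(self) -> int:
        return (self.end - self.start).days

record = ExperimentRecord(
    hypothesis="Changing the button color from blue to red will "
               "increase CTR by 5% within two weeks",
    variable="CTA button color",
    start=date(2024, 3, 4),   # placeholder dates
    end=date(2024, 3, 18),
)

print(record.duration_days)  # 14
print(asdict(record))        # plain dict, easy to export to CSV or JSON
```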

Develop Decision Trees

Create predefined paths for next steps based on results:

- If variation A outperforms by >15%, implement immediately and test refinements

- If results show <5% difference, test more dramatic variations

- If both variations underperform the control, revisit your hypothesis
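
These rules are simple enough to write down as a function, which keeps everyone applying them the same way. The sketch below is one reading of the list above. Note that the list leaves the 5–15% band open, so the final branch is an assumption, and the function name and inputs are hypothetical.

```python
def next_step(control, variants):
    """Suggest a next step from a control metric and a dict of variant metrics."""
    if all(value < control for value in variants.values()):
        return "revisit the hypothesis"
    best_name, best_value = max(variants.items(), key=lambda item: item[1])
    lift = (best_value - control) / control  # relative lift over the control
    if lift > 0.15:
        return f"implement {best_name} and test refinements"
    if lift < 0.05:
        return "test more dramatic variations"
    # Assumption: lifts between 5% and 15% are not covered by the rules above.
    return "keep the test running to gather more data"

# Hypothetical CTRs: control at 2.0%, variant A at 2.4%, variant B at 2.1%.
print(next_step(0.020, {"A": 0.024, "B": 0.021}))
```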

A structured ad creative testing roadmap transforms random testing into a strategic process that consistently improves performance and builds on previous learnings.

Tip 6: Monitor Relevant Metrics

The metrics you focus on should match your campaign goals and your audience's position in the marketing funnel.

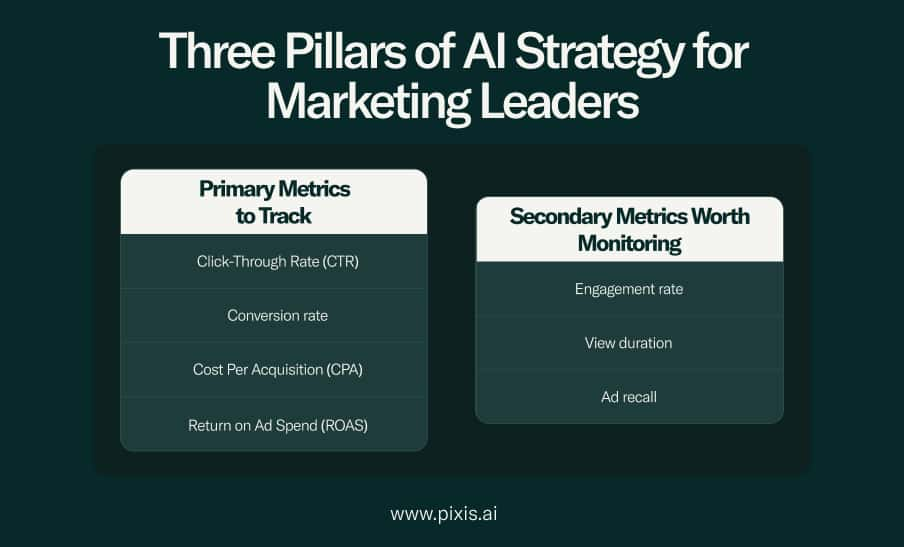

Primary Metrics to Track

- Click-Through Rate (CTR)

- Conversion rate

- Cost Per Acquisition (CPA)

- Return on Ad Spend (ROAS)
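
For reference, here is how these four metrics are commonly calculated. Definitions vary slightly between platforms (conversion rate, for instance, is sometimes measured per impression rather than per click), so treat this as a sketch. The function name and the example figures are hypothetical, and the code assumes none of the denominators are zero.

```python
def primary_metrics(impressions, clicks, conversions, spend, revenue):
    """Compute the four primary metrics from raw campaign totals."""
    return {
        "ctr": clicks / impressions,             # click-through rate
        "conversion_rate": conversions / clicks, # conversions per click
        "cpa": spend / conversions,              # cost per acquisition
        "roas": revenue / spend,                 # return on ad spend
    }

# Hypothetical totals for a single ad variant.
print(primary_metrics(impressions=10_000, clicks=200, conversions=10,
                      spend=500.0, revenue=1_500.0))
```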

Secondary Metrics Worth Monitoring

- Engagement rate

- View duration

- Ad recall

Choosing the Right Metrics

Select metrics based on your goals. For awareness campaigns, prioritize impressions, reach, and ad recall. For conversion-focused campaigns, emphasize conversion rate, CPA, and ROAS.

Be wary of vanity metrics that look impressive but don't connect to business objectives. High engagement means little if it doesn't translate to meaningful outcomes.

The most relevant metrics also depend on which funnel stage you're targeting: early-funnel campaigns might prioritize awareness metrics, while bottom-funnel efforts focus on conversion and revenue.

Tip 7: Be Patient and Allow Time for Results

Rushing to conclusions without enough data is a common mistake in testing. Without statistical significance, your results are just guesswork, and acting on incomplete data can be costly.

Statistical significance means you can confidently say your results reflect actual audience preferences, not random chance. It’s the difference between knowing your creative works and just hoping it does.
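
One common way to check significance for click or conversion rates is a two-proportion z-test. The sketch below is a minimal version, not a replacement for your ad platform's reporting. The function name and the click counts in the usage line are made up for illustration, and the code assumes at least one click overall.

```python
from math import erf, sqrt

def two_proportion_p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value for the difference between two rates (pooled z-test)."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF written with the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - cdf)

# Hypothetical counts: 40 vs. 80 clicks, each from 4,000 impressions.
p_value = two_proportion_p_value(40, 4_000, 80, 4_000)
print(p_value < 0.05)  # True means the gap is unlikely to be random chance
```

A p-value below 0.05 is a widely used threshold, but it is a convention rather than a guarantee, so pick your threshold before the test starts.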

To ensure reliable results, follow these guidelines:

- Collect enough impressions: Aim for 3,000–5,000 impressions per variant as a starting point. Campaigns with low conversion rates need more data, and lower-traffic campaigns need more time to collect it.

- Run tests long enough: Most tests should last at least 1–2 weeks, even with high traffic, to account for day-of-week variations.

- Account for external factors: Traffic, conversion rates, and seasonal trends affect how long your test should run. During holidays or sales, user behavior shifts, so adjust your testing schedule.
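
To estimate how many impressions your own test needs, you can use the standard sample-size formula for comparing two proportions. The sketch below assumes a two-sided test at 5% significance with 80% power, which are conventional defaults rather than requirements; the function name and the example rates are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist

def impressions_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate impressions per variant needed to detect a relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

# Hypothetical case: a 2% baseline CTR and a hoped-for 20% relative lift.
print(impressions_per_variant(0.02, 0.20))
```

Small lifts on low baseline rates can require far more impressions than a rule of thumb suggests, so it is worth running this kind of estimate before you set a test's budget and end date.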

Avoid ending tests prematurely, as this can lead to false results. A test should conclude only when it reaches statistical significance or hits a set end date without conclusive results.

Patience is a competitive advantage. While others rush to judgment, your commitment to thorough testing will provide more accurate insights and better performance.

Tip 8: Regularly Refresh Ad Creatives Through Testing

One of the most powerful yet underused strategies in ad optimization is creative refreshing. Ad fatigue is real. When your audience sees the same ads repeatedly, engagement drops and acquisition costs climb.

Here's how to build an effective refresh strategy:

Identifying Creative Fatigue

Watch for these warning signs:

- Declining click-through rates over time

- Rising cost-per-click or cost-per-acquisition

- Decreased engagement (likes, shares, comments)

- Reduced conversion rates despite consistent traffic
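
The first warning sign, a declining click-through rate, is easy to monitor automatically. The sketch below compares the most recent week of daily CTRs against the first week after launch. The 7-day window and the 20% drop threshold are assumptions to tune for your own account, and the function name is hypothetical.

```python
from statistics import mean

def shows_fatigue(daily_ctr, window=7, drop_threshold=0.20):
    """Flag fatigue when the latest window's mean CTR falls well below the first window's."""
    if len(daily_ctr) < 2 * window:
        return False  # not enough history to compare two full windows
    baseline = mean(daily_ctr[:window])
    recent = mean(daily_ctr[-window:])
    return (baseline - recent) / baseline >= drop_threshold

# Hypothetical history: one week at 2.0% CTR, then one week at 1.5%.
print(shows_fatigue([0.020] * 7 + [0.015] * 7))  # True
```

The same comparison works for cost-per-click or cost-per-acquisition if you flip the direction and look for a rise instead of a drop.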

Platform-Specific Refresh Frequencies

Different platforms have different optimal schedules:

- High-volume platforms (Facebook, Instagram, TikTok): Every 2-4 weeks

- Search ads (Google, Bing): Every 4-6 weeks

- Display networks: Every 3-5 weeks

- LinkedIn and B2B platforms: Every 6-8 weeks
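
If you track launch dates, these ranges can be turned into a simple refresh schedule. The week ranges in the sketch below come from the list above, while the platform keys, the function name, and the launch date are hypothetical.

```python
from datetime import date, timedelta

# Refresh windows in weeks (earliest, latest), taken from the list above.
REFRESH_WEEKS = {
    "social": (2, 4),   # Facebook, Instagram, TikTok
    "search": (4, 6),   # Google, Bing
    "display": (3, 5),
    "b2b": (6, 8),      # LinkedIn and similar platforms
}

def refresh_window(platform, launched):
    """Return the earliest and latest suggested refresh dates for a creative."""
    low, high = REFRESH_WEEKS[platform]
    return launched + timedelta(weeks=low), launched + timedelta(weeks=high)

earliest, latest = refresh_window("social", date(2024, 1, 1))
print(earliest, latest)  # 2024-01-15 2024-01-29
```

Treat the dates as reminders to review performance rather than hard deadlines, since a creative that is still performing well may not need replacing yet.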

Systematic Approach to Refreshing in Ad Creative Testing

Take a methodical approach rather than completely overhauling your ads:

- A/B test individual elements before full rollout

- Refresh visual elements first, as these typically fatigue fastest

- Next, update headlines and copy points

- Finally, experiment with new CTAs and offer positioning

Create a content calendar specifically for ad refreshes, treating them as a core part of campaign management rather than a reactive measure when performance drops.

Organizations that embrace continuous ad creative testing gain significant advantages. Facebook's partnership with Toluna demonstrated this through meta-analysis of creative tests, achieving a 6.5-point recall lift by systematically optimizing variables like ad length and captions.

Implement Ad Creative Testing to Keep Your Campaigns on Track

Effective ad creative testing is essential for staying ahead in today’s competitive landscape. By testing with purpose, patience, and a strategic approach, you can uncover insights that drive better engagement, higher ROAS, and more impactful campaigns. Remember, the key is to test consistently, analyze rigorously, and optimize continuously. If you're looking to supercharge your testing process and gain deeper, data-driven insights with ease, platforms like Pixis can help you automate and scale your ad creative testing, allowing you to focus on what truly matters: delivering results that resonate with your audience.