ChatGPT vs Prism: What Happened When I Ran the Same Meta Ads Request Through Both

I recently ran a pretty simple experiment.

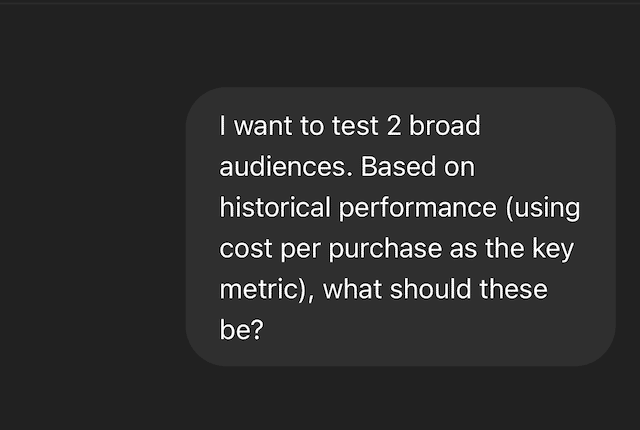

I wanted to define two broad audiences to test on Meta Ads — nothing fancy, just something grounded in actual performance history, with cost per purchase as the only metric that mattered.

So I posed the same question to two tools:

- ChatGPT (I had to build a Meta Ads report and give it the CSV it needed).

- Prism, our own specialist AI built for performance marketers.

The idea of this test was twofold:

- How much work is it to give ChatGPT the information it needs to even answer my questions?

- How well does ChatGPT perform against our own performance marketing AI when I do give it the data it needs?

Here’s what happened — and why only one of them gave me something I could actually use.

ChatGPT: The Long Way Around

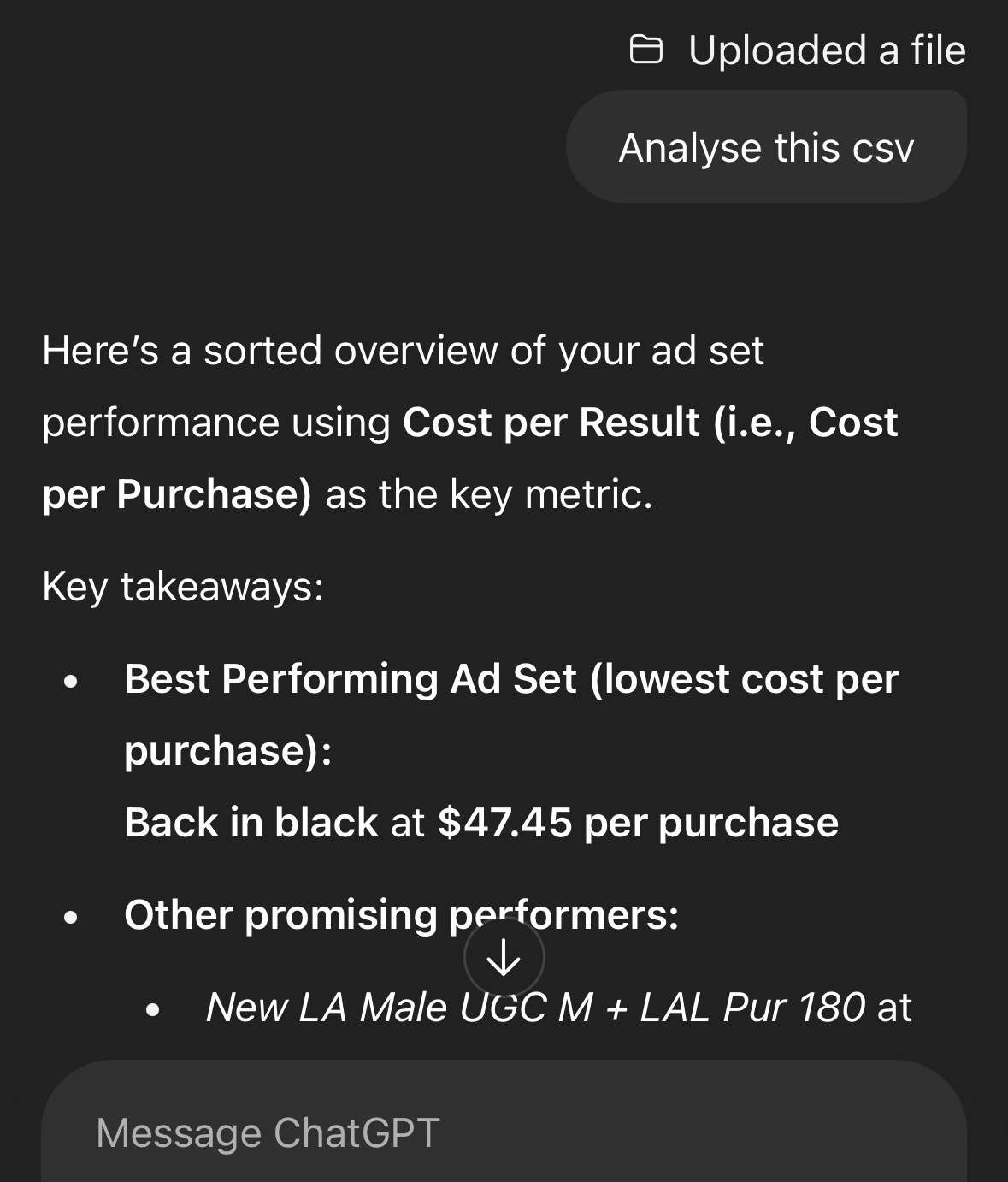

First, I exported my Meta Ads performance report — a standard CSV with cost-per-purchase breakdowns across ad sets.
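Cost per purchase itself is simple arithmetic: total spend divided by total purchases. For reference, here is a minimal Python sketch of that per-ad-set calculation on a CSV export. It assumes columns named `Ad set name`, `Amount spent` and `Purchases`. Real Meta Ads exports may label these differently (for example, with a currency suffix), so treat those names as placeholders.

```python
import csv
import io
from collections import defaultdict

# Hypothetical column names; adjust them to match your actual export.
AD_SET_COL = "Ad set name"
SPEND_COL = "Amount spent"
PURCHASES_COL = "Purchases"


def cost_per_purchase_by_ad_set(csv_text: str) -> dict:
    """Sum spend and purchases per ad set, then divide spend by purchases.

    Ad sets with zero purchases are skipped because cost per purchase
    is undefined for them.
    """
    spend = defaultdict(float)
    purchases = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        name = row[AD_SET_COL]
        # Empty cells are treated as zero.
        spend[name] += float(row[SPEND_COL] or 0)
        purchases[name] += float(row[PURCHASES_COL] or 0)
    return {
        name: spend[name] / purchases[name]
        for name in spend
        if purchases[name] > 0
    }
```

Sorting the result by value (for example, `sorted(result.items(), key=lambda kv: kv[1])`) puts the cheapest ad sets first. That ranking is the raw material any tool, general or specialist, has to start from.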

Then I asked ChatGPT this:

Pretty direct, right?

What It Did

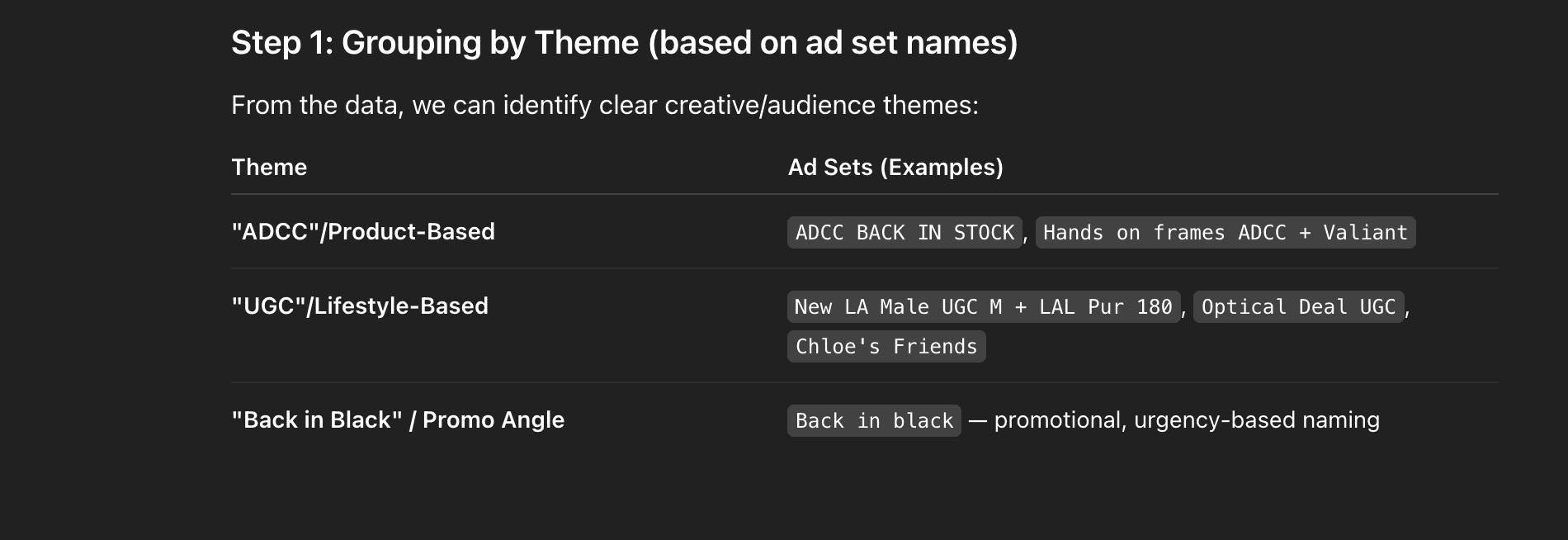

ChatGPT scanned the CSV and — credit where it’s due — did something. It grouped my campaigns by theme.

Themes included:

- Promo-based offers

- UGC / lifestyle content

- Product-focused ads (flagged as high-cost)

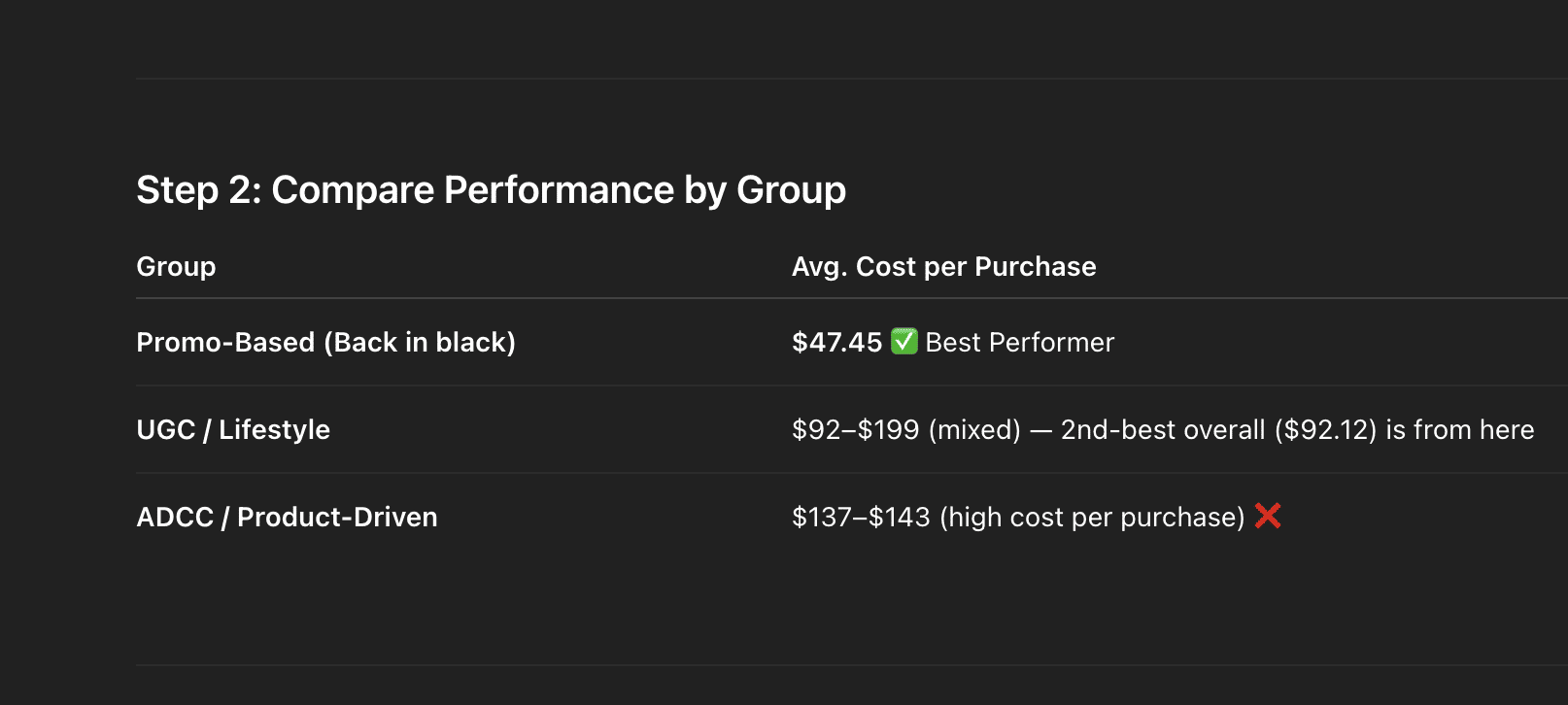

And then it gave me this:

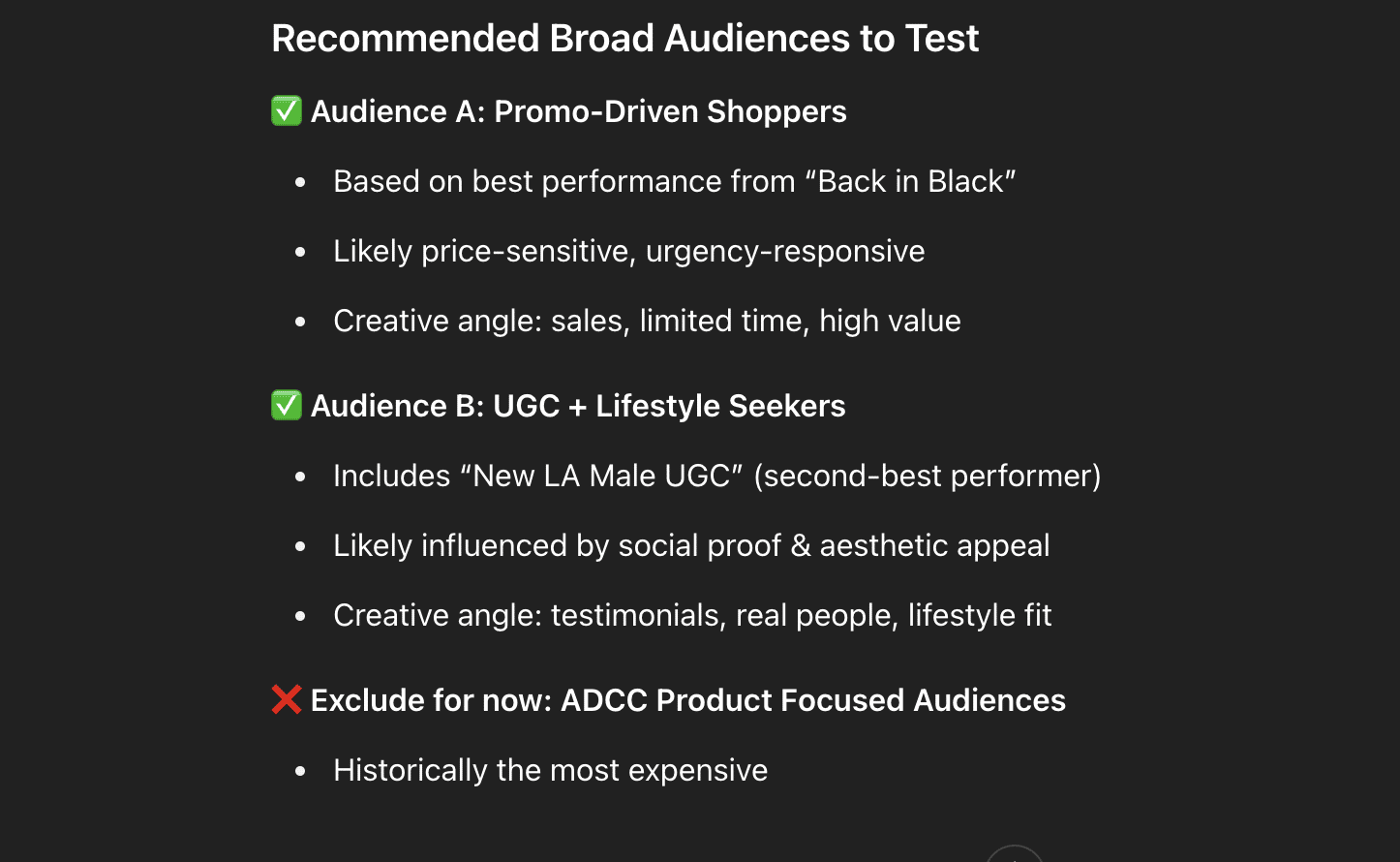

- ✅ Promo-driven shoppers

- ✅ UGC & lifestyle seekers

- ❌ Product-focused audiences (too expensive)

Here’s the catch:

These weren’t audiences.

They were creative buckets.

If I wanted to use this, I still had to:

- Map each theme to actual audience targeting in Meta

- Manually rebuild each test

- Hope I got the logic right

So while ChatGPT technically answered my question, it felt like a really smart intern handing me a half-finished draft.

Prism: The Shortcut

With Prism, I didn’t upload a CSV. I didn’t need a multi-step prompt stack.

I just asked:

And Prism got to work.

What It Did

It ran real-time breakdowns across:

- Age

- Gender

- Country

- Device

- Platform

All without me asking for each. No files uploaded. No nudging required.
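At its core, this is a segmentation analysis: split results by each breakdown dimension, compute cost per purchase for every segment, and rank the segments. The Python sketch below illustrates that idea on rows that are already broken down. It is not Prism's implementation. The `Row` shape, the `cheapest_segments` function and the `min_purchases` threshold are hypothetical, chosen only to show the logic. The threshold keeps a segment from looking cheap just because it has one or two purchases.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Row:
    """One hypothetical breakdown row: a dimension (e.g. "age"), its value, and results."""
    dimension: str
    value: str
    spend: float
    purchases: float


def cheapest_segments(rows, top_n=2, min_purchases=10):
    """Return up to top_n (dimension, value, cost_per_purchase) tuples,
    cheapest first, ignoring segments with fewer than min_purchases purchases."""
    spend = defaultdict(float)
    purchases = defaultdict(float)
    for r in rows:
        key = (r.dimension, r.value)
        spend[key] += r.spend
        purchases[key] += r.purchases

    segments = [
        (dim, val, spend[(dim, val)] / purchases[(dim, val)])
        for (dim, val) in spend
        if purchases[(dim, val)] >= min_purchases
    ]
    segments.sort(key=lambda s: s[2])  # lowest cost per purchase first
    return segments[:top_n]
```

One design caveat: each dimension slices the same underlying results, so an age segment and a country segment overlap. The sketch ranks them side by side, but deciding how to combine them into two distinct test audiences is still a judgment call.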

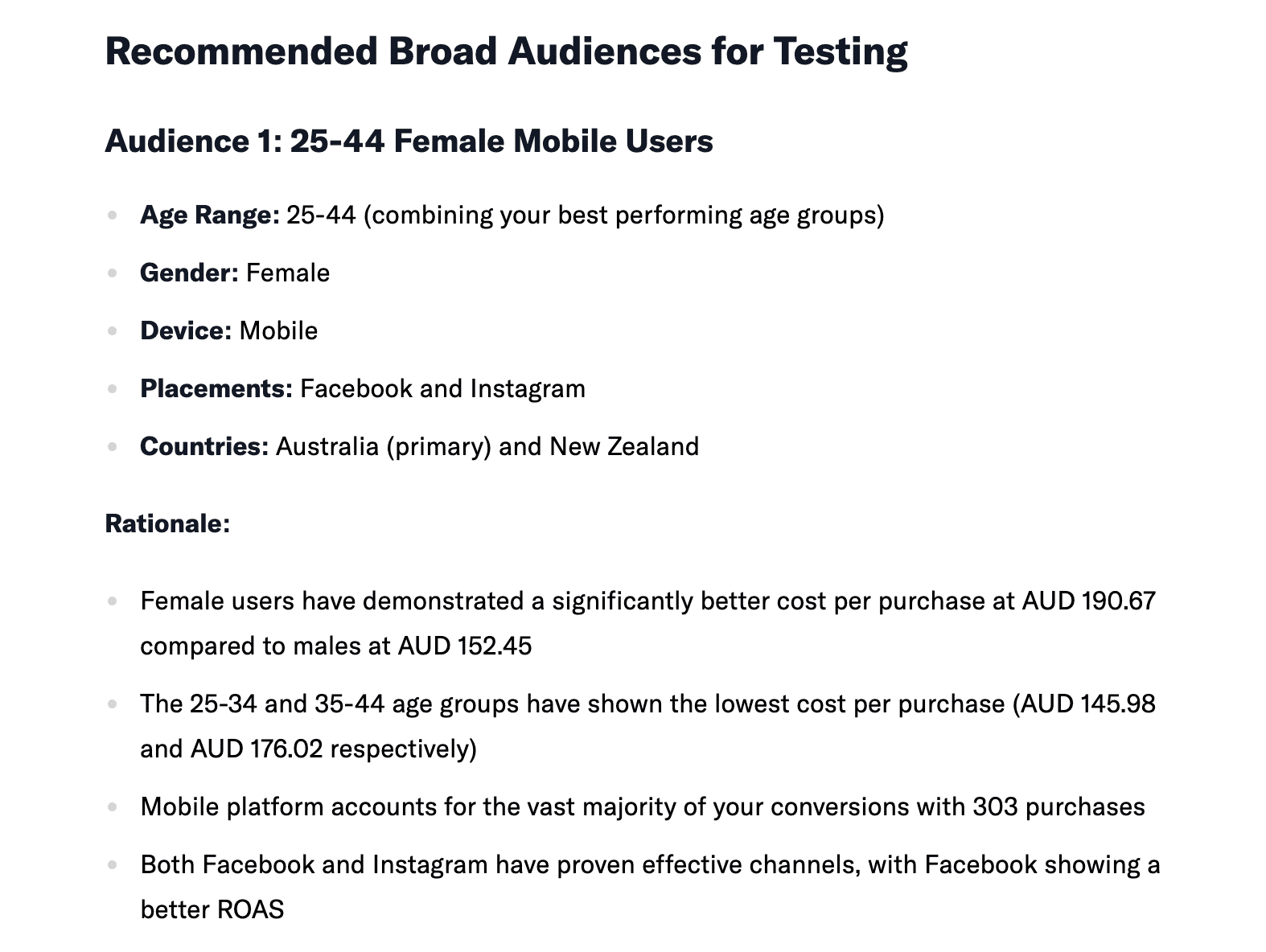

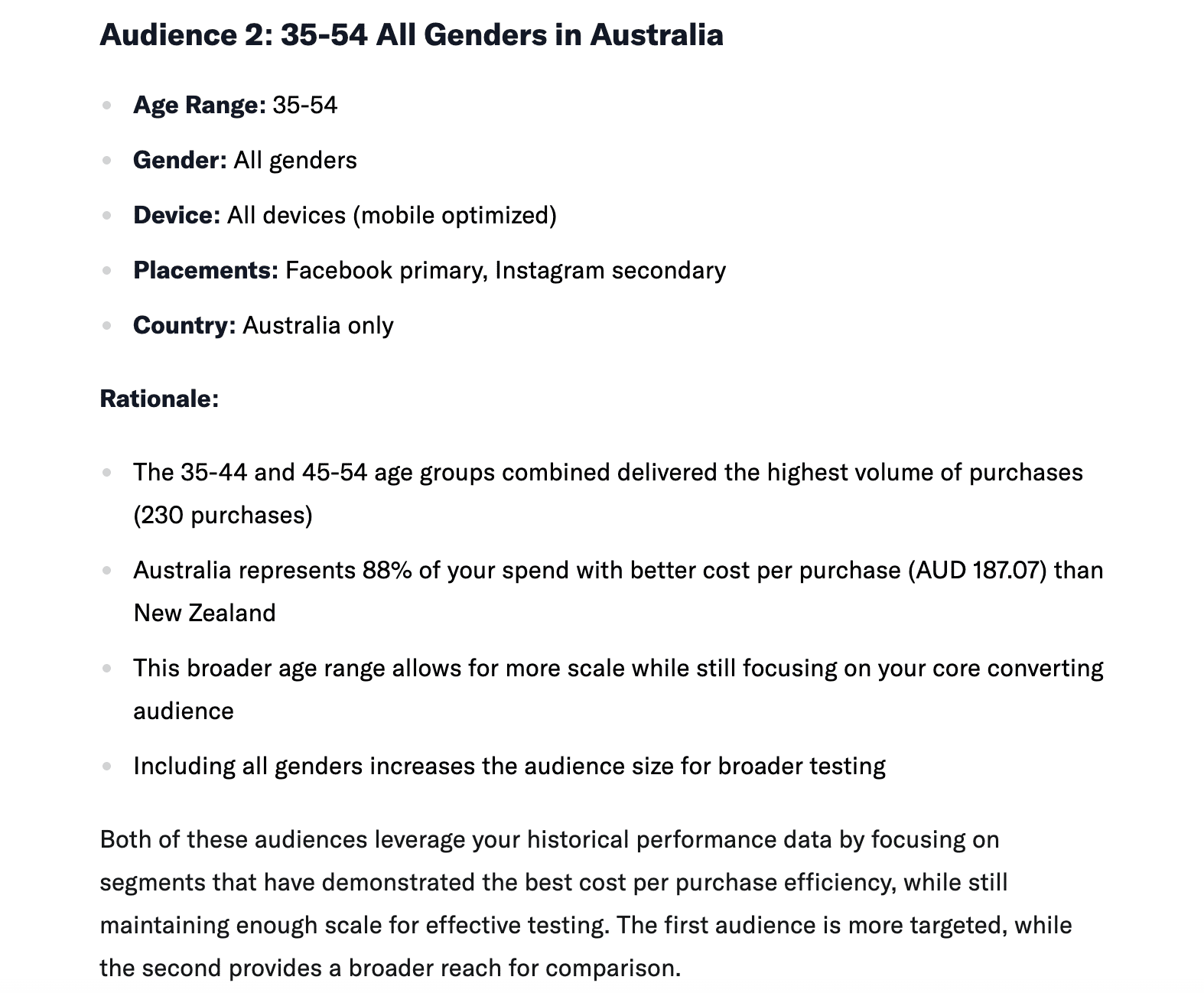

Then it gave me these:

And Prism didn’t stop at just giving me these segments — it told me why they performed and why they were worth testing.

The Real Difference

ChatGPT gave me ideas that sounded smart, but I'd have to test them to find out whether they actually were. It didn't give me reasoning that showed it really understood my ask, either. And also… not audiences.

Prism gave me a usable answer.

One felt like a brainstorm.

The other felt like a brief.

Takeaway

Specialist AI models work better for specialist tasks.

And by the way, I haven't even touched on the effort it took just to get ChatGPT the Meta Ads report it needed to do the analysis. It wasn't easy or fun. Honestly, it felt like wasted time.

But say you do get an LLM the data it needs to give you a good answer, and then you ask:

“Who should I target based on actual results?”

You don’t want frameworks. You want targets.

That’s the power of a specialist AI like Prism. It understands what “audience testing” actually means to a performance marketer. It pulls the relevant levers, reads real data, and gives you back exactly what you asked for — audiences, not abstractions.

And that’s why I trust it over a general-purpose LLM when my ROAS is on the line.