Why is Context Engineering the “next big thing”?

Pretty much all performance marketers are now experimenting with AI, but the early thrill of writing ‘clever’ prompts like “write me 10 headlines for my new campaign” is fading.

If you’re like me, you might have realized that even our best wordsmithing often leads to outputs that feel generic, off-brand, or disconnected from the realities of campaign performance.

And that’s not the LLM’s fault. The root of the problem is that AI doesn’t know your business unless you teach it.

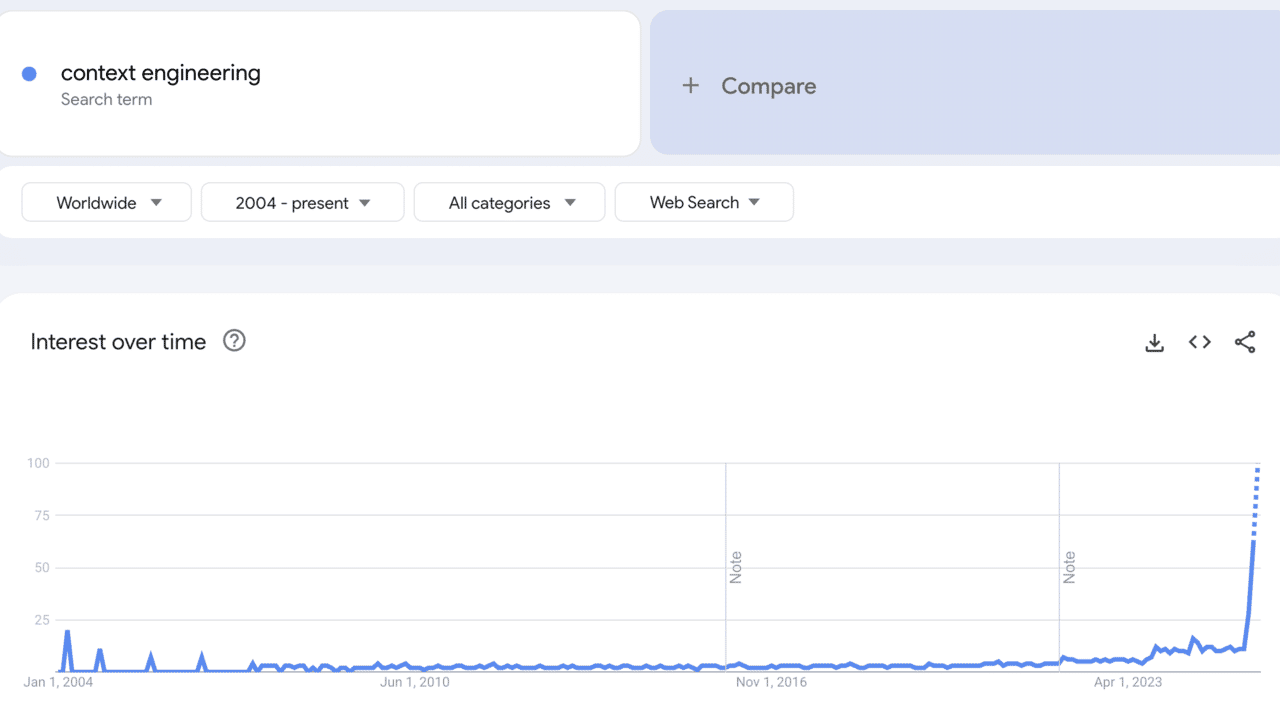

Which is why context engineering has become the new hot AI topic of discussion.

Instead of relying on prompts alone, you can use context engineering to surround the model with the right data, formatted in the right way, at the right time, so it actually has what it needs to give us great responses. As Gartner’s Avivah Litan has noted, generative AI only becomes truly valuable in production when paired with trustworthy context.

The “garbage-in, garbage-out” saying is a little trite. But in my real-world work running performance marketing campaigns with my large CPG customers, I’m seeing it come true.

After a certain point, there are diminishing returns in crafting better prompts. Instead, I’m starting to put more effort into curating the context our AI needs to deliver the kinds of responses I’d expect from an experienced colleague.

Context engineering is a somewhat intimidating-sounding concept.

I’ve done countless tests and spent hours and hours with AI engineers building Prism, our own performance marketing AI, so I could learn everything a performance marketer should know about context engineering, and I’m here to share it all with you.

What’s the difference between prompt engineering and context engineering?

The simplest way to think about it:

Prompt engineering is about phrasing your request. Asking for the right thing, in the right way.

Context engineering is about framing your request with a rich world of relevant details the AI can use to give you a quality answer. Context engineering gives AI the right information, at the right time, in the right format.

Even with a great prompt, responses from an LLM sometimes feel parroted or generic. Context is what sits below the surface: system instructions, chat history, brand data, tools, and goals. That’s what makes the model feel like a partner.

In short: prompts are part of context engineering, but context engineering is the bigger game.

When you enable an LLM with excellent context, prompting gets a lot easier, so you can ask things like this:


How prompt and response quality changes with context engineering

When you don’t provide context, prompts tend to be bloated. You cram in instructions and hope the AI guesses your intent. With context engineering, your prompts can be simple, natural, and still deliver high-quality output.

Here’s an example.

Prompt without context engineering:

Role: You are an expert digital copywriter specializing in Facebook Ads for consumer packaged goods.

Task: Our brand, BrightBite, is launching a new protein bar. You must write five ad copy headlines for Facebook that are clear, persuasive, and tailored to resonate with health-conscious consumers aged 18-36 who are looking for tasty, nutritious, convenient snacks without any artificial ingredients.

Steps to take:

1. Generate 5 ad headlines.

2. Keep each headline under 30 characters.

3. Highlight key benefits: high protein, low sugar, convenient snack.

4. Use an energetic, approachable, and trustworthy tone.

5. Avoid jargon or generic claims.

Refer to well-known buyer psychology principles to guide your thinking.

Format the results as a numbered list. Justify your reasoning for each headline.

Double check your work before replying.

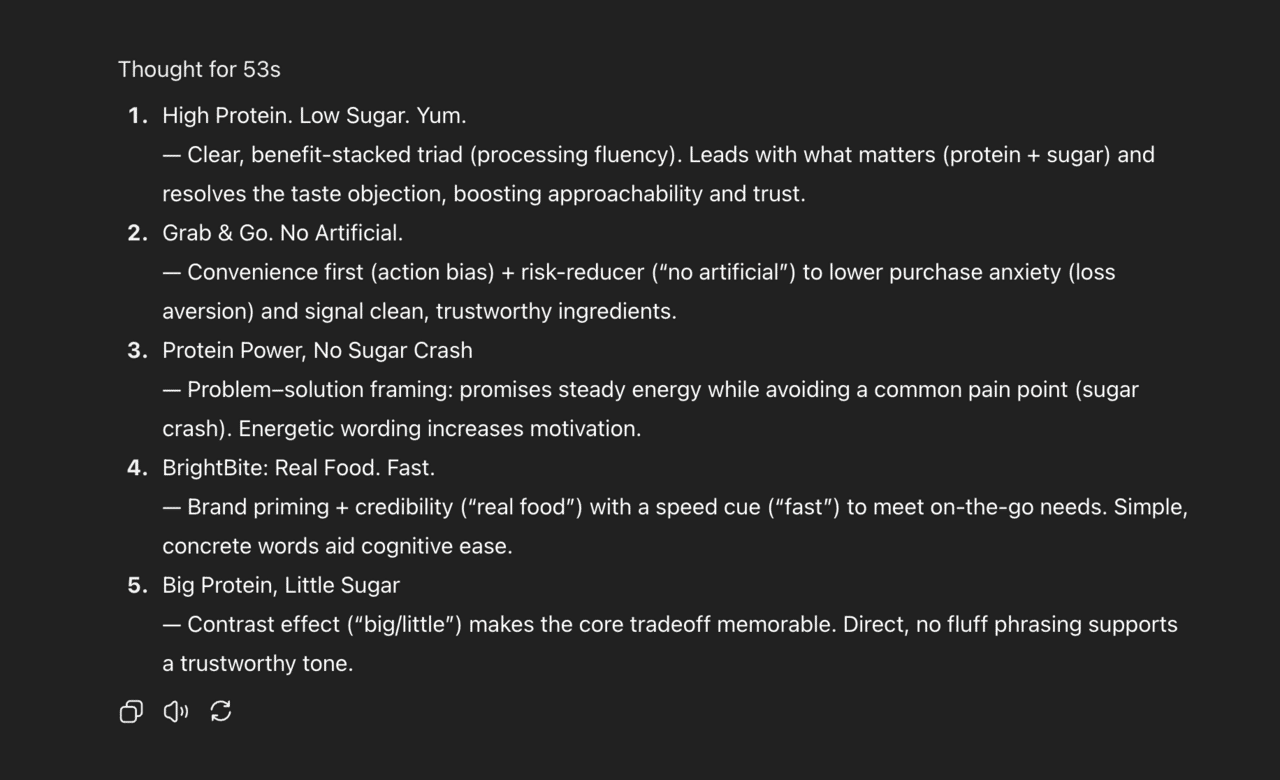

Response without context engineering:

I used that exact prompt with ChatGPT 5 and it’s… fine. It gives me a jumping-off point and some ideas, but nothing about these headlines feels like the BrightBite brand.

With context:

Suggest 5 new Meta Ads headlines for BrightBite’s new protein bar, using the BrightBite Style Guide, the Protein Bar creative brief, and past top-performing campaigns with health-conscious audience segments from the last three months.

This second prompt is shorter, but the outputs will be sharper, more relevant, and closer to publish-ready.

Response with context engineering:

I used Prism for this, because it’s already connected to the ad accounts it needs, and it shows.

Prism has context about the past performance of different audience segments, creatives, and products, and it knows our UVPs, overarching messaging, and brand positioning.

Why?

Because behind the scenes you’ve already given the LLM direct access to your Meta Ads account performance data, it doesn’t need as much direction to decide what works - it can see what works.

Plus, the system prompt you gave it already set it up to act as an expert copywriter, and the examples of great Facebook ads and brand voice documents you gave it help it write headlines in line with your expectations so you don’t need to explain them each time you prompt.

Keep reading to find out how it all works, and how you can do easy context engineering yourself.

What do performance marketers actually need to know about how context engineering works?

If you run CPG campaigns on Meta or Google, you could use AI to optimize spend, come up with better creative, analyze competitors, plan different scenarios, or uncover new audiences. In each of these use-cases, your AI assistant will only be as good as the context you feed it.

People building advanced AI agents get really deep on data structures, vector databases - things I don’t understand, and performance marketers don’t even really need to know about.

Here’s what you do actually need to know:

There are different types of context your LLM needs to perform well

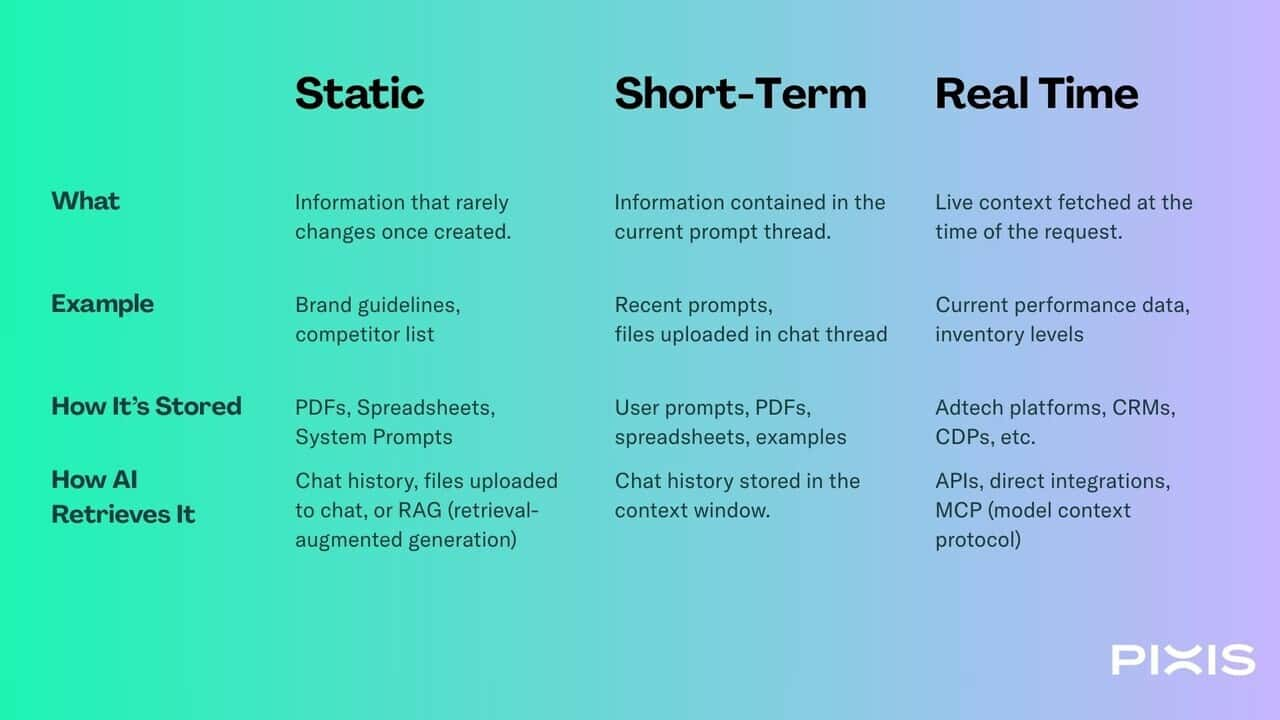

Broadly speaking, there are three types of context relevant to how most performance marketers will use AI.

Static Context

These things are important for your AI to know, and don’t frequently change.

Sources of important static context might include your brand guidelines, UVPs, a description of your ICP, a list of competitors, a list of your products, etc.

You can give an LLM the static context it needs about your business by keeping PDFs of those things handy and uploading them with each new prompt. Many systems also allow you to edit a system prompt (the background instructions for an LLM that stay the same regardless of what a user types in each new session), or to attach a knowledge base of relevant files.

Short-Term Context

These are pieces of information relevant to the problem at hand. They’re not always the same, but they don’t need to be perfectly up to date, either.

Short-term context is usually provided in the form of the prompt itself, but you can also upload a relevant file with each new session or prompt.

Dynamic Context

This is the real-time information an LLM would need to do an excellent job responding to specific prompts about your current situation. Unfortunately, it’s also what performance marketers usually find is missing when they interact with an LLM.

To provide dynamic context, your LLM would need direct access to a live feed of data via API, RSS feed, or direct integration with the system of record.
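If it helps to see how the three types fit together, here is a minimal Python sketch of how an app might combine them into one request. The `ContextBundle` class, the `build_messages` function, and the sample numbers are all made up for illustration. The role/content message layout is a common chat convention, not a description of any specific product.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container for the three context types described above."""
    static: list[str] = field(default_factory=list)      # brand guidelines, UVPs, ICP
    short_term: list[str] = field(default_factory=list)  # files or notes for this task
    dynamic: list[str] = field(default_factory=list)     # fresh data pulled at request time


def build_messages(bundle: ContextBundle, prompt: str) -> list[dict]:
    """Combine the context and the user's prompt into a chat-style message list."""
    # Static context goes into the system message because it rarely changes.
    system_text = "\n\n".join(bundle.static)
    # Task-specific and live context travel with the prompt itself.
    task_parts = bundle.short_term + bundle.dynamic + [prompt]
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": "\n\n".join(task_parts)},
    ]


bundle = ContextBundle(
    static=["Brand voice: energetic, approachable, trustworthy."],
    short_term=["Creative brief: launch of the BrightBite protein bar."],
    dynamic=["Meta Ads, last 7 days: ROAS 2.4, CPA $18.50 (made-up numbers)."],
)
messages = build_messages(bundle, "Suggest 5 new Meta Ads headlines.")
```

Notice that the prompt itself stays short. Everything else rides along as context.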

AI can store and retrieve context in a few different ways

Just a few basic concepts to know here. And more importantly, how they can be relevant to most performance marketers.

Short-term Memory

Many LLMs now have short-term memory that can hold the most important information that persists across chat threads. This is why ChatGPT, for example, knows that I’m a performance marketer who used to work at Bacardi and now works at Pixis. And that I hate em dashes.

(Tip: if you want to see or edit what your LLM “knows” about you, just ask it to share its memory. It’s a great way to “tune” how it responds to you.)

RAG (Retrieval-Augmented Generation)

RAG is, as the name suggests, a retrieval method. When you store data somewhere else, like in a spreadsheet, some LLMs and AI apps allow you to upload that document and call upon it when necessary.

Usually, the data stored in a RAG system is relatively static, or only periodically updated.
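For the curious, here is a toy Python sketch of the retrieve-then-augment idea. It is a simplification: real RAG systems typically use embeddings and a vector database, and plain word overlap stands in for that here. The `DOCUMENTS` dictionary and the function names are hypothetical.

```python
import re

# Hypothetical knowledge base: in practice these would be chunks of your own documents.
DOCUMENTS = {
    "brand_voice": "BrightBite voice is energetic, approachable, and trustworthy.",
    "product_facts": "The protein bar is high protein, low sugar, no artificial ingredients.",
    "legal": "Every ad must avoid medical claims and include required disclaimers.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, top_k: int = 1) -> list[str]:
    """Return the names of the top_k documents sharing the most words with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        DOCUMENTS,
        key=lambda name: len(q_words & tokenize(DOCUMENTS[name])),
        reverse=True,
    )
    return ranked[:top_k]


def augment(question: str) -> str:
    """Prepend the retrieved text to the question before it goes to the model."""
    context = "\n".join(DOCUMENTS[name] for name in retrieve(question))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

Asking "How much protein and sugar is in the bar?" pulls in the product facts and leaves the legal notes out, so the model only sees what is relevant to the question.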

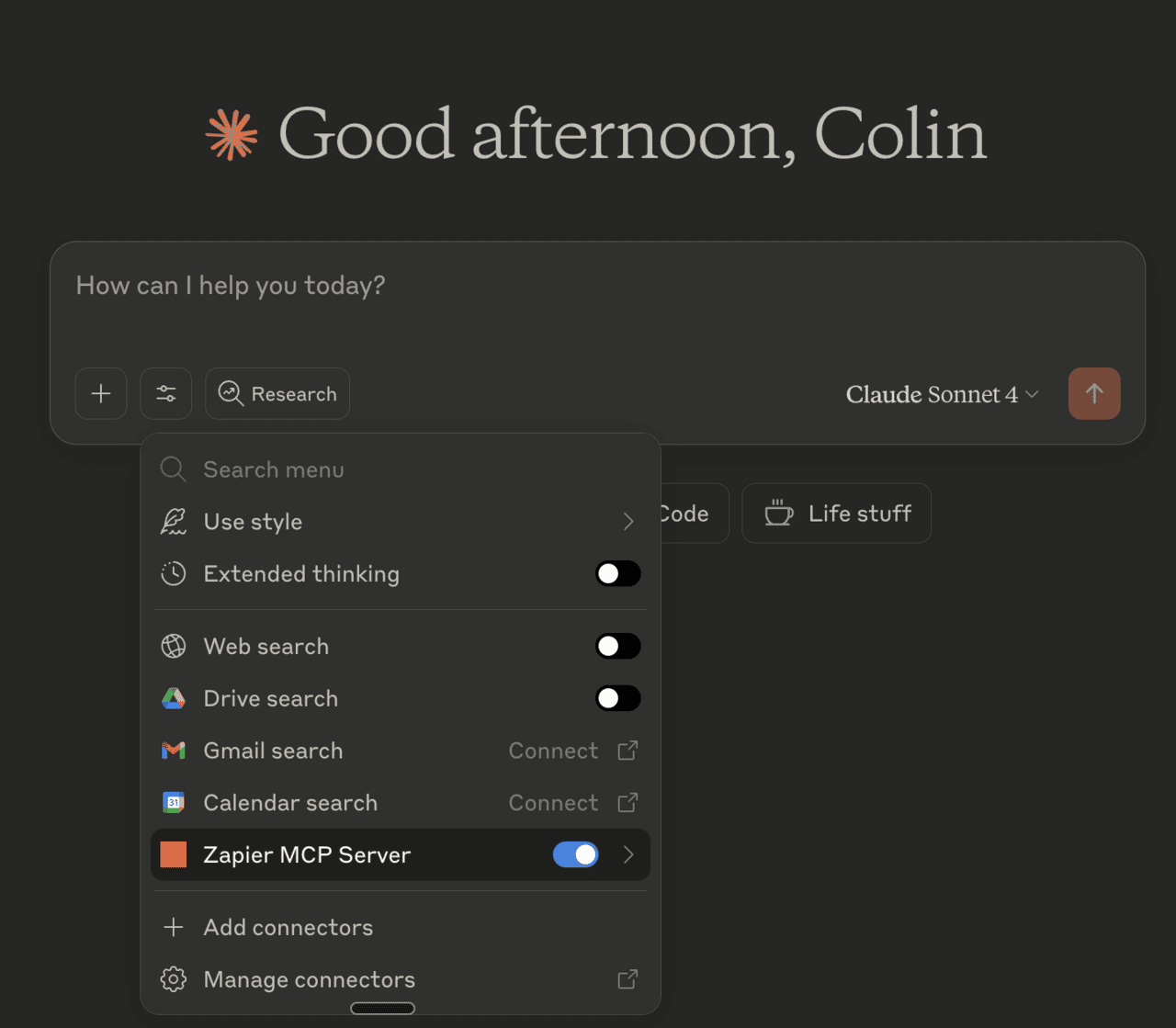

MCP (Model Context Protocol)

Model Context Protocol was developed by Anthropic and lets you connect LLMs to just about any other system so the LLM can access and understand other systems’ data in real time.

(More about how MCP helps performance marketers here.)

Context Window

The context window contains everything the LLM is currently holding in its memory because you’re actively talking about it. Each session or chat thread with an LLM has its own context window. A context window persists for the duration of a single chat thread and includes:

- Your previous prompts

- Any files you’ve uploaded

- The AI’s responses in the thread so far

- Any information retrieved dynamically via API, RAG, or MCP

- The system prompt

- Search results it retrieved for you

The way information is stored (context window vs. separate database) doesn’t always map to the way you need your AI to retrieve it (file upload vs. MCP). Here’s how to think about the times/ways to provide context to your LLM in more human terms:

What sources of context are most relevant in performance marketing?

Too much context can actually be just as bad as too little. Ever hear of choice paralysis? AI can suffer from something similar. Too much “context” can actually be noise. It can take practice to figure out which sources of data actually lead to an AI giving you mind-blowingly valuable responses, and which just lead to more profound mediocrity.
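One simple way to picture the trade-off is a budget: rank your sources by importance and stop adding them once you hit a limit. The Python sketch below does that with a word count. The priority numbers, the 1,000-word budget, and the source names are assumptions for illustration only.

```python
def select_context(sources: list[tuple[str, int, str]], max_words: int) -> list[str]:
    """Keep the highest-priority sources that fit within a word budget.

    Each source is (name, priority, text); a lower priority number means more important.
    """
    chosen = []
    used = 0
    for name, _priority, text in sorted(sources, key=lambda s: s[1]):
        words = len(text.split())
        # Skip anything that would push the total over the budget.
        if used + words <= max_words:
            chosen.append(name)
            used += words
    return chosen


# Placeholder texts of 300, 500, and 5,000 words.
sources = [
    ("brand_guidelines", 1, "voice " * 300),
    ("last_quarter_report", 2, "metric " * 500),
    ("full_five_year_archive", 3, "row " * 5000),
]
selected = select_context(sources, max_words=1000)
```

Here the brand guidelines and last quarter's report make the cut, while the five-year archive is left out as probable noise.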

The data sources I’ve found most useful for context engineering for performance marketing use cases are:

- Ad account connections via MCP. Google Ads, Meta Ads, TikTok, etc. let you give actual campaign data to your LLM in real time so it has access to spend, ROAS, CPA, and CTR by audience, campaign, etc.

- Files & spreadsheets via file upload from Excel, PowerPoint, or Google Sheets. Usually for one-off questions and guidance about work done outside of a system accessible by MCP. For example, analysis of a budget allocation plan, media plan, monthly performance report, or creative brief.

- Examples of winning creative via file upload. Past high-CTR ad copy, visuals, or subject lines.

- Brand rules and business goals stored in a simple PDF file and accessed by the LLM with RAG. Include voice, tone, compliance guidelines, revenue targets, and relevant preferences like channel priorities.

- Constraints via RAG or system prompt. Budget caps, audience exclusions, mandatory legal disclaimers.

How to do basic context engineering with tools you already have

Platforms like ChatGPT and Claude already include features that enable lightweight context engineering.

Here’s how to use them today:

Projects in both ChatGPT and Claude allow you to store your brand guidelines, audience personas, and campaign history once, then work off of that foundation of context for each new chat thread. A Project automatically loads those documents into the context window in the background when you submit your first prompt, without the need to re-attach the docs each time.

(Claude Projects have the added benefit of cross-team collaboration features, which is nice.)

Or just upload files like last quarter’s Meta and Google Ads reports or creative briefs. The AI can use them to ground its recommendations, and will remember them for the duration of the chat thread.

Custom GPTs sound intimidating but are extremely easy to create, and there’s almost no risk in doing it wrong. They allow you to build a specialized version of ChatGPT configured with your brand’s messaging and CPG audience insights (the underlying model isn’t retrained; it’s given instructions and files to refer to). It’s kind of like a Project in that you can add files it should refer to, but it also gives you the ability to edit the system prompt and set other things like how “creative” or “conservative” it should be.

Quick heads up on giving an LLM sensitive data: before uploading, strip out sensitive data (like customer PII) and use secure connections. Stay safe out there!
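As a rough illustration of "strip out sensitive data", here is a small Python sketch that masks email addresses and phone-like numbers before text is shared. The two patterns are deliberately simple assumptions: they will miss some formats and may also mask things that are not phone numbers (long digit strings such as dates), so treat this as a first pass and not a compliance tool.

```python
import re

# Simplified patterns, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)
```

Running `redact("Contact jane.doe@example.com or +1 (555) 010-2030 about order 42.")` returns `"Contact [EMAIL] or [PHONE] about order 42."`.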

Context Engineering with MCP in Practice

Prism uses MCP to turn your live marketing data into decisions you can act on. Instead of uploading PDFs or waiting for end‑of‑month decks, Prism connects to your sources, assembles role‑aware context, and returns clear next steps you can approve. The goal is simple: less prompting, more performing.

You can also set up an MCP server with Zapier, connect it to data sources like Google Analytics or Semrush, then drop that server’s URL into Claude as a connection.

Now, I’m sure they’re still working on this, but I’ve tested it and was underwhelmed. My takeaway was that it was worth trying to see how MCP worked, especially because it was free. But we’ve learned through practice that each MCP server needs to be carefully built to work well in specific contexts.

So I’m biased, but I prefer ours, because it actually works.

How Prism uses MCP

Live connections

Prism connects directly to your most important ad platforms, so performance data is at the ready. It knows how to pull the right reports all on its own to assemble the raw data it needs to perform tasks you assign it.

Role‑aware context assembly

The same question looks different for a CMO, CFO, and Media Buyer. Prism tailors inputs and outputs to the role. So a CFO can focus on CAC, margin risk, and pacing, while a Media Buyer sees the information about creative fatigue and the health of each Ad Set.

It can actually take actions (with approval)

Prism goes beyond suggesting changes. Using the same MCP connection to your ad platforms, it can also execute changes after your approval. Every action is logged with who asked, what changed, and why.
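To make the "approve, then act, then log" pattern concrete, here is a generic Python sketch. It is not Prism's actual implementation. `ProposedChange`, `execute_with_approval`, and `audit_log` are hypothetical names, and the step that would call an ad platform is left as a comment.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ProposedChange:
    """A hypothetical change an assistant wants to make to an ad account."""
    requested_by: str
    description: str
    reason: str


audit_log: list[dict] = []


def execute_with_approval(change: ProposedChange, approved: bool) -> bool:
    """Log the request, and only apply it when a human has approved it."""
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "who": change.requested_by,
        "what": change.description,
        "why": change.reason,
        "approved": approved,
    })
    if not approved:
        return False
    # A real system would call the ad platform here; this sketch only records the decision.
    return True
```

The point of the design is that the log entry is written whether or not the change is approved, so there is always a record of who asked, what was proposed, and why.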

In action, it comes together to look like this:

Best context engineering-enabled prompts performance marketers can try:

Budget reallocation

Prompt: “Recommend a 15% budget shift for this week that improves blended ROAS without raising CPA. Include campaign and ad set level moves, confidence, and projected impact.”

Creative fatigue triage

Prompt: “Find creatives with week‑over‑week ROAS down 20% or more and suggest two replacement concepts based on our top hooks.”
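If you want to sanity-check what the AI returns for a prompt like this, the underlying rule is easy to compute yourself. Here is a minimal Python sketch, assuming ROAS is revenue divided by spend and that each row carries last week's and this week's totals. The field names are made up.

```python
def fatigued_creatives(rows: list[dict], threshold: float = -0.20) -> list[str]:
    """Return creative names whose ROAS fell by 20% or more week over week."""
    flagged = []
    for row in rows:
        # Skip rows where a ratio cannot be computed.
        if row["spend_prev"] == 0 or row["spend_curr"] == 0 or row["revenue_prev"] == 0:
            continue
        prev_roas = row["revenue_prev"] / row["spend_prev"]
        curr_roas = row["revenue_curr"] / row["spend_curr"]
        change = (curr_roas - prev_roas) / prev_roas
        if change <= threshold:
            flagged.append(row["creative"])
    return flagged


# Made-up numbers: video_a drops from 3.0 to 2.1 ROAS (-30%), static_b from 2.0 to 1.9 (-5%).
rows = [
    {"creative": "video_a", "spend_prev": 1000, "revenue_prev": 3000,
     "spend_curr": 1000, "revenue_curr": 2100},
    {"creative": "static_b", "spend_prev": 500, "revenue_prev": 1000,
     "spend_curr": 500, "revenue_curr": 950},
]
flagged = fatigued_creatives(rows)
```

With these sample rows, only `video_a` gets flagged.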

Wasted spend audit

Prompt: “Identify spend that did not meet CPA target in the last 14 days. Group by audience and placement. Recommend cuts or caps.”

Anomaly drill‑down

Prompt: “CPA spiked on June 14 while CTR improved. Explain why and suggest a fix.”

Audience expansion

Prompt: “List underused audiences and propose two net‑new segments based on recent conversions.”

Quality Control

Even with perfect context, AI outputs need review. Think of context engineering + guardrails as your new QA process.

Your “definition of done” checklist should cover:

- On-brand voice and style.

- Platform compliance (e.g., Google’s 30-character headline limit).

- Alignment with campaign goals and KPIs.

- Avoidance of bias, stereotypes, or sensitive claims.
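Some of these checks can be automated before a human ever looks at the copy. The Python sketch below covers only the mechanical ones (length and banned phrases); brand voice, goal alignment, and bias still need a person. The function name and the banned-phrase list are hypothetical.

```python
def review_headline(
    headline: str,
    max_chars: int = 30,
    banned: tuple[str, ...] = (),
) -> list[str]:
    """Return a list of problems found; an empty list means the headline passed."""
    problems = []
    if len(headline) > max_chars:
        problems.append(f"too long: {len(headline)} > {max_chars} characters")
    lowered = headline.lower()
    for phrase in banned:
        # Simple substring match, so "cure" also catches "Cures".
        if phrase.lower() in lowered:
            problems.append(f"contains banned phrase: {phrase}")
    return problems
```

For example, `review_headline("Protein That Tastes Like a Treat")` reports that the headline is 32 characters, two over the limit, while `review_headline("20g Protein, Low Sugar")` comes back clean.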

Walden Yan, Co-Founder at Cognition AI, emphasizes that every action an AI takes carries implicit decisions — and conflicting or missing context can break results. A structured evaluation process prevents surprises.

Final thought

There are two things that really help me do my work better:

1) Clear instructions and expectations

2) The information I need to meet those expectations.

The first is important. The second helps me understand what the first actually means.

AI really isn’t any different from us in this regard. It’s not magic. You can’t just tell it to make something great without showing it what great looks like, or giving it the necessary ingredients.

Unlike us, though, AI is terrible at assigning or inferring meaning. If you don’t show it where your prompts fit into a larger picture, it’s not much better than a parrot, randomly squawking and hoping some of its noises sound close to what you want to hear.

So while prompt engineering will help you get better responses from your AI, it’s not enough on its own. You need to be able to provide context. And the marketers who learn to provide it will spend less time re-prompting, more time executing, and see better campaign performance.