I am the ‘human in the loop’ people are referring to when they talk about AI-powered marketing workflows.

My job is to advise B2C brands (our customers) on how to get the most value from our AI models.

Our AI platform handles a lot of the bid and budget optimizations, uncovers new target audiences, and recommends creative variations to test. Because it sees across channels, it can surface insights that would otherwise stay hidden inside the black-box AI models offered by Google and Facebook.

It does that work as a matter of course. For an AI, those are business-as-usual operations.

But somebody needs to ask the out-of-the-box questions, find ways to get the answers, and evaluate ad campaign results in the context of business strategy (or even in the context of office politics).

That’s what I do.

Well, my partner and I do. My partner is our purpose-built LLM for performance marketing, and we call it Prism.

Here’s how Prism and I worked together last week.

Example 1: Crawl/Walk/Run Planning for H2

One of my clients recently reached out with a very specific challenge:

They wanted to grow their business by reaching new interest-based audiences on Meta, but they didn’t want to jump in too fast. Their goal was to explore these audiences carefully without sacrificing their return on ad spend (ROAS).

In short, they wanted a phased plan that would improve both reach and conversions over the short, medium, and long term.

How I Used Prism:

First, I needed a quick but solid baseline analysis of their current campaigns and performance data, so I turned to Prism, our AI-powered performance marketing assistant, to help lay the groundwork.

Choosing Prism for this was straightforward:

It’s designed specifically for media planning and performance marketing. It understands ad platforms like Meta at a deep level. Unlike general-purpose tools like ChatGPT or Claude, Prism knows how to structure actionable plans around targeting, bidding, creative, and scaling.

What Prism Did:

Since Prism has all the access it needs, it identified where we already had strong performance from that audience segment, looked for ways to expand that segment, and gave me a crawl/walk/run plan prioritized by effort and impact.

It started with interest-based tests (Crawl), moved on to seasonal dynamic product ads (DPAs) and retargeting (Walk), and recommended scaling through high-value lookalike audiences (Run).

What I Did Next:

This was a fantastic start, but I didn’t accept Prism’s suggestions blindly. I:

- Interpreted the AI’s output carefully, deciding what was relevant, realistic, and timely for the client’s business.

- Asked follow-up questions in Prism to validate certain assumptions and clarify numbers.

- Ultimately, I grouped my recommendations into three clear priorities:

  - Act: Immediately reallocate 15–20% of spend from low-performing markets (like France) to stronger ones (Norway, Italy, Spain) for quick wins.

  - Recommend: Launch a DPA campaign focused on winter sports products, timed ahead of the seasonal peak, to capture demand.

  - Ask: Explore a lookalike audience strategy, but only after initial testing proves its potential, since it would require more resources and longer-term buy-in.
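To make the “Act” step concrete, here is a minimal Python sketch of moving a fixed share of spend out of weaker markets. The market names come from the plan above, but the spend and ROAS figures are hypothetical, and splitting the freed budget in proportion to each receiving market’s ROAS is an illustrative assumption, not Prism’s actual method.

```python
from typing import Dict, List


def reallocate_spend(
    spend: Dict[str, float],
    roas: Dict[str, float],
    donors: List[str],
    share: float = 0.15,
) -> Dict[str, float]:
    """Shift `share` of each donor market's spend to the other markets.

    Freed budget is split across receiving markets in proportion to their
    ROAS (an illustrative rule). Total spend stays the same.
    """
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    receivers = [m for m in spend if m not in donors]
    if not receivers:
        raise ValueError("need at least one receiving market")

    new_spend = dict(spend)
    freed = 0.0
    for market in donors:
        cut = spend[market] * share
        new_spend[market] -= cut
        freed += cut

    total_roas = sum(roas[m] for m in receivers)
    for market in receivers:
        # Fall back to an even split if the receivers' ROAS sums to zero.
        weight = roas[market] / total_roas if total_roas > 0 else 1 / len(receivers)
        new_spend[market] += freed * weight
    return new_spend


if __name__ == "__main__":
    # Hypothetical monthly spend and ROAS, not client data.
    spend = {"France": 10_000.0, "Norway": 5_000.0, "Italy": 5_000.0, "Spain": 5_000.0}
    roas = {"France": 2.0, "Norway": 6.0, "Italy": 4.0, "Spain": 2.0}
    for share in (0.15, 0.20):  # the 15-20% range from the plan
        print(share, reallocate_spend(spend, roas, donors=["France"], share=share))
```

Weighting by ROAS is only one option. You might instead weight by marginal ROAS or cap how much any single market can absorb, which is exactly the kind of judgment call that stays with the human in the loop.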

Prism gave me speed and objectivity. I provided strategy and context.

Together, we moved from “what should we do?” to a concrete roadmap in a few minutes.

Example 2: Creative Analysis – Are Our Static Ads Still Working?

Creative fatigue is one of the most common silent killers in performance marketing. So when a client came to me and said:

“Our static sale creatives feel a little tired. But are they really the problem?”

I knew we needed more than a gut check.

How I Used Prism:

I prompted Prism to analyze the creative in each ad against the rest of the sale ads in the account.

What Prism Did:

It evaluated each ad’s performance across multiple dimensions: CTR, ROAS, conversion rate, CPC, and more. It also looked for patterns across ad types, regions, and formats.
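For anyone who wants to run a rough version of this comparison on their own export, the metrics listed above have standard definitions: CTR = clicks / impressions, CPC = spend / clicks, conversion rate = conversions / clicks (one common definition), and ROAS = revenue / spend. The Python sketch below computes them per ad and compares each ad with the pooled totals of all the other ads. The `AdStats` fields and the leave-one-out comparison are assumptions for illustration; this is not Prism’s analysis.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AdStats:
    """Raw delivery numbers for one ad (hypothetical field names)."""
    name: str
    impressions: int
    clicks: int
    conversions: int
    spend: float
    revenue: float


def metrics(ad: AdStats) -> Dict[str, float]:
    """Standard ratio metrics; returns 0.0 where a denominator is zero."""
    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0

    return {
        "ctr": ratio(ad.clicks, ad.impressions),
        "cpc": ratio(ad.spend, ad.clicks),
        "conv_rate": ratio(ad.conversions, ad.clicks),
        "roas": ratio(ad.revenue, ad.spend),
    }


def compare_to_rest(ads: List[AdStats]) -> Dict[str, Dict[str, float]]:
    """Divide each ad's metrics by the pooled metrics of all *other* ads.

    Above 1.0 means the ad beats the rest on that metric, except for CPC,
    where lower is better. Ratios are 0.0 when the rest has no data.
    """
    results: Dict[str, Dict[str, float]] = {}
    for ad in ads:
        others = [o for o in ads if o is not ad]
        pooled = AdStats(
            name="rest",
            impressions=sum(o.impressions for o in others),
            clicks=sum(o.clicks for o in others),
            conversions=sum(o.conversions for o in others),
            spend=sum(o.spend for o in others),
            revenue=sum(o.revenue for o in others),
        )
        mine, rest = metrics(ad), metrics(pooled)
        results[ad.name] = {k: (mine[k] / rest[k] if rest[k] else 0.0) for k in mine}
    return results


if __name__ == "__main__":
    # Hypothetical numbers, not the client's data.
    ads = [
        AdStats("sale_action", 20_000, 400, 16, 200.0, 1_600.0),
        AdStats("static_product", 20_000, 200, 4, 200.0, 400.0),
    ]
    for name, ratios in compare_to_rest(ads).items():
        print(name, {k: round(v, 2) for k, v in ratios.items()})
```

Pooling the other ads (summing raw counts before dividing) keeps small, noisy ads from skewing the baseline the way a simple average of their ratios would.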

Prism’s response included a lot of detail and some customer information, so I’m not sharing it here. Maybe another time.

But it did uncover some clear insights and recommendations:

- Ads with strong sale messaging (like "UP TO 50% OFF") had a 4.5% conversion rate, more than 2x that of static product-focused ads.

- Action imagery outperformed static poses significantly. The skiing shots had a ROAS of 32.7 in Canada, versus single digits for the lifestyle-focused creatives.

- Geography mattered. The same creative had dramatically different results in different regions, especially Canada vs the US.

In short, the data was clear:

Action > Static.

Urgency messaging > Brand-only messaging.

Localized relevance > Global uniformity.

What I Did Next:

Here’s where Prism handed me the baton, and I ran with it:

- I explained to the customer that while their static ads weren’t totally broken, their performance was capped during sale cycles because of the way they set up their creatives.

- I recommended building a 3-part urgency sequence using action footage:

  - Prepare: Gear up for the season

  - Launch: Sale is live

  - Full Send: Final days. Get it before it’s gone

- I layered in a creative twist: regional variants to match local relevance. For instance, “Great North Sale” for Canada (where the ski season runs longer) vs. “End-of-Season Steals” for Europe.

- I also flagged potential quick wins, another insight Prism surfaced: swapping out single-font headlines for designs with multiple font sizes, and positioning key copy above the fold.

The customer bought in. We briefed our design team that week and began testing the new sequence in two top-performing regions. It’s still early, but the first indicators are positive: higher engagement, improved CTR, and stronger ROAS right out of the gate.

Example 3: Speed to Insight & Validation on Ad Performance Softness

In this case, I needed to quickly figure out what was causing a drop in campaign performance across Europe.

I didn’t set out with a layered prompt strategy from the start. It unfolded naturally as I explored the issue. With Prism, you don’t necessarily need a highly engineered prompt or a solid plan. You can just start talking to it.

Here’s how it happened:

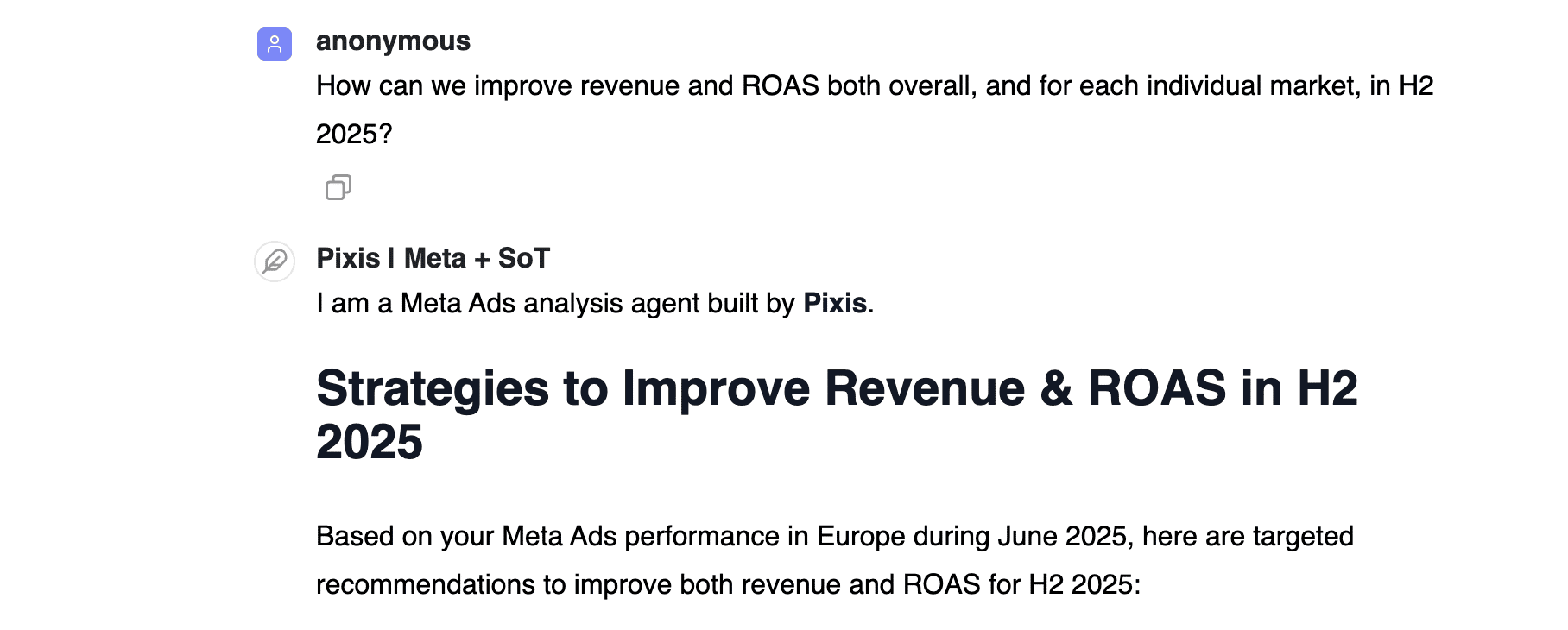

- First, I asked Prism to pull the latest campaign performance for Europe in June 2025.

- Once I had the basics, I realized I needed ROAS too, so I asked for that.

- I then asked Prism for direct insights into the performance softness. My exact prompt was:

What Prism Did:

Prism analyzed all of the data in the background and gave me exactly what I needed:

- Clear recommendations at the Europe-wide level

- Specific budget shifts, down to how much to move, from lower-performing markets to higher-performing ones

- Suggestions to improve ROAS in weaker regions through creative changes, conversion path tweaks, targeting adjustments, and even channel mix shifts

This wasn’t vague advice to “spend more on awareness.” It was granular, actionable, tied to real data, and tailored by market and channel rather than one-size-fits-all.

What I Did Next:

Once I had Prism’s breakdown, I layered in my own judgment:

- I double-checked the seasonal patterns to confirm this wasn’t just expected fluctuation.

- I cross-referenced its market-specific suggestions with team resources and campaign plans.

- I worked with the team to implement some of the high-impact recommendations, focusing on optimizing creative and targeting in a few key markets and adjusting budgets as Prism suggested.
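A seasonality check like the first step above can be as simple as comparing this year’s month-over-month change with last year’s change over the same two months. The Python sketch below assumes a single metric (for example ROAS) and a 5-percentage-point tolerance; the function name, the threshold, and the figures in the usage lines are illustrative assumptions, not a Prism feature or real campaign data.

```python
def is_seasonal_dip(
    prev_this_year: float,
    curr_this_year: float,
    prev_last_year: float,
    curr_last_year: float,
    tolerance: float = 0.05,
) -> bool:
    """True if this year's month-over-month change in a metric is within
    `tolerance` (a fraction, so 0.05 = 5 percentage points) of last year's
    change over the same two months."""
    if prev_this_year <= 0 or prev_last_year <= 0:
        raise ValueError("baseline month values must be positive")
    change_now = curr_this_year / prev_this_year - 1.0
    change_then = curr_last_year / prev_last_year - 1.0
    return abs(change_now - change_then) <= tolerance


if __name__ == "__main__":
    # Hypothetical May -> June ROAS figures.
    print(is_seasonal_dip(5.0, 4.0, 5.0, 4.2))  # -20% now vs -16% last year: True
    print(is_seasonal_dip(5.0, 4.0, 5.0, 4.8))  # -20% now vs -4% last year: False
```

A match with last year only suggests the dip is seasonal. It doesn’t rule out other causes, which is why the remaining checks still matter.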

What stood out to me wasn’t just the speed of the analysis, but how grounded it was in the actual data. Prism didn’t suggest a broad-strokes shift in strategy. It showed exactly where the pressure points were, market by market, and how to act on them.

Without that kind of clarity, it would’ve been easy to either overreact or overlook the real opportunities sitting right in front of us.

What These Examples Prove (Again and Again)

At the end of the day, these aren’t just examples of AI tools doing what they’re programmed to do. They’re examples of something far more powerful: what happens when AI works with a human who knows when to zoom in, when to zoom out, and when to ask better questions.

Prism isn’t here to replace decision-making. It’s here to speed it up.

The real magic lies in how you use that speed:

- To act earlier,

- To test smarter,

- To shift strategies before the moment passes.

And that’s the difference between simply using AI and actually working alongside it.