AI is supposed to make us more efficient and more effective.

So tell me why many of the things I actually need AI to help me with result in a long back-and-forth that just leaves me pissed off, and with less time than when I started.

And I don’t think I’m alone here. Most marketers don’t actually get the benefits promised.

But (and believe me, I hate to say this) the reason most of us waste our time is our own fault. The experts were right: prompt engineering matters, and it’s definitely worth learning.

So I’ve been learning it.

In the past six months, I’ve taken four courses, read countless articles, watched too many YouTube videos, and even started learning DSPy, a framework developed at Stanford that’s designed to replace manual prompt engineering.

Not to mention the in-depth analysis I’ve done of the more than 100k conversations users have now had with our own marketing LLM, Prism.

And I’ve gotten better! I still have conversations with ChatGPT that end with me cursing it out, but far, far fewer than I used to, because after all that learning, I figured out a few things.

Why AI Wastes Your Time

1. Your prompts are vague.

When you say “analyze performance of my last campaign,” the model has to make a lot of guesses.

It guesses what KPI you care about, the date range you’re referring to, and the scope of the request. It may assume the last 30 days instead of the entire lifecycle of the campaign, prioritize CTR instead of ROAS, or look at the last campaign that ran instead of the last campaign that launched.

Getting any one of those things wrong compounds into more errors later in the conversation.

And it probably won’t tell you about the assumptions it’s made so you can correct them. Why? Because it often doesn’t realize it’s making them.

Then you spend the next ten minutes figuring out what went wrong and correcting the guesses, at which point you’d probably have been better off just analyzing the campaign performance yourself.

2. It doesn’t know what good looks like.

If you imagine your AI is an intern, you’ll realize that it’s not enough to just give it instructions. Maybe you’ve had a teacher do that annoying “write me instructions to make a peanut butter and jelly sandwich” exercise.

Your LLM doesn’t actually know what a PB&J (or a weekly dashboard) is supposed to look like. At least, not from real experience. So every little instruction is easy for it to misinterpret.

But pair those instructions with a detailed picture (as a PDF, document, table, or other file) of what you want the output to look like, and suddenly you’ve given your AI a big leg up.

3. You’re asking for help with the wrong tasks.

And you’re probably trying to use AI for too many things.

If you want an off-the-shelf LLM to help with tasks that matter (writing campaign copy, analyzing results, putting together week-over-week reports, diagnosing creative fatigue, etc.), you need to teach it how to do each of those tasks in detail.

If you’re going to spend the time to teach it something, make sure that thing is recurring and important enough to justify the effort.

Instinctively turning to AI every time a task crosses your desk wastes time. Because most tasks are different each time, we either have to write a new prompt and give the AI fresh context, or we waste time on back-and-forth and end up just doing the damn thing ourselves.

In a nutshell, AI sucks because we expect it to make us faster. As a result, we don’t take our time, and it can’t actually make us faster.

How to Prompt AI so It Doesn’t Waste Your Time

Improve the specificity of your prompts

Give directions the way you would to a human analyst. In fact, this is a good litmus test. Write your prompt and bring it to a colleague with zero context about what you’re working on. Ask them if they’d be able to do the thing. If not, chances are good your LLM of choice won’t either.

Bad prompt:

Analyze performance for the last seven days.

Better prompt:

Review my Meta Ads Performance data for the period September 21 - September 27.

Focus on KPI trends, benchmark comparison, efficiency assessment, delivery health, conversion funnel, and anomaly detection.

Primary KPIs: ROAS, CPA, purchases

Efficiency metrics: CPM, CPC, CTR

Delivery metrics: impressions, frequency, spend pacing

Include only campaigns that spent more than $1,000 in the date range.
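If you send this kind of prompt often, it can help to keep the specifics as explicit parameters so none of them gets dropped. Here’s a minimal Python sketch, assuming you assemble prompts in a script before sending them; `build_analysis_prompt` and its parameters are hypothetical names for illustration, not part of Prism or any other tool.

```python
from datetime import date


def build_analysis_prompt(source, start, end, focus, kpi_groups, min_spend):
    """Assemble a specific analysis prompt from explicit parameters (hypothetical helper)."""
    # Spell out the exact dates so the model never has to guess the window.
    window = f"{start:%B} {start.day} - {end:%B} {end.day}"
    lines = [f"Review my {source} data for the period {window}.", ""]
    lines.append(f"Focus on {', '.join(focus)}.")
    lines.append("")
    # One line per metric group, e.g. "Primary KPIs: ROAS, CPA, purchases".
    for label, metrics in kpi_groups.items():
        lines.append(f"{label}: {', '.join(metrics)}")
    lines.append("")
    # State the inclusion filter instead of leaving scope to the model.
    lines.append(
        f"Include only campaigns that spent more than ${min_spend:,} in the date range."
    )
    return "\n".join(lines)


prompt = build_analysis_prompt(
    source="Meta Ads performance",
    start=date(2024, 9, 21),  # the year is arbitrary; only month and day appear
    end=date(2024, 9, 27),
    focus=["KPI trends", "benchmark comparison", "efficiency assessment",
           "delivery health", "conversion funnel", "anomaly detection"],
    kpi_groups={
        "Primary KPIs": ["ROAS", "CPA", "purchases"],
        "Efficiency metrics": ["CPM", "CPC", "CTR"],
        "Delivery metrics": ["impressions", "frequency", "spend pacing"],
    },
    min_spend=1000,
)
print(prompt)
```

The design choice here is that a missing date range or spend filter becomes a missing argument, which is much harder to overlook than a missing phrase.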

Describe your desired output in detail

Humans are good at spotting signal in charts and tables. It’s harder to recognize quality insights from written words alone.

Luckily for us, LLMs have gotten pretty darn good at producing clear visual output when you tell them what you need.

If you want speed, ask for a table and define the columns. This makes validation instant, and it lets you drop the output into Sheets or a BI tool without reformatting.

Example:

For all ads from the last 14 days, return a table with columns: ad name, ad set name, campaign name, total spend, female spend, male spend, female spend percent, male spend percent. Sort by total spend, descending.

This prompt assumes your LLM already has access to the data it needs, obviously. But if you’re not using Prism or another MCP-enabled AI tool, you can upload a CSV and ask the model to transform it into whatever table format you like.

You can do the same for cohort views, creative performance, geo splits, or funnel-stage rollups. State the time window, filters, and columns each time, and the model will usually respect them.
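If you have the export yourself, it’s worth computing the same table once on your own so you can spot-check the model’s version. The sketch below assumes a hypothetical CSV with one row per ad per gender and the columns `ad_name`, `ad_set_name`, `campaign_name`, `gender`, and `spend`, already limited to the last 14 days; real export column names will differ, and the sample rows are made up.

```python
import csv
import io
from collections import defaultdict


def _pct(part, total):
    # Avoid dividing by zero for ads with no spend.
    return round(100 * part / total, 1) if total else 0.0


def gender_spend_table(csv_text):
    """Aggregate spend per ad by gender and sort by total spend, descending.

    Assumes a hypothetical export with one row per ad per gender and the
    columns ad_name, ad_set_name, campaign_name, gender, spend.
    """
    sums = defaultdict(lambda: {"total": 0.0, "female": 0.0, "male": 0.0})
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = (row["ad_name"], row["ad_set_name"], row["campaign_name"])
        spend = float(row["spend"])
        sums[key]["total"] += spend  # every gender bucket counts toward the total
        gender = row["gender"].strip().lower()
        if gender in ("female", "male"):
            sums[key][gender] += spend

    table = []
    for (ad, ad_set, campaign), s in sums.items():
        table.append({
            "ad name": ad,
            "ad set name": ad_set,
            "campaign name": campaign,
            "total spend": round(s["total"], 2),
            "female spend": round(s["female"], 2),
            "male spend": round(s["male"], 2),
            "female spend percent": _pct(s["female"], s["total"]),
            "male spend percent": _pct(s["male"], s["total"]),
        })
    return sorted(table, key=lambda r: r["total spend"], reverse=True)


sample = """ad_name,ad_set_name,campaign_name,gender,spend
Ad A,Set 1,Campaign X,female,60
Ad A,Set 1,Campaign X,male,40
Ad B,Set 2,Campaign X,female,150
Ad B,Set 2,Campaign X,male,50
"""
for row in gender_spend_table(sample):
    print(row)
```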


Make your AI show its work

Trust comes from knowing what the model actually used.

Ask the AI to restate the basics at the top of the result:

- Data source used

- Date range

- Filters that were applied

- Any assumptions it had to make

- Caveats that might affect the result

For example, you can add this to the end of your prompt:

Before the table, list the dataset used, date range, filters, and any assumptions you made. Then give the table. Finish with a short caveats section.

This takes seconds to add to a prompt, and it saves minutes in review.

A mini playbook to prevent hallucinations

You might think it goes without saying that your AI shouldn’t make stuff up. It doesn’t go without saying. Most LLMs are tuned to be sort of “people-pleasy,” which means they’ll do their best to answer your request even when they’re not sure, and they might not tell you they’re unsure.

That said, you do not need heavyweight systems to reduce nonsense. You just need to make it clear that the best way to please you is by being sure of things.

Add a few lines at the end of each prompt to help encourage discipline:

Use only numbers present in the attached file, titled “Meta Ads Export 001.”

If a metric is missing, state ‘not available’. Never estimate.

Show all calculations so totals and percentages can be verified.

If you are uncertain of anything, ask me a clarifying question before answering.

For numeric work, add a quick self-check:

Add a QC row at the bottom that shows whether female spend plus male spend equals total spend. Report Pass or Fail.
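The same check is easy to reproduce outside the model, which is a quick way to confirm the QC row itself isn’t wrong. A minimal Python sketch; the function name `qc_row` and the one-cent tolerance are assumptions, and the sample figures are made up.

```python
def qc_row(female_spend, male_spend, total_spend, tolerance=0.01):
    """Return 'Pass' if female + male spend equals total spend (within a tolerance), else 'Fail'."""
    # The tolerance absorbs cent-level rounding in the exported figures.
    difference = abs((female_spend + male_spend) - total_spend)
    return "Pass" if difference <= tolerance else "Fail"


# Illustrative rows: (female spend, male spend, total spend).
rows = [(60.10, 39.90, 100.00), (150.00, 45.00, 200.00)]
for female, male, total in rows:
    print(female, male, total, qc_row(female, male, total))
```

If your export includes spend that isn’t attributed to either gender (for example, an “unknown” bucket), this check can legitimately fail. That’s exactly the kind of gap you want surfaced rather than smoothed over.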

Your LLM might still make mistakes, but this does help reduce them. More importantly, when it does mess up, it’s fast and easy for you to diagnose what went wrong.

Turn multi-turn exploration into one reusable prompt

When a report or analysis is important enough, you might find yourself iterating five or six times to get the layout, filters, and definitions right. When you reach the output you like, ask the model to consolidate the session into a single prompt.

Template

We arrived at a good format. Write one reusable prompt that reproduces this exact output style, including the provenance section, QC check, and table columns. Replace any specific dates with ‘last 7 days.’ Keep it concise.

Save that prompt. Next time it is one and done.

QA and version your prompts like assets

Treat prompts the way you treat other reporting templates or Excel macros. Give them version numbers and test them on a baseline file before trusting them with live work.

A simple QA flow:

- Choose a baseline dataset where you already know the correct totals.

- Run your prompt and compare its totals and percentages to the source.

- Note any issues, fix the prompt, and rerun.

- Save as v1, v1.1, v1.2 as you improve.

- Record how long the task now takes. Track time saved.
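The compare step can be scripted so every new prompt version gets checked the same way. A rough Python sketch, assuming you’ve already pulled the model’s totals into a dictionary; `compare_to_baseline`, the metric names, the 0.1% tolerance, and the numbers are all hypothetical.

```python
def compare_to_baseline(model_totals, baseline_totals, rel_tolerance=0.001):
    """List every metric where the model's figure is missing or differs from the known baseline."""
    issues = []
    for metric, expected in baseline_totals.items():
        actual = model_totals.get(metric)
        if actual is None:
            issues.append(f"{metric}: missing from model output")
        # Relative tolerance, with a floor of 1.0 so tiny baselines aren't over-strict.
        elif abs(actual - expected) > rel_tolerance * max(abs(expected), 1.0):
            issues.append(f"{metric}: expected {expected}, got {actual}")
    return issues


# Known-correct totals for the baseline file vs. what prompt v1.1 produced (made-up numbers).
baseline = {"total spend": 12500.0, "purchases": 310, "ROAS": 3.2}
model_v1_1 = {"total spend": 12500.0, "purchases": 301}
for issue in compare_to_baseline(model_v1_1, baseline):
    print("v1.1:", issue)
```

An empty list means the version passed on the baseline file; anything else tells you what to fix before saving the next version.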

You will be surprised how quickly a reliable library forms. The confidence payoff is real. Now I don’t feel like I’m going from zero-to-one every single day.

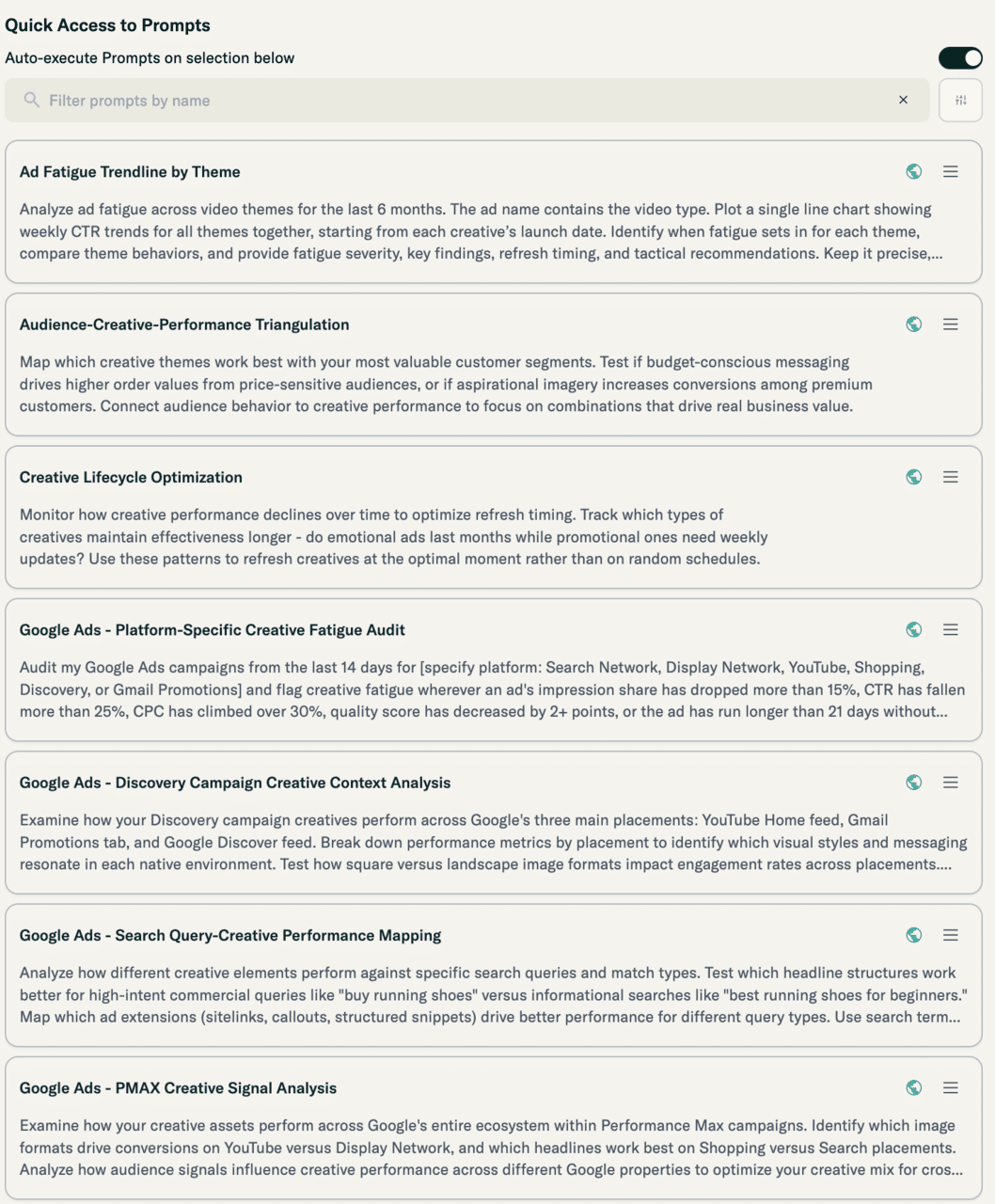

I keep my prompt library right in Prism, but you could keep yours in a Google Doc, OneNote, Notion, Airtable… whatever.

Here’s what my prompt library looks like today.

Keep outputs client-ready and compliant

Insights are not useful if they create risk. Add guardrails so the model stays within brand and legal limits.

Helpful lines:

- Do not make claims beyond the provided data.

- Do not infer causation. Describe correlations only.

- Keep wording factual and neutral. No superlatives.

- Flag any anomalies for human review rather than speculating.

Wrap this into your reusable prompts and you reduce last-minute edits.

Make outputs portable across your stack

If you plan to drop the result into a spreadsheet or a tool, say so. Ask for a clean, CSV-ready table with a header row and no commentary, or ask for pipe-delimited output when commas cause problems. You can even request a short label for the tab name.

Example add-on

After the provenance section, output only a CSV-ready table with a header row. No extra text after the table.
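To make sure a reply really is portable before it goes anywhere, you can parse it instead of eyeballing it. The Python sketch below assumes the reply is the provenance text, then a header row, then table rows with no line breaks inside cells; `extract_csv_table` and the sample reply are hypothetical. Pass `delimiter="|"` for pipe-delimited output.

```python
import csv


def extract_csv_table(reply, expected_header, delimiter=","):
    """Split a reply into its provenance text and table rows.

    Raises ValueError if the header is missing or anything after it
    isn't a well-formed table row. Assumes no cell contains a line break.
    """
    lines = reply.strip().splitlines()
    for start, line in enumerate(lines):
        # Parse each line as CSV so a quoted header still matches.
        if next(csv.reader([line], delimiter=delimiter), []) == expected_header:
            break
    else:
        raise ValueError("Header row not found in reply")

    provenance = "\n".join(lines[:start]).strip()
    rows = [row for row in csv.reader(lines[start + 1:], delimiter=delimiter) if row]
    for row in rows:
        # Trailing commentary usually shows up with the wrong number of fields.
        if len(row) != len(expected_header):
            raise ValueError(f"Unexpected line after the header: {row}")
    return provenance, rows


reply = """Dataset: Meta Ads Export 001
Date range: last 7 days
ad name,total spend
Ad A,100
Ad B,200"""
provenance, rows = extract_csv_table(reply, ["ad name", "total spend"])
print(provenance)
print(rows)
```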

Portability prevents the most common time sink, which is manual cleanup.

Your prompt library, your leverage

Your end goal is a personal library that mirrors your workflow. Keep it simple. Each entry should capture the objective, required inputs, output format, and the small guardrails you rely on. When your process changes, update the prompt version and note what changed.

One way to speed this up is to let the AI draft the first version of your library. Give it three or four of your favorite prompts and ask it to write consistent, reusable templates with clear placeholders for dates, filters, and KPIs. Curate, test, and save.
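If you keep your library in code, or export it from a doc, one entry might look like the Python sketch below. The field names, the `$placeholder` syntax (Python’s `string.Template`), and the sample entry are assumptions for illustration, not how Prism stores prompts.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass
class PromptEntry:
    """One prompt library entry (hypothetical structure)."""
    name: str
    version: str
    objective: str
    template: str        # prompt body with $placeholders for dates, filters, KPIs
    output_format: str
    guardrails: list = field(default_factory=list)
    changelog: list = field(default_factory=list)  # note what changed per version

    def required_inputs(self):
        """Return the placeholder names the template needs, sorted."""
        names = set()
        for match in Template.pattern.finditer(self.template):
            name = match.group("named") or match.group("braced")
            if name:
                names.add(name)
        return sorted(names)

    def render(self, **values):
        """Fill the placeholders and append the output format and guardrails."""
        # substitute() raises KeyError if any placeholder is left unfilled.
        body = Template(self.template).substitute(**values)
        rules = "\n".join(self.guardrails)
        return f"{body}\n\n{self.output_format}\n\n{rules}"


entry = PromptEntry(
    name="Weekly Meta performance review",
    version="v1.2",
    objective="Week-over-week KPI review for campaigns above a spend threshold",
    template=(
        "Review my Meta Ads performance data for $date_range. "
        "Primary KPIs: $kpis. "
        "Include only campaigns that spent more than $min_spend in the date range."
    ),
    output_format=(
        "Before the table, list the dataset used, date range, filters, and any "
        "assumptions you made. Then give the table. Finish with a short caveats section."
    ),
    guardrails=[
        "If a metric is missing, state 'not available'. Never estimate.",
        "Do not infer causation. Describe correlations only.",
    ],
    changelog=["v1.2: added provenance section"],
)

print(entry.required_inputs())
print(entry.render(date_range="the last 7 days", kpis="ROAS, CPA, purchases",
                   min_spend="$1,000"))
```

Because `substitute()` raises a `KeyError` for any unfilled placeholder, a missing date range or KPI list fails loudly instead of quietly turning into a guess.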

Pulling it together

If you want AI to save you time, make the AI’s job easy, just like you would for an untrained intern.

Specify the slice of data, the KPI, the filters, and the table you want back. Ask for provenance and caveats so validation is fast. Add a few guardrails to reduce nonsense. Turn exploratory chats into one reusable prompt, then version and QA that prompt like a real asset. Keep outputs portable so they slide into your stack without cleanup.

Do this for a handful of recurring questions and you will feel the shift. Less back and forth. Less cognitive load. More trust in the numbers. And a lot more time to do the part only you can do, which is think.