The first time I ran competitor ad analysis, I did it badly. I pulled up the Meta Ad Library, scrolled through a few campaigns, saved some screenshots, and dropped them into a deck. It looked neat. But when I had to decide which creative to test next or whether to shift budget, those slides had nothing to offer me.

That was the turning point. I realized competitor ad analysis isn’t about building a gallery of what other brands are running. It’s about turning their ads into signals I can act on: which formats consistently survive, which themes fatigue fast, how promo intensity changes the auction, and how all of that shows up in my own CPCs and CPMs.

Once I started treating it like a workflow instead of a research task, it became one of the most valuable parts of my week. It gave me context for my numbers, sharper briefs for my creative team, and fewer surprises when the market shifted.

Here’s the process I follow today.

Step 1: Collect with intention, and do it on a cadence

I begin by narrowing the universe. I track only the three to five brands my buyers genuinely compare us with. Then I run a light weekly pass across the transparency sources that matter: Meta’s Ad Library for Facebook and Instagram, Google’s Ads Transparency Center for Search, Display and YouTube, and TikTok’s Creative Center for creative style cues. I filter by country so I am not mixing markets, and I only save ads that are clearly active or freshly refreshed. One-off digs do not tell a story. Weekly snapshots do. After a month of consistent capture, you will see arcs: a competitor leaning hard into video, a sudden rush of discount creative, a quiet pullback from a placement that used to be central for them.
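If you keep the weekly pass in a spreadsheet or a script, the capture rules reduce to a simple filter. This is a minimal sketch, assuming you copy ads out of the libraries by hand into rows with hypothetical fields (`ad_id`, `brand`, `country`, `first_seen`, `is_active`). No ad library is being called here, and the seven-day freshness window is an assumption you can tune.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, List, Set


@dataclass
class LibraryAd:
    """One ad copied from an ad library during the weekly pass (hypothetical fields)."""
    ad_id: str
    brand: str
    country: str
    first_seen: date  # date the ad first appeared in the library
    is_active: bool   # shown as active at capture time


def weekly_snapshot(ads: Iterable[LibraryAd], tracked_brands: Set[str],
                    country: str, snapshot_date: date,
                    fresh_days: int = 7) -> List[LibraryAd]:
    """Keep ads from tracked brands in one market that are active or freshly launched."""
    fresh_cutoff = snapshot_date - timedelta(days=fresh_days)
    return [
        ad for ad in ads
        if ad.brand in tracked_brands          # only the brands buyers compare us with
        and ad.country == country              # never mix markets
        and (ad.is_active or ad.first_seen >= fresh_cutoff)
    ]
```

Running the same filter every week, with the same brand set and country, is what turns scattered captures into comparable snapshots.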

Step 2: Code what you capture so patterns can emerge

Folders of images do not produce decisions. Structure does. As soon as I save an ad, I describe it in a common vocabulary so I can compare like with like later. I note the format and placement. I rewrite the hook in one or two lines so it is easy to search. I list the visible creative elements, such as UGC, founder face, product demo, text overlays, before and after, or testimonial. I record whether there is an offer, what kind it is, and the value attached to it. I write down the proof mechanism, from star ratings and quantified outcomes to guarantees and awards, along with the exact call to action and the overall tone. I also log the date the ad first appeared in the library, whether it is still live, how many near-duplicates sit in the set, and how quickly fresh cuts of the same idea are showing up. Those last details become important once you start reading survival and fatigue.
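A fixed schema is what makes a common vocabulary stick. Here is a minimal sketch of one way to hold a coded ad and export the set as CSV. The field names and the `concept_id` used to group near-duplicate cuts are illustrative assumptions, not a standard; with that field in place, near-duplicate counts and refresh speed can be derived by grouping rows rather than typed in by hand.

```python
import csv
import io
from dataclasses import asdict, dataclass, field, fields
from datetime import date
from typing import List, Optional


@dataclass
class CodedAd:
    """One coded ad. Field names are illustrative, not a standard schema."""
    brand: str
    concept_id: str        # groups near-duplicate cuts of the same idea
    format: str            # e.g. "video", "static", "carousel"
    placement: str         # e.g. "reels", "feed", "search"
    hook: str              # the opening rewritten in one or two lines
    elements: List[str] = field(default_factory=list)  # "UGC", "product demo", ...
    offer_type: Optional[str] = None   # "discount", "bundle", "trial" or None
    offer_value: Optional[str] = None  # e.g. "20%"
    proof: Optional[str] = None        # "star rating", "guarantee", ...
    cta: str = ""
    tone: str = ""
    first_seen: Optional[date] = None  # first appearance in the library
    still_live: bool = True


def to_csv(ads: List[CodedAd]) -> str:
    """Serialize coded ads to CSV text so anyone can check the receipts."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(CodedAd)])
    writer.writeheader()
    for ad in ads:
        row = asdict(ad)
        row["elements"] = "|".join(ad.elements)  # keep the list in one cell
        row["first_seen"] = ad.first_seen.isoformat() if ad.first_seen else ""
        writer.writerow(row)  # None values are written as empty cells
    return buf.getvalue()
```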

Step 3: Turn coded ads into signals you can act on

Once the ads are coded, I convert description into signal. I calculate survival days by counting the days between a concept’s first appearance in the library and the latest snapshot in which it is still live. Workhorse ideas tend to survive. I estimate creative density by counting how many new variants appear in a given week. Heavy density paired with short survival usually means fatigue or a search for a fix. I keep a rolling video ratio for the last thirty days so I can spot format pivots without guessing. I measure promo intensity as the share of ads that carry a discount, bundle or trial in the same window. Finally, I summarize the format mix so I can see whether a brand is truly video-led or quietly relying on static and carousel.
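Each of these signals is simple arithmetic over the coded rows. The sketch below assumes every row carries a hypothetical `concept_id`, a format label, an offer flag, the date the ad first appeared in the library, and the last weekly snapshot in which it was still live. The 30-day window matches the text; the rest is illustrative.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass
class AdRow:
    concept_id: str    # groups near-duplicate cuts of one idea (assumed field)
    fmt: str           # "video", "static", "carousel", ...
    has_offer: bool    # carries a discount, bundle or trial
    first_seen: date   # first appearance in the library
    last_seen: date    # latest weekly snapshot where the ad was still live


def concept_survival(ads: List[AdRow]) -> Dict[str, int]:
    """Survival days per concept: earliest first_seen to latest last_seen."""
    first: Dict[str, date] = {}
    last: Dict[str, date] = {}
    for ad in ads:
        first[ad.concept_id] = min(first.get(ad.concept_id, ad.first_seen), ad.first_seen)
        last[ad.concept_id] = max(last.get(ad.concept_id, ad.last_seen), ad.last_seen)
    return {cid: (last[cid] - first[cid]).days for cid in first}


def creative_density(ads: List[AdRow], week_start: date) -> int:
    """New variants whose first appearance falls in the 7 days from week_start."""
    week_end = week_start + timedelta(days=7)
    return sum(week_start <= ad.first_seen < week_end for ad in ads)


def live_in_window(ads: List[AdRow], as_of: date, days: int = 30) -> List[AdRow]:
    """Ads that were live at some point in the trailing window ending at as_of."""
    start = as_of - timedelta(days=days)
    return [ad for ad in ads if ad.first_seen <= as_of and ad.last_seen >= start]


def share(ads: List[AdRow], predicate) -> float:
    """Fraction of ads matching predicate; 0.0 for an empty window."""
    return sum(predicate(ad) for ad in ads) / len(ads) if ads else 0.0


def window_signals(ads: List[AdRow], as_of: date, days: int = 30) -> Dict[str, object]:
    """Rolling video ratio, promo intensity and format mix for one brand."""
    window = live_in_window(ads, as_of, days)
    mix = Counter(ad.fmt for ad in window)
    total = sum(mix.values())
    return {
        "video_ratio": share(window, lambda ad: ad.fmt == "video"),
        "promo_intensity": share(window, lambda ad: ad.has_offer),
        "format_mix": {fmt: n / total for fmt, n in mix.items()},
    }
```

Survival is computed per concept rather than per ad, so a long-running idea that keeps getting fresh cuts still reads as a workhorse.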

These reads change how I interpret my own numbers. If my CTR dips in the same week a rival pushes up promo intensity, I do not throw out my creative. I recognize that the audience is briefly price sensitive, and I adjust expectations or run a counter-message. If my CPM rises while several competitors push up their video ratio, I know I am feeling auction pressure on video placements, and I can protect spend while I test an adjacent lane.
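Written down as rules, those two reads look roughly like this. The thresholds (a 10% CTR drop, a 15-point jump in rival promo intensity, a 10% CPM rise, at least two rivals raising their video ratio) are placeholder assumptions to tune against your own history, not benchmarks.

```python
from typing import List


def pct_change(old: float, new: float) -> float:
    """Relative change; assumes old is non-zero."""
    return (new - old) / old


def interpret_week(ctr_prev: float, ctr_now: float,
                   cpm_prev: float, cpm_now: float,
                   rival_promo_prev: float, rival_promo_now: float,
                   rivals_raising_video: int,
                   ctr_drop: float = 0.10, cpm_rise: float = 0.10,
                   promo_jump: float = 0.15, min_rivals: int = 2) -> List[str]:
    """Combine my week-over-week metrics with competitor signals into reads.

    All thresholds are placeholder assumptions, not benchmarks.
    """
    reads = []
    ctr_fell = pct_change(ctr_prev, ctr_now) <= -ctr_drop
    promo_spiked = rival_promo_now - rival_promo_prev >= promo_jump
    if ctr_fell and promo_spiked:
        # Audience is briefly price sensitive: keep the creative, adjust expectations.
        reads.append("price-sensitive week: hold creative, reset expectations or counter-message")
    cpm_climbed = pct_change(cpm_prev, cpm_now) >= cpm_rise
    if cpm_climbed and rivals_raising_video >= min_rivals:
        # Several rivals leaning into video: auction pressure on video placements.
        reads.append("auction pressure on video: protect spend, test an adjacent lane")
    return reads
```

An empty result is useful too: a CTR dip with no competitor explanation is the case where your own creative deserves a closer look.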

Step 4: Map messages to formats so creative teams get a real brief

Individual ads are less useful than the stories they repeat. Most brands revolve around a small set of narratives: the ease of a routine, price fairness, expert-backed efficacy, community proof, or the emotional payoff. I cluster hooks, offers and proof devices into themes and then watch which formats consistently carry each theme. I often find that expert proof thrives in short UGC with on-screen captions, while price clarity performs quietly in static sequences. That pairing is what I hand to creative. It becomes a one-page map that says which messages are working in this market, which formats deliver them reliably, and three or four opening hooks rewritten in our voice rather than copied from anyone else. The goal is not imitation. The goal is to counter-program with intent or to explore a neighboring angle that competitors have not exploited.
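Clustering is mostly judgment, but a rough first pass can be automated. This sketch tags hooks with themes using hypothetical keyword lists, then counts which format carries each theme most often. The keywords, theme names and format labels are assumptions, and a human should still review whatever lands in the `other` bucket.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Hypothetical keyword lists; order matters because the first matching theme wins.
THEME_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "routine ease": ("minutes", "easy", "routine", "simple"),
    "price fairness": ("price", "save", "%", "free shipping"),
    "expert efficacy": ("dermatologist", "expert", "clinically", "doctor"),
    "community proof": ("reviews", "customers", "community", "join"),
    "emotional payoff": ("feel", "confidence", "love"),
}


def assign_theme(hook: str) -> str:
    """Return the first theme whose keywords appear in the hook."""
    text = hook.lower()
    for theme, words in THEME_KEYWORDS.items():
        if any(word in text for word in words):
            return theme
    return "other"  # left for a human to code by hand


def theme_format_map(ads: List[Tuple[str, str]]) -> Dict[str, Tuple[str, float]]:
    """Map each theme to its most common format and that format's share."""
    by_theme: Dict[str, Counter] = defaultdict(Counter)
    for hook, fmt in ads:
        by_theme[assign_theme(hook)][fmt] += 1
    result = {}
    for theme, counts in by_theme.items():
        top_format, n = counts.most_common(1)[0]
        result[theme] = (top_format, n / sum(counts.values()))
    return result
```

The output is the skeleton of the one-page map: theme, the format that carries it, and how consistently it does.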

Step 5: Read budget posture from patterns rather than guessing spend

I never see a competitor’s exact budget, but I can infer confidence and pressure. Long survival paired with a measured refresh cadence usually means they are sitting on an efficient engine. Frantic refreshes combined with rising promo intensity usually mean acquisition costs are biting or inventory needs to move. Sudden format swings suggest a reset in testing or a scramble to recover performance. I check those patterns against my own CPC and CPM curves. If competitors pull back from Google Shopping while my returns hold, I scale there. If the market floods video and my CPMs climb without a matching lift in CTR, I pull back on that placement and shift tests elsewhere until the pressure subsides. The point is to avoid flying into headwinds I could have seen coming.
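Those posture reads can be expressed as a few heuristics over the signals from Step 3. This is a minimal sketch with made-up thresholds (45 days of median survival, five or twelve new variants a week, a 10-point rise in promo intensity, a 25-point swing in video ratio). It labels patterns and says nothing about actual spend.

```python
from statistics import median
from typing import List


def budget_posture(survival_days: List[int], weekly_density: List[int],
                   promo_intensity: List[float], video_ratio: List[float],
                   long_survival: int = 45, calm_density: float = 5,
                   frantic_density: int = 12, promo_rise: float = 0.10,
                   format_swing: float = 0.25) -> List[str]:
    """Label a competitor's posture from signal series (oldest value first).

    The thresholds are illustrative assumptions, and the labels describe
    patterns, not spend.
    """
    reads = []
    if median(survival_days) >= long_survival and median(weekly_density) <= calm_density:
        reads.append("efficient engine")  # long survival, measured refresh
    if (weekly_density[-1] >= frantic_density
            and promo_intensity[-1] - promo_intensity[0] >= promo_rise):
        reads.append("cost pressure")  # frantic refresh plus rising promos
    if abs(video_ratio[-1] - video_ratio[0]) >= format_swing:
        reads.append("testing reset")  # sudden format swing
    return reads or ["no clear read"]
```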

Step 6: Add guardrails so your read stays honest

Before I label anything a win or a trend, I make sure I am comparing apples to apples. I match geography, device mix and placement so I am not mixing signals. I flag promotion windows so I do not credit a format with a lift that a seasonal discount actually drove. I look at the landing page, because ads do not convert in a vacuum. And I give ideas enough time to breathe so I am not generalizing from what launched yesterday.
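The matching, promo-window and minimum-age guardrails can be enforced mechanically before any comparison runs. This sketch assumes a hypothetical `Observation` record and a list of known promo windows, and uses a 14-day minimum age as a stand-in for "enough time to breathe". Landing page review stays manual. Ads launched inside a promo window are flagged rather than dropped, so you can still look at them separately.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Sequence, Tuple


@dataclass
class Observation:
    ad_id: str
    geo: str
    device: str
    placement: str
    first_seen: date


def split_comparable(obs: List[Observation], geo: str, device: str, placement: str,
                     promo_windows: Sequence[Tuple[date, date]], as_of: date,
                     min_age_days: int = 14) -> Tuple[List[Observation], List[Observation]]:
    """Return (comparable, promo_flagged) for like-for-like ads old enough to judge."""
    def in_promo(day: date) -> bool:
        return any(start <= day <= end for start, end in promo_windows)

    comparable, flagged = [], []
    for o in obs:
        if (o.geo, o.device, o.placement) != (geo, device, placement):
            continue  # different market, device or placement: not apples to apples
        if (as_of - o.first_seen).days < min_age_days:
            continue  # too new to generalize from
        (flagged if in_promo(o.first_seen) else comparable).append(o)
    return comparable, flagged
```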

At the end of a weekly pass, I share four things. I circulate the coded CSV so anyone can check the receipts. I publish a single page that maps dominant themes to the formats that seem to carry them. I outline a two-week test slate that turns those patterns into hypotheses, with a single primary metric for each stage of the funnel. And I write a short budget note that states where we plan to lean in and where we plan to protect. That rhythm is what turned competitor research from a nice-to-have into a real lever on budget and creative decisions.
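To keep the test slate honest, it helps to check the one-primary-metric rule before the two weeks start. In this sketch the funnel stage names, the metric names and the `Hypothesis` record are hypothetical; it assumes each stage shares one primary metric and that the 14-day window is counted inclusively from the start date.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List

FUNNEL_STAGES = ("awareness", "consideration", "conversion")  # hypothetical stages


@dataclass
class Hypothesis:
    stage: str
    hypothesis: str
    primary_metric: str


def build_slate(tests: List[Hypothesis], start: date) -> Dict[str, object]:
    """Check one primary metric per funnel stage, then fix a 14-day window."""
    for stage in FUNNEL_STAGES:
        metrics = {t.primary_metric for t in tests if t.stage == stage}
        if len(metrics) != 1:
            raise ValueError(f"{stage}: expected one primary metric, got {sorted(metrics)}")
    return {"start": start, "end": start + timedelta(days=13), "tests": tests}
```

Failing loudly when a stage has zero or several primary metrics is the point: a slate that measures everything usually proves nothing.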

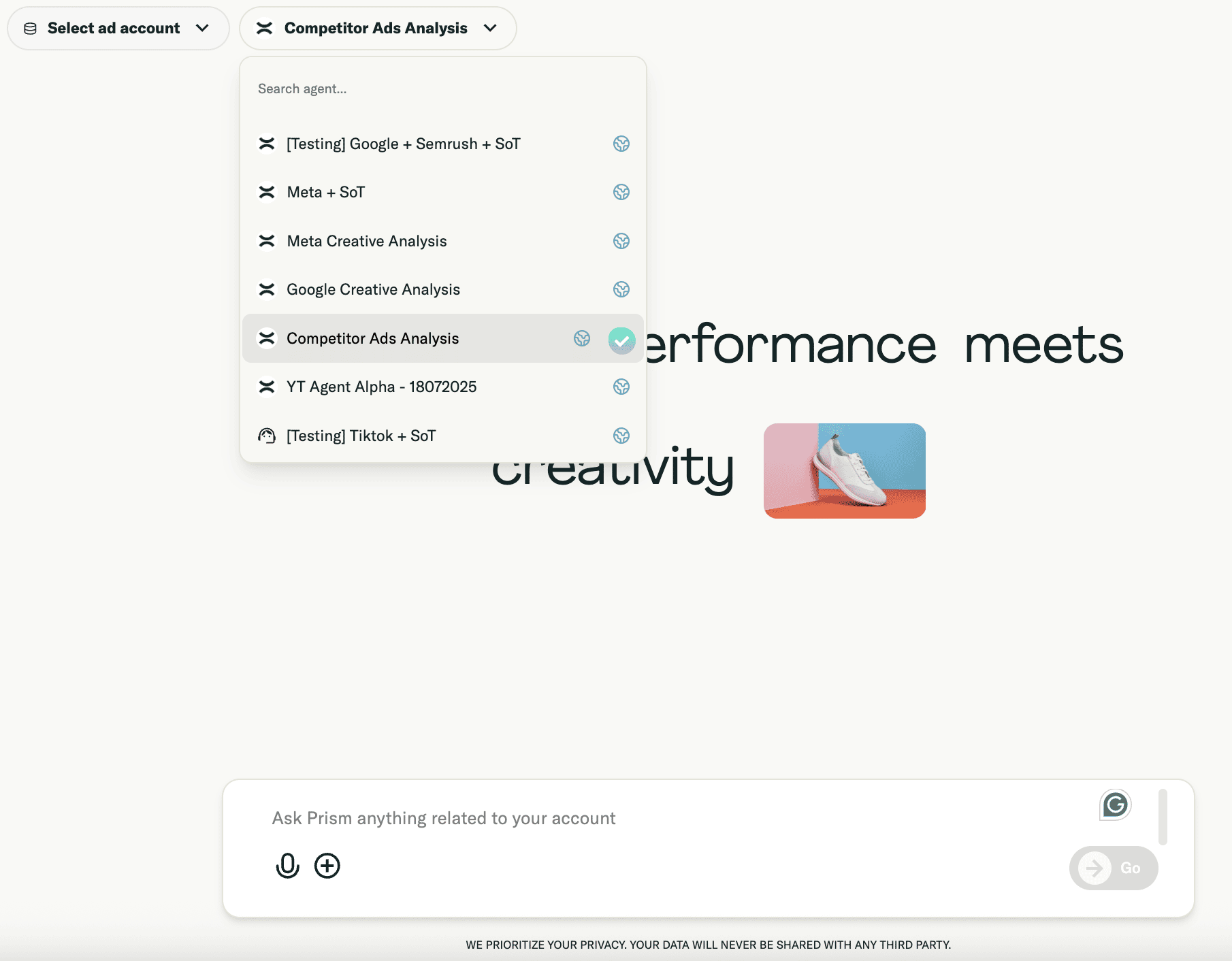

Step 7: Keep the loop alive inside Prism

I ran this entire process by hand long enough to know where it becomes a tax. Coding ads into a spreadsheet is not hard, but it is time-consuming. Cross-checking survival and density against my CTR, CPC, CPM and conversion curves takes even longer. By the time the picture is assembled, the market has often moved. This is where I rely on Prism to remove the busywork and keep the loop closed.

Instead of toggling between ad libraries, dashboards, and Excel, I could see competitor activity mapped against my own performance. It wasn’t just what they were doing; it was why it mattered in the context of my campaigns.

Here's a prompt that I used:

Research [competitor company name]'s digital advertising presence to uncover their creative strategies, messaging patterns, platform preferences, audience targeting tactics, and campaign performance indicators with specific recommendations for competitive advantage.

Prism gave me a single toggle instead: market-level visibility when I needed context, campaign-level detail when I needed to act. The judgment stays with me. The orchestration and correlation live in the tool.

Closing Reflection

Competitor insights are not about copying. They’re about context. They’re the mirror that shows you whether you’re efficient or just lucky, whether your CPCs are fair or inflated, whether your creative tests are lagging or leading.

When you filter the noise and focus on the signals that matter, you stop chasing competitors and start using them to sharpen your own strategy. That’s when competitor research stops being a time sink and becomes a competitive edge.