Your ad creative looked great in the design review. Three days into the campaign, your CPA is double what you projected and your CTR is tanking.

The culprit is rarely bad design. It's one of six structural mistakes that drain budgets before you realize what's happening: vague success metrics, audience drift, weak hooks, wrong formats, too many or too few variants, and under-funded tests. This guide walks through each mistake, why it kills performance, and how to catch it before it costs you.

The real cost of common ad creative mistakes

Ad creative mistakes fall into a few buckets: low-quality visuals, unclear messaging, poor audience alignment, and skipping tests. A pixelated image tanks your credibility. Generic stock photos feel fake, and overly branded ads get scrolled past.

The financial hit is immediate. Wasted impressions mean wasted budget. Poor creative drives up your cost per acquisition and drags down return on ad spend. You lose time diagnosing what went wrong instead of building the next campaign.

Most marketers know creative matters. Yet the same mistakes show up across campaigns, platforms, and brands. The gap between knowing what good creative looks like and actually producing it consistently is wider than it seems.

Mistake 1: vague success metrics kill performance

If you don't define success before launching, your creative can't deliver it. Goals like "increase awareness" or "drive engagement" leave your team guessing. Your ad platform's algorithm guesses too, optimizing for clicks when you actually needed conversions.

A campaign optimized for traffic sends visitors who bounce. A campaign optimized for engagement racks up likes but zero purchases. The creative might look great, but it's solving the wrong problem.

Clear metrics anchor everything. They tell you what to test, what to iterate, and when to kill an underperformer.

Key indicators to track before launching

Start with outcomes, not outputs. ROAS tells you if your spend is profitable. CPA shows what each customer costs. Conversion rate reveals how well your creative moves people through the funnel.

Then layer in efficiency signals:

- CTR (click-through rate): Measures how compelling your hook is.

- CPM (cost per thousand impressions): Indicates audience saturation and competition.

- CPC (cost per click): Shows whether your creative resonates enough to earn attention.

Track delivery health too. Impressions, frequency, and spend pacing reveal whether your campaign is reaching people or stalling out. High frequency with low conversions means you're showing the same ad to the same people too many times.
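The indicators above are all simple ratios of totals you can export from any ad platform. Here is a minimal Python sketch, assuming the standard definitions (ROAS as revenue divided by spend, CPA as spend divided by conversions, frequency as impressions divided by reach). The function name `campaign_kpis` and the sample figures are hypothetical and do not come from any platform's API.

```python
def campaign_kpis(spend, revenue, impressions, clicks, conversions, reach):
    """Compute core ad KPIs from raw totals, using the standard definitions."""
    def ratio(num, den):
        # Return None instead of dividing by zero when a denominator is empty.
        return num / den if den else None

    return {
        "roas": ratio(revenue, spend),               # revenue per dollar spent
        "cpa": ratio(spend, conversions),            # cost per acquisition
        "conversion_rate": ratio(conversions, clicks),
        "ctr": ratio(clicks, impressions),           # click-through rate
        "cpm": ratio(spend * 1000, impressions),     # cost per thousand impressions
        "cpc": ratio(spend, clicks),                 # cost per click
        "frequency": ratio(impressions, reach),      # average views per person
    }

# Hypothetical totals for illustration only.
kpis = campaign_kpis(spend=1000.0, revenue=4500.0, impressions=200_000,
                     clicks=4_000, conversions=100, reach=80_000)
for name, value in kpis.items():
    print(name, value)
```

With these sample totals, ROAS works out to 4.5 and CPA to $10, which makes it easy to compare a live campaign against the goal you wrote down before launch.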

Simple prompt template for setting goals

Before you brief your team or open your design tool, write this down:

This campaign will succeed if [specific metric] reaches [specific number] by [specific date]. The creative's job is to [specific action the viewer takes]. We'll measure this by tracking [two to three KPIs].

Example: This campaign will succeed if ROAS reaches 4.5 by October 15. The creative's job is to get clicks from women aged 25–40 interested in sustainable skincare. We'll measure CTR, CPA, and purchases.

This clarity cascades. Your designer knows what emotion to evoke. Your copywriter knows what objection to address. Your media buyer knows which campaign objective to select.

Mistake 2: target audience drift makes good creative irrelevant

Audiences evolve faster than most marketers realize. Interests shift. Platforms change how they categorize users. Competitors enter and fragment attention. What worked six months ago stops working, and you're left wondering why your winning creative suddenly feels flat.

Audience drift is invisible until performance drops. By then, you've already spent budget on impressions that didn't matter. The creative wasn't bad—it just wasn't talking to the right people anymore.

Audience overlap reports to watch

Most ad platforms offer audience insights that reveal demographic and behavioral shifts. Check monthly at minimum. Look for changes in age distribution, gender split, and top interests. If your core audience used to skew 25–34 and now skews 35–44, your creative needs to reflect that shift.

Geographic performance matters too. A region that used to convert well might now show high CPMs and low engagement. That's a signal to either refresh creative for that market or reallocate budget elsewhere.

Watch for audience saturation. When the same users see your ad repeatedly without converting, it's time to expand targeting or rotate creative. Frequency above three often signals diminishing returns.
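A saturation check like this is easy to script against an exported report. The sketch below assumes each segment row carries total impressions and unique reach. The segment names, the numbers, and the function `saturated_segments` are hypothetical, and the cap of three is only the rule of thumb mentioned above.

```python
def saturated_segments(segments, max_frequency=3.0):
    """Return names of segments whose average frequency exceeds the cap.

    Frequency is impressions divided by unique reach.
    """
    flagged = []
    for seg in segments:
        if seg["reach"] == 0:
            continue  # no delivery yet, nothing to evaluate
        frequency = seg["impressions"] / seg["reach"]
        if frequency > max_frequency:
            flagged.append(seg["name"])
    return flagged

# Hypothetical segment totals for illustration only.
segments = [
    {"name": "lookalike_1pct", "impressions": 90_000, "reach": 20_000},     # frequency 4.5
    {"name": "interest_skincare", "impressions": 50_000, "reach": 25_000},  # frequency 2.0
]
print(saturated_segments(segments))  # ['lookalike_1pct']
```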

Refreshing personas with real-time signals

Your personas aren't static documents. They're hypotheses you validate or disprove with every campaign. Use platform data to update them. If your ads perform better on weekends, your audience's decision-making window might be different than you assumed.

Behavioral signals matter more than demographic assumptions. Someone who engages with competitor content, watches product demos, and visits comparison sites is showing intent. Creative that speaks to that intent converts better than creative aimed at a generic persona.

Update your personas quarterly. Note what's changed. Adjust your creative briefs accordingly.

Mistake 3: weak hooks and repetitive visuals cause ad fatigue

You have three seconds to stop someone from scrolling. If your hook doesn't land, nothing else matters. Weak hooks—generic questions, obvious statements, slow-building narratives—get ignored. Repetitive visuals make your ad invisible even if someone sees it five times.

Ad fatigue is the silent budget killer. Your cost per result climbs. Your reach plateaus. Your frequency spikes. The algorithm shows your ad less often because users stop engaging.

The fix isn't just creating more ads. It's creating ads that break patterns and feel fresh even when someone sees them multiple times.

Pattern break tactics for the first three seconds

Start with movement or contrast. A static image of a product on a white background blends in. A hand reaching for that product, or the product in an unexpected context, stops the scroll.

Video works well here, but only if the first frame is compelling. Don't fade in. Don't start with a logo. Start with the moment that makes someone curious.

Use text overlays sparingly but strategically. A bold, clear statement in the first second can work if it challenges an assumption or promises a specific outcome. "You're overpaying for this" beats "Introducing our new product."

Rotating concepts every seven days

Build a rotation schedule. Every seven days, introduce a new concept or variation without starting from scratch. You can change the hook, swap the background, adjust the opening line, or test a different format.

Keep your brand consistent but vary the execution. Same message, different delivery.

Mistake 4: wrong asset formats and ratios waste impressions

Every platform has format requirements. Miss them and your ad gets cropped, rejected, or shown in lower-quality placements. A square image optimized for feed gets cut off in Stories. A horizontal video looks awkward in Reels.

You lose impressions, and the impressions you do get perform worse because the creative doesn't fit the space. Platforms publish their specs. The problem is that most teams treat format as an afterthought, resizing one master asset at the last minute.

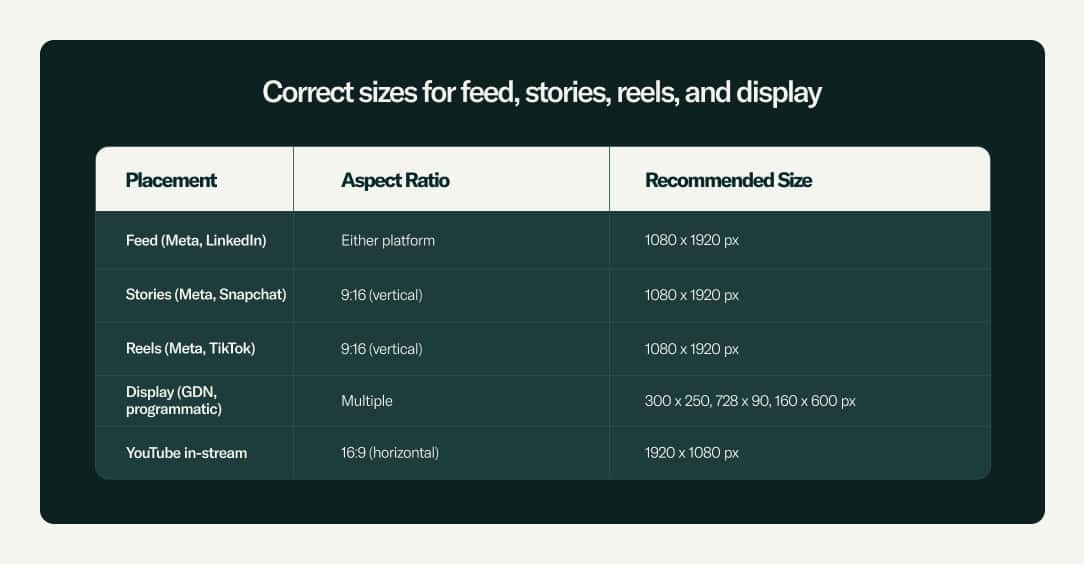

Correct sizes for feed, stories, reels, and display

Here's what you need for the most common placements:

Design for vertical first if you're running on social platforms. Vertical video captures more screen space on mobile and typically drives higher engagement. Then adapt to square and horizontal as needed.

Keep key elements—text, faces, logos—in the safe zone. Platforms crop differently depending on device and placement. Leave 10% margin on all sides to avoid critical elements getting cut off.
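The 10% margin rule translates directly into pixel coordinates. Here is a small sketch, assuming a uniform margin on every side and element boxes given as (left, top, right, bottom) in pixels. The helper names and the 1080x1920 canvas are illustrative choices, not platform specifications.

```python
def safe_zone(width, height, margin=0.10):
    """Return (left, top, right, bottom) of the area inside a uniform margin."""
    dx = round(width * margin)
    dy = round(height * margin)
    return dx, dy, width - dx, height - dy

def inside_safe_zone(box, width, height, margin=0.10):
    """Check that an element's (left, top, right, bottom) box avoids the margins."""
    left, top, right, bottom = safe_zone(width, height, margin)
    return box[0] >= left and box[1] >= top and box[2] <= right and box[3] <= bottom

# A 1080x1920 vertical canvas, used here only as an example size.
print(safe_zone(1080, 1920))                                # (108, 192, 972, 1728)
print(inside_safe_zone((100, 300, 900, 500), 1080, 1920))   # False: left edge sits in the margin
print(inside_safe_zone((120, 300, 900, 500), 1080, 1920))   # True
```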

Universal backup creatives for edge cases

Sometimes your ad shows in a placement you didn't anticipate. Prepare by creating flexible backup assets. Use center-weighted compositions so the focal point stays visible even if the platform crops unexpectedly.

Avoid text-heavy designs that become unreadable at smaller sizes. Test your creatives across devices before launching. What looks great on desktop might be illegible on mobile.

Mistake 5: too many or too few ads per set confuse algorithms

Ad platforms use machine learning to figure out which creative performs best. Give the algorithm too many options—10 or 15 ads per ad set—and it spreads budget thin, never learning which ads truly work. Give it too few—one or two ads—and you miss opportunities to test variations.

The sweet spot exists. It's narrow, and it varies slightly by platform, but the principle holds: enough variety to learn, not so much that learning stalls.

Optimal ad count for Meta learning phase

Meta's algorithm needs about 50 conversion events per ad set to exit the learning phase. If you're running five ads in one ad set, that's roughly 10 conversions per ad before the system stabilizes, assuming delivery is split evenly. If you're running 10 ads, it's about five conversions per ad, which might take weeks depending on your budget.
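The arithmetic is worth running against your own budget before you decide how many ads to load. This sketch assumes delivery is split evenly across ads and that CPA stays steady, which real campaigns rarely manage, so treat the output as a rough estimate. The function names and the $50 budget and $10 CPA are hypothetical.

```python
import math

LEARNING_PHASE_EVENTS = 50  # approximate figure cited above, per ad set

def conversions_per_ad(num_ads, events_needed=LEARNING_PHASE_EVENTS):
    """Average conversions each ad has when the ad set reaches the event target."""
    return events_needed / num_ads

def days_to_exit_learning(daily_budget, expected_cpa, events_needed=LEARNING_PHASE_EVENTS):
    """Rough number of days to collect the needed events at a steady CPA."""
    conversions_per_day = daily_budget / expected_cpa
    return math.ceil(events_needed / conversions_per_day)

print(conversions_per_ad(5))    # 10.0
print(conversions_per_ad(10))   # 5.0
print(days_to_exit_learning(daily_budget=50, expected_cpa=10))  # 10
```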

Start with three to five ads per ad set. The algorithm gets room to test without fragmenting your budget. Each ad gets enough delivery to generate meaningful data.

Once you have a winner, don't kill everything else immediately. Let the top performer take 60–70% of spend while the others continue running at lower volume. This prevents over-reliance on a single creative and gives you backup options when fatigue sets in.

When to pause or add variants

Pause an ad when it's consistently underperforming for three to five days and its metrics are significantly worse than your set average—think 50% higher CPA or 50% lower CTR. Don't pause based on one bad day.
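Writing the pause rule down as code keeps the team from reacting to a single bad day. The sketch below is one possible reading of the rule: an ad is paused only if every one of the last three days was at least 50% worse than the set average on CPA or CTR. The function `should_pause`, its parameters, and the sample history are hypothetical.

```python
def should_pause(daily_stats, set_avg_cpa, set_avg_ctr, min_days=3, gap=0.5):
    """Return True if the ad missed the set average badly on each of the last min_days days.

    daily_stats: list of dicts with "cpa" and "ctr", oldest first.
    A day counts as bad when CPA is at least 50% above the set average
    or CTR is at least 50% below it (gap=0.5).
    """
    if len(daily_stats) < min_days:
        return False  # not enough history; never pause on one bad day
    recent = daily_stats[-min_days:]
    return all(
        day["cpa"] >= set_avg_cpa * (1 + gap) or day["ctr"] <= set_avg_ctr * (1 - gap)
        for day in recent
    )

# Hypothetical daily history for one ad, oldest first.
history = [
    {"cpa": 22.0, "ctr": 0.012},
    {"cpa": 31.0, "ctr": 0.011},
    {"cpa": 33.0, "ctr": 0.009},
    {"cpa": 35.0, "ctr": 0.010},
]
print(should_pause(history, set_avg_cpa=20.0, set_avg_ctr=0.015))      # True
print(should_pause(history[:2], set_avg_cpa=20.0, set_avg_ctr=0.015))  # False
```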

Add variants when your top ad starts showing fatigue. Watch for rising CPMs, declining CTR, or increasing frequency. Introduce a new angle or format before performance drops sharply.
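One simple way to watch those three signals is to compare the most recent few days with the few days before them. The sketch below does exactly that and nothing more: it has no minimum-change threshold, so small random swings will trigger it, and a production version would need one. The function `fatigue_signals`, the three-day window, and the sample data are assumptions for illustration.

```python
from statistics import mean

def fatigue_signals(daily_stats, window=3):
    """Compare the latest window of days with the window before it.

    daily_stats: list of dicts with "cpm", "ctr", and "frequency", oldest first.
    Returns the signals that moved in the fatigue direction.
    """
    if len(daily_stats) < 2 * window:
        return []  # not enough history to compare two windows
    earlier = daily_stats[-2 * window:-window]
    latest = daily_stats[-window:]

    def avg(rows, key):
        return mean(row[key] for row in rows)

    signals = []
    if avg(latest, "cpm") > avg(earlier, "cpm"):
        signals.append("rising_cpm")
    if avg(latest, "ctr") < avg(earlier, "ctr"):
        signals.append("declining_ctr")
    if avg(latest, "frequency") > avg(earlier, "frequency"):
        signals.append("increasing_frequency")
    return signals

# Hypothetical six days of data for one ad, oldest first.
days = [
    {"cpm": 8.0, "ctr": 0.020, "frequency": 1.5},
    {"cpm": 8.0, "ctr": 0.020, "frequency": 1.6},
    {"cpm": 8.0, "ctr": 0.020, "frequency": 1.7},
    {"cpm": 9.0, "ctr": 0.018, "frequency": 2.0},
    {"cpm": 10.0, "ctr": 0.015, "frequency": 2.4},
    {"cpm": 11.0, "ctr": 0.012, "frequency": 2.8},
]
print(fatigue_signals(days))  # ['rising_cpm', 'declining_ctr', 'increasing_frequency']
```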

If all your ads are fatiguing at once, the problem isn't the number of ads. It's audience saturation. Expand targeting or rotate concepts entirely.

Mistake 6: under-funded tests end before they learn

Testing creative costs money. Spend too little, and your test ends before it reaches statistical significance. You make decisions based on noise instead of signal, and you kill a potentially winning ad because it never got enough impressions to prove itself.

A test that runs for two days with $50 in spend tells you almost nothing. Yet teams draw conclusions from it anyway, then wonder why their "data-driven" decisions don't improve performance.

Minimum spend thresholds by platform

Each platform has a different cost structure, but the principle is the same: you need enough spend to generate enough events for the algorithm to optimize.

- Meta (Facebook, Instagram): $20–$50 per day per ad set for conversion campaigns. Plan for at least five to seven days to exit learning phase.

- Google Display: $10–$30 per day per campaign. Display is cheaper per impression but often requires more volume to see patterns.

- TikTok: $50–$100 per day per ad group. TikTok's algorithm learns fast but needs higher initial spend to find the right audience.

- LinkedIn: $100–$150 per day per campaign. LinkedIn's CPMs are higher, so tests cost more.

If your conversion rate is low or your product has a long consideration cycle, you'll need more budget to gather enough data.
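To turn the daily ranges above into a total test budget, multiply by the number of days you plan to run. The sketch below copies the daily figures from the list; the dictionary keys and the function name `planned_test_budget` are hypothetical, and the result covers a single ad set, ad group, or campaign, matching the unit each platform's range is quoted in.

```python
# Daily spend ranges in USD, copied from the list above.
DAILY_SPEND_USD = {
    "meta": (20, 50),
    "google_display": (10, 30),
    "tiktok": (50, 100),
    "linkedin": (100, 150),
}

def planned_test_budget(platform, days):
    """Return the (low, high) total spend for a test of the given length."""
    low, high = DAILY_SPEND_USD[platform]
    return low * days, high * days

# Meta's suggested five to seven days at $20-$50 per day:
print(planned_test_budget("meta", 5))  # (100, 250)
print(planned_test_budget("meta", 7))  # (140, 350)
```

By this estimate a single Meta ad set needs somewhere between $100 and $350 to finish a test, well above the $50 two-day test described earlier.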

When to cut versus iterate

Cut an ad when it's clearly losing after sufficient spend. A typical threshold is spend of 2–3x your target CPA with no conversions. At that point, more spend won't change the outcome.

Iterate when an ad shows promise but falls short. If an ad has a strong CTR but a weak conversion rate, that's a landing page problem, not a creative problem. If impressions are high but CTR is low, the hook isn't working, so try a different opening.

Don't iterate mid-test. Let the test run its course, analyze the results, then make changes.
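The cut-versus-iterate rules can be written as a short decision function to run once a test has finished. This is a sketch under stated assumptions: the CTR and conversion-rate benchmarks are numbers you supply from your own account history, the cut threshold defaults to the low end of the 2–3x range, and the function name and return labels are hypothetical.

```python
def triage_ad(spend, conversions, target_cpa, ctr, conversion_rate,
              ctr_benchmark, cvr_benchmark, cut_multiple=2.0):
    """Suggest a next step for one ad after its test has finished."""
    # Spent a multiple of target CPA with nothing to show for it: stop.
    if conversions == 0 and spend >= cut_multiple * target_cpa:
        return "cut"
    # People see the ad but don't click: the hook is the weak point.
    if ctr < ctr_benchmark:
        return "iterate: new hook"
    # People click but don't convert: look at the landing page.
    if conversion_rate < cvr_benchmark:
        return "iterate: landing page"
    return "keep"

# Hypothetical benchmarks and results for illustration only.
bench = {"ctr_benchmark": 0.01, "cvr_benchmark": 0.02}
print(triage_ad(spend=90, conversions=0, target_cpa=30, ctr=0.02, conversion_rate=0.0, **bench))
print(triage_ad(spend=200, conversions=2, target_cpa=30, ctr=0.03, conversion_rate=0.005, **bench))
print(triage_ad(spend=200, conversions=5, target_cpa=30, ctr=0.004, conversion_rate=0.03, **bench))
```

The three calls print "cut", "iterate: landing page", and "iterate: new hook" in that order.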

How AI spots these mistakes before your budget suffers

The six mistakes we've covered drain budgets and waste time. Their common thread is that they're all detectable early if you're watching the right signals. The problem is that humans aren't great at monitoring dozens of campaigns, hundreds of ads, and thousands of data points simultaneously.

AI is. It doesn't get tired, doesn't miss patterns, and doesn't wait until performance tanks to flag an issue. We at Pixis built our platform around this insight: the best way to avoid creative mistakes is to catch them before they cost you.

Automated alerts for fatigue and drift

We monitor your campaigns in real time, tracking frequency, engagement drop-offs, and audience saturation. When an ad starts showing fatigue—rising CPMs, declining CTR, increasing frequency—you get an alert before performance craters.

You can rotate creative proactively instead of reactively. The same goes for audience drift. If your top-performing audience segment starts underperforming, Pixis flags it as it's happening, not three weeks later when you're reviewing reports.

Generative suggestions for new angles

Catching problems early is half the solution. The other half is knowing what to do about it. Pixis doesn't just tell you an ad is fatiguing—it suggests new creative angles based on what's working across your account and similar campaigns.

Same brand voice, different execution. This isn't about replacing your creative team. It's about giving them a starting point so they're not brainstorming from scratch every time you need a refresh.

Turn insight into action faster with automated creative refreshes

Knowing you have a problem is useful. Fixing it quickly is what actually saves your budget. The gap between insight and action is where most marketing teams lose time and money.

You see the data, you know what to change, but the creative refresh process—brief, design, review, resize, upload—takes days. AI closes that gap by automating the repetitive parts so your team can focus on strategy and creativity.

Four-hour creative refresh workflow

Here's what a rapid creative refresh looks like with AI assistance:

- Hour one: Identify the underperforming ad and the specific issue. Is it the hook? The visual? The offer?

- Hour two: Generate new variations. Use AI to draft new hooks, suggest visual concepts, or adapt top-performing elements from other campaigns.

- Hour three: Design and resize. AI handles format adaptation—taking one concept and outputting it in every size and ratio you need.

- Hour four: Upload, test, and monitor. Launch the new creative and set up automated monitoring so you know within 24 hours if it's working.

Complex campaigns or brand-new concepts take longer. But for routine refreshes—swapping a hook, testing a new background, rotating a CTA—four hours is realistic when you're not starting from zero every time.

Try Prism and see how AI catches creative mistakes before they cost you

FAQs about ad creative mistakes

How much budget should I allocate for creative testing each month?

Allocate 10–20% of your total ad budget for testing. If you're spending $10,000 per month, set aside $1,000–$2,000 for creative tests. Scale that percentage up if you're in a fast-moving market or launching new products.

How quickly should I refresh static images compared to video ads?

Static images fatigue faster than video—usually within seven to 10 days at normal frequency levels. Video can last two to three weeks before showing fatigue, especially if the first three seconds vary across versions. Monitor frequency and CTR as leading indicators.

Can automated creative tools maintain my brand voice and guidelines?

Yes, if you set them up correctly. Our AI tools learn your brand guidelines—tone, color palette, logo usage, and messaging frameworks—and apply them consistently. The output isn't a finished product you publish blindly. It's a strong first draft that your team reviews and refines.