If you’ve used ChatGPT, Gemini, Claude, or any other LLM, you’ve probably noticed that their usefulness is limited by the amount of context they have.

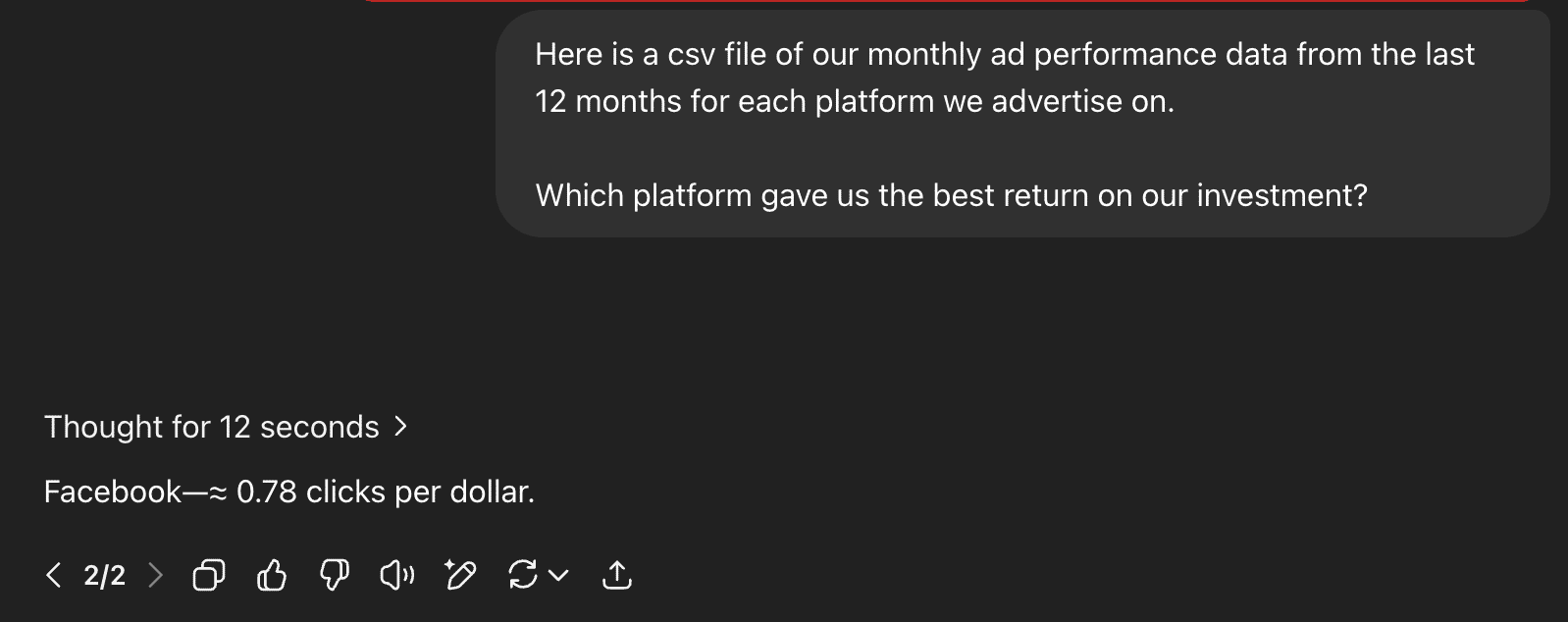

Here’s a real conversation I had with ChatGPT (the 4o model):

I admit I asked ChatGPT to keep its responses short so they’d fit in a screenshot, but still: “clicks per dollar”?

Really?

The glaring lack of understanding about basic marketing metrics makes me doubt the number itself, even though it’s a simple calculation.

This is the context gap in action. It shouldn’t be this dumb, but it’s not its fault.

It wasn’t designed specifically for performance marketing, and it doesn’t have enough access to your performance data to be useful even if it were.

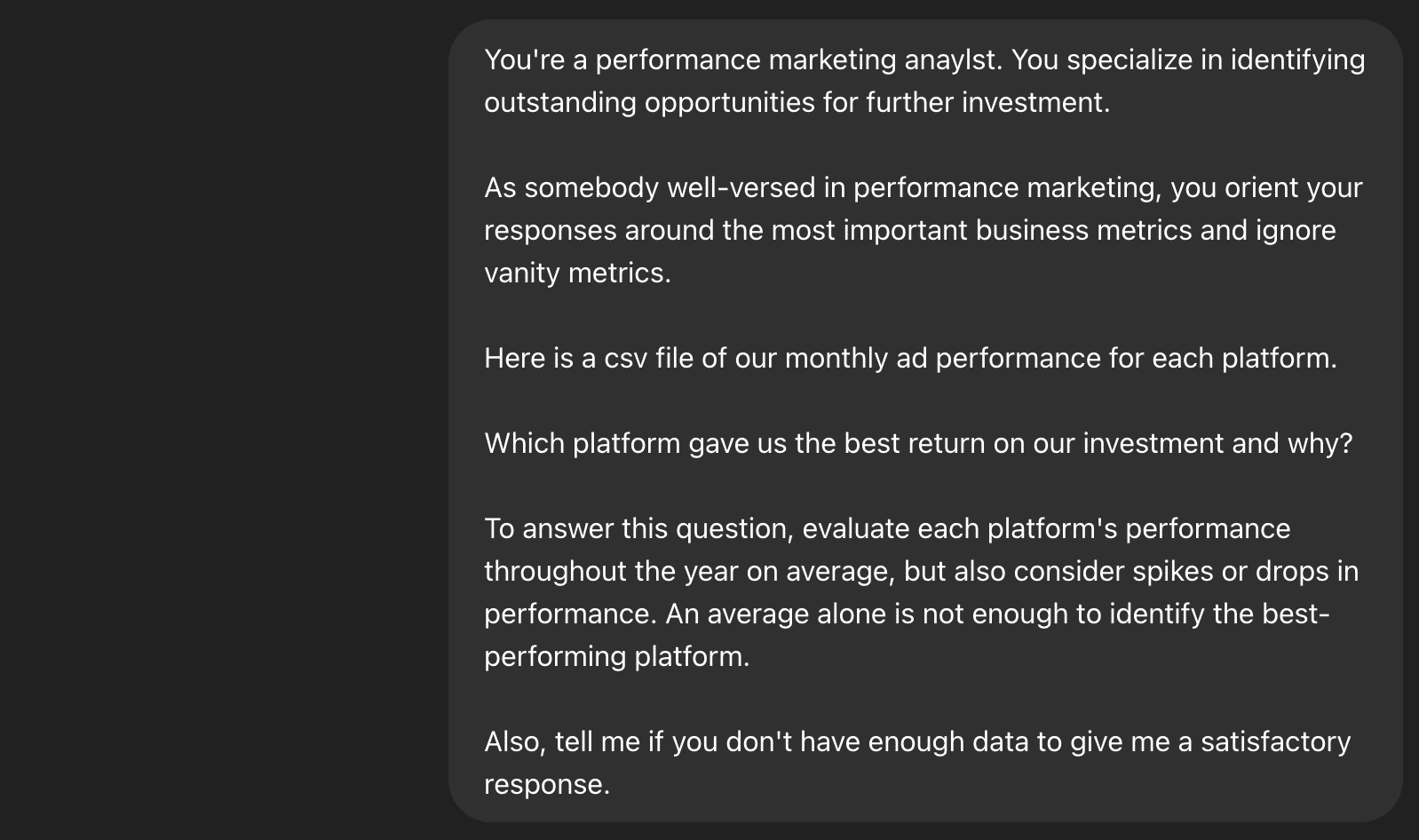

Spoiler alert! This is what it should have said:

ChatGPT and other LLMs have access to an incredible amount of information, but they still don’t know enough to be truly useful to marketers. At least, not without some help.

Closing the Gap Takes (Too Much) Effort

Of course, I could ask ChatGPT to elaborate, explain its reasoning, and give it much more context in the prompt itself.

After all, lots of people claim you (and your bad prompts) are the reason ChatGPT isn’t great.

That prompt didn’t take long to write: about 90 seconds.

I loosely applied the RISEN framework (Role, Instructions, Steps, End goal, Narrowing) to build a prompt that provided ChatGPT with a little more context.

I just had to spend months learning the basics of prompt engineering, experimenting with what works and what doesn’t, and predicting where it might go wrong or make assumptions so I could pre-empt them.

Easy, right?

Let’s see how ChatGPT fared this time.

Some of that information is genuinely a little helpful. I like that it calculated Facebook’s standard deviation, even if it was calculating it for a made-up metric.

But it’s still essentially taking an average of four columns and telling me which numbers are bigger than others. Not breathtaking.

And there are other obvious problems:

- It didn’t identify that clicks per dollar isn’t a business outcome.

- It didn’t ask which metric defines “best performance” for me, or tell me where it needed more data to give me the answer I actually want.

- It also didn’t notice that I spent only $37k on Audience Network but over $5m on Facebook, so the two results aren’t directly comparable.
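To make the first and last points concrete, here’s a minimal sketch of what a spend-aware comparison could look like. Only the two spend figures come from my account; the clicks and revenue numbers are made up for illustration, and the `PlatformStats` class, `summarize` function, and 5% spend-share cutoff are hypothetical choices, not how any particular tool does it. It shows that “clicks per dollar” is just the reciprocal of cost per click (CPC), says nothing about revenue, and that a platform with a tiny share of spend should be flagged before anyone crowns it the winner.

```python
from dataclasses import dataclass


# Hypothetical per-platform totals. Only spend mirrors the real account;
# clicks and revenue are invented for illustration.
@dataclass
class PlatformStats:
    name: str
    spend: float    # dollars
    clicks: int
    revenue: float  # attributed revenue, dollars


def summarize(platforms, min_spend_share=0.05):
    """Return CPC, clicks per dollar, ROAS, and a low-spend flag per platform.

    min_spend_share is an assumed cutoff: platforms below it are flagged
    because their results rest on too little spend to compare fairly.
    """
    total_spend = sum(p.spend for p in platforms)
    rows = []
    for p in platforms:
        cpc = p.spend / p.clicks                # cost per click
        clicks_per_dollar = p.clicks / p.spend  # just 1 / CPC, not an outcome
        roas = p.revenue / p.spend              # return on ad spend
        share = p.spend / total_spend           # fraction of total budget
        rows.append({
            "name": p.name,
            "cpc": round(cpc, 2),
            "clicks_per_dollar": round(clicks_per_dollar, 3),
            "roas": round(roas, 2),
            "spend_share": round(share, 4),
            "low_spend": share < min_spend_share,
        })
    return rows


if __name__ == "__main__":
    platforms = [
        PlatformStats("Facebook", 5_000_000, 2_500_000, 15_000_000),
        PlatformStats("Audience Network", 37_000, 74_000, 40_000),
    ]
    for row in summarize(platforms):
        print(row)
```

With these made-up numbers, Audience Network “wins” on clicks per dollar (2.0 vs. 0.5) but loses on ROAS (1.08 vs. 3.0), and its under-1% share of spend gets flagged. That’s the kind of nuance a useful answer would surface.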

I wouldn’t build a strategy on this response, and at this point I don’t think it’s even helping me brainstorm a better strategy myself.

Looks like I didn’t reach the effort threshold in my prompt.

The Effort Threshold

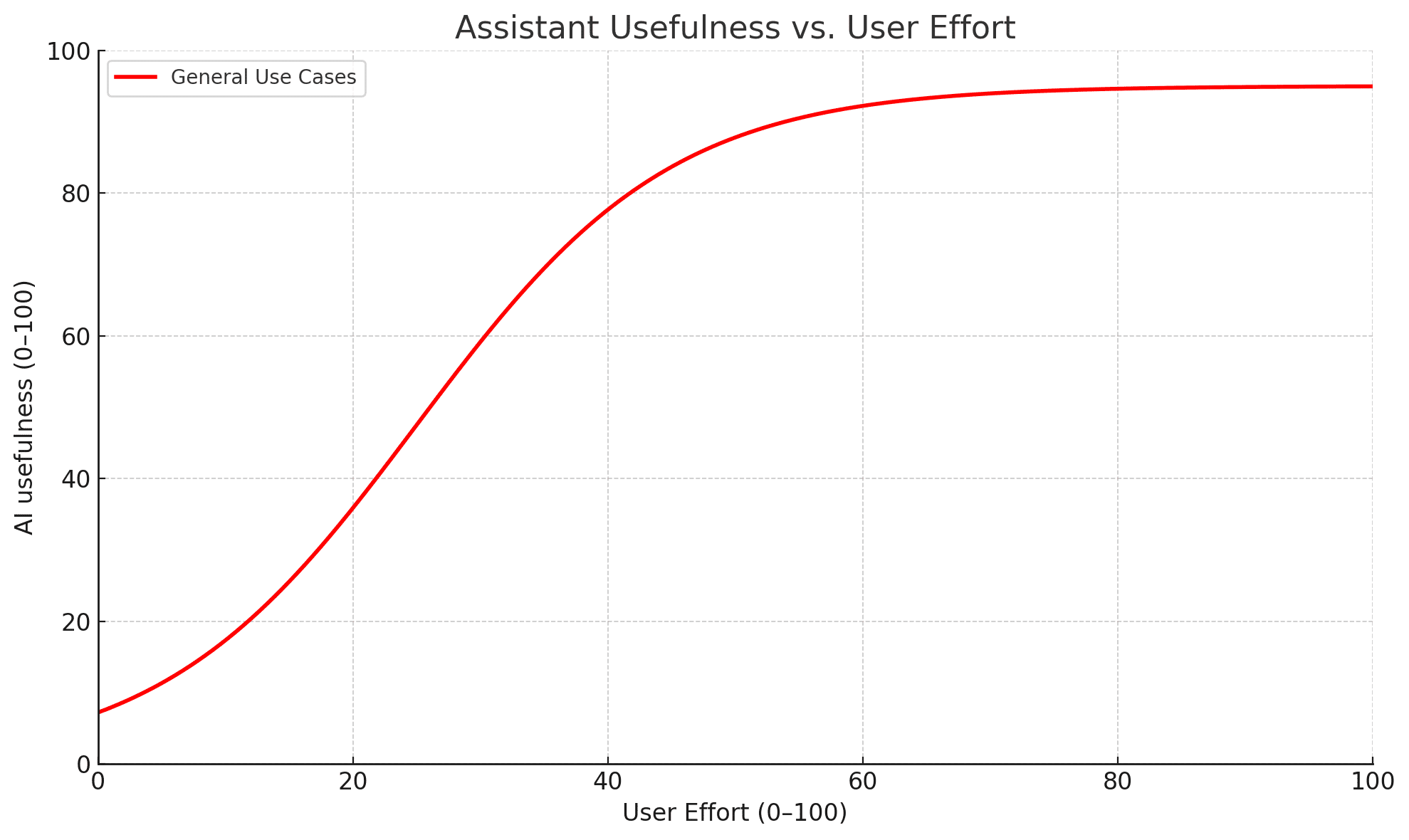

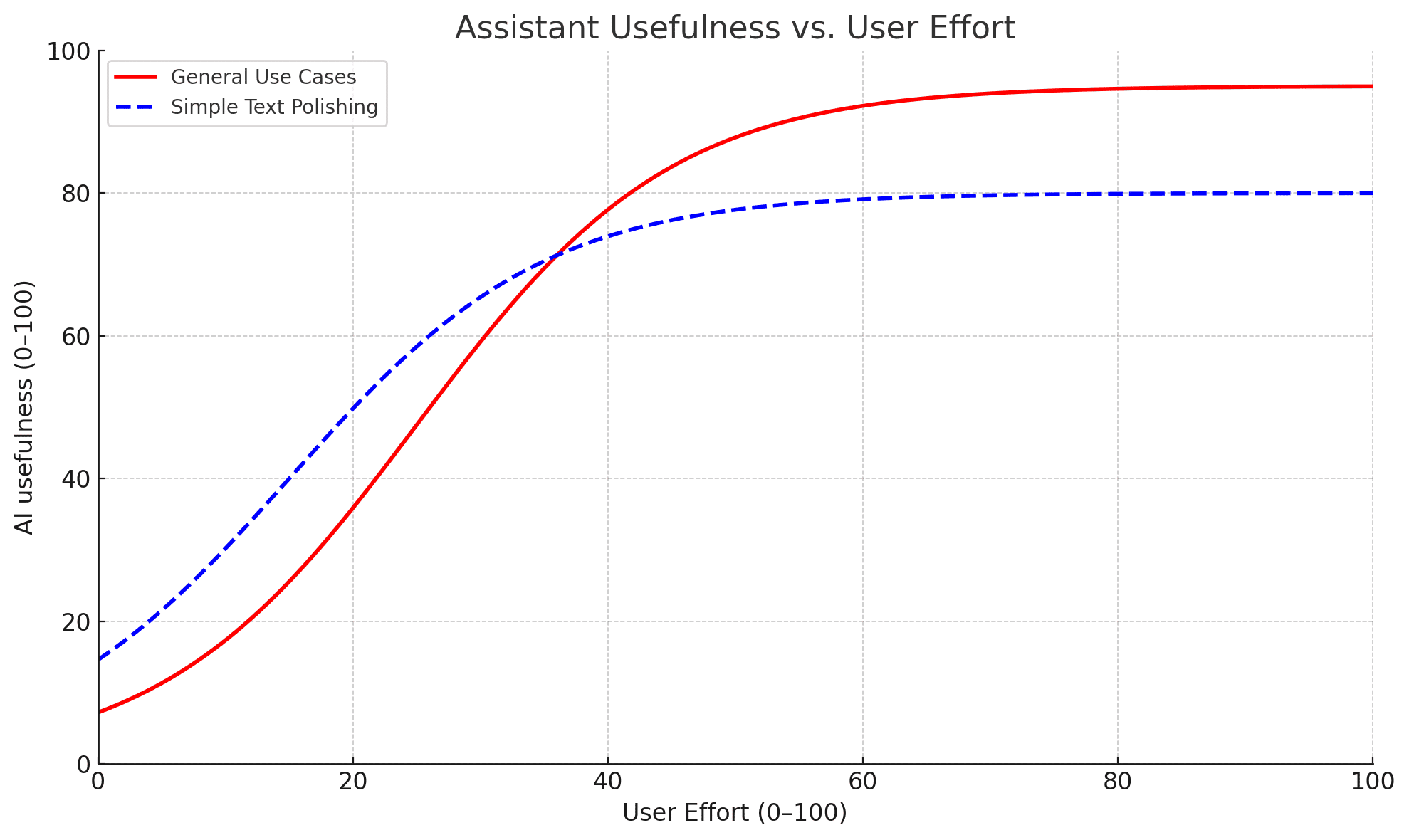

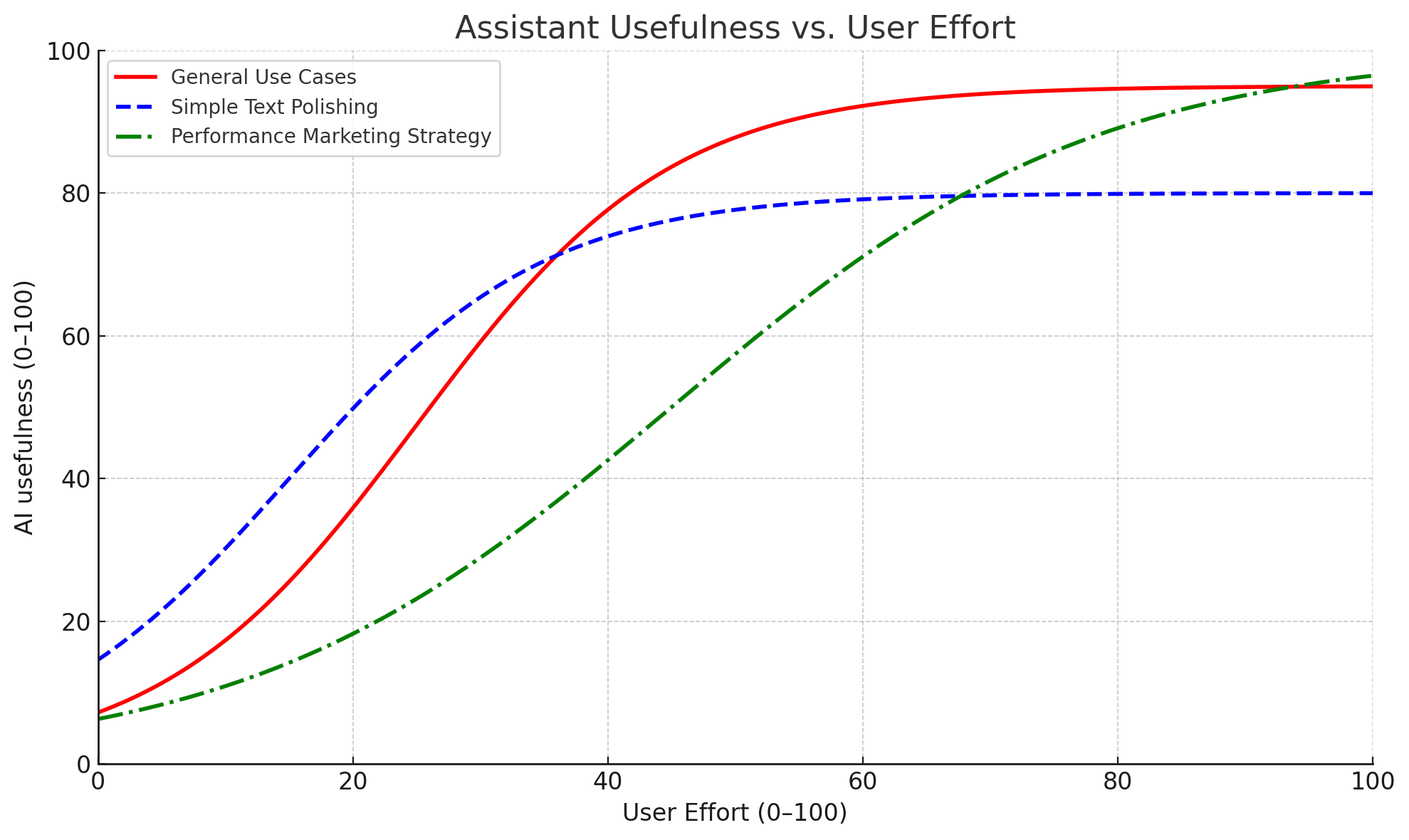

When users put more effort into their prompts, providing more context, structure, direction, and raw data, LLMs can provide more value in return.

Simple requests require less effort for the user to get the value they want. But they also provide less value in return.

Complicated requests require more effort, but because you’re asking the LLM to do more work for you, the response is also more valuable. Still, the value of the response doesn’t increase linearly with the effort you put in, and the shape of the curve depends on the type of request.

For most use cases, a user can put in about 40% of the effort and get back about 80% of the usefulness.

Usefulness increases more quickly with very narrow, well-defined use cases like editing text for typos and grammar. But the potential total usefulness is also lower, and adding more effort to the prompt doesn’t change that.

More advanced use cases, like weighing in on performance marketing strategy, demand much more effort before a general-purpose LLM provides valuable output.

The Two Reasons That’s About to Change

ChatGPT, Claude, Gemini, and other general-purpose LLMs require more effort for two reasons:

- They’re built for general-purpose use, which is why they’re trained on an enormous share of the publicly available internet.

- They don’t have real-time access to the data they need to perform marketing-specific tasks and analysis.

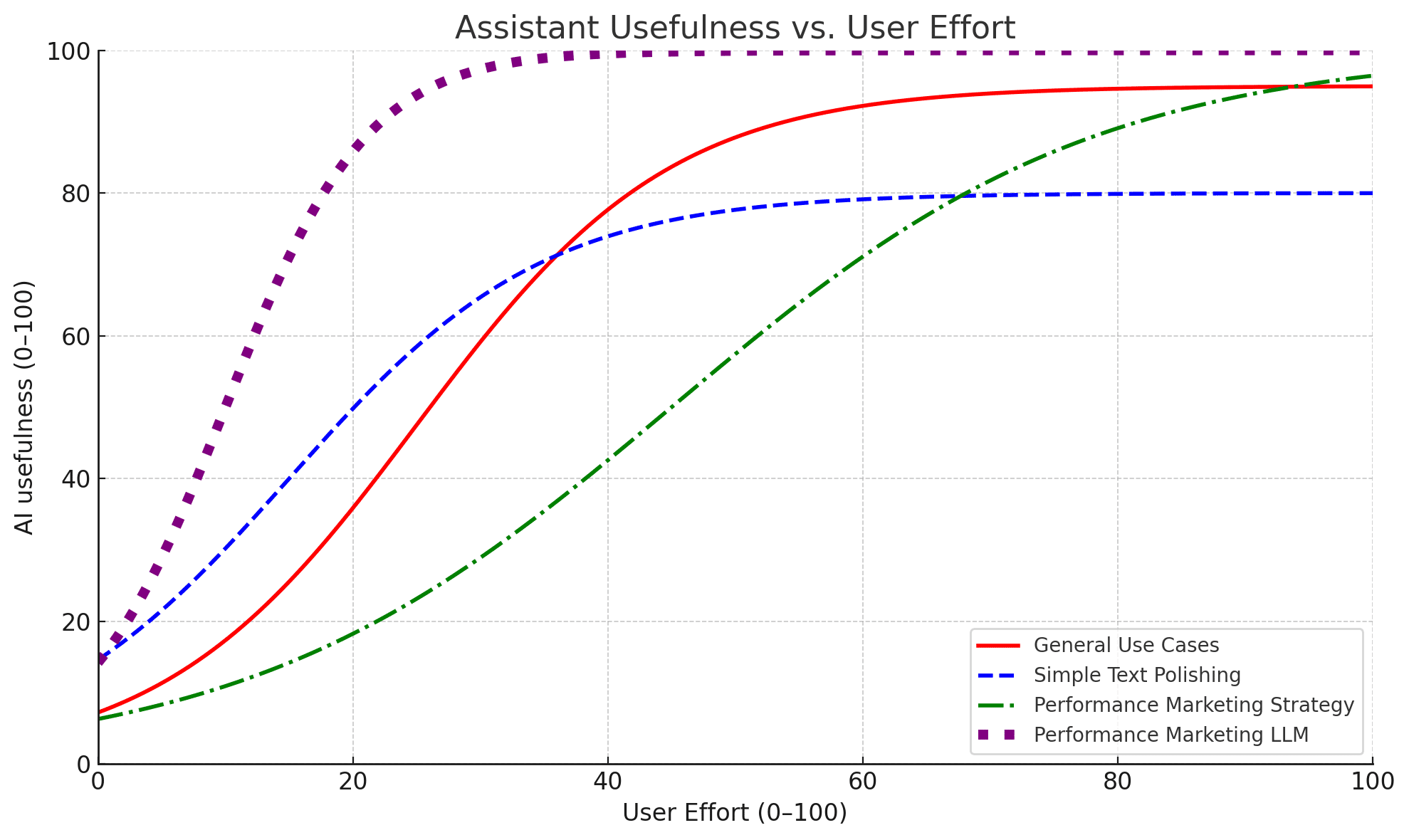

When you have a specific use case, you need a model that’s purpose-built for it, with unfettered, real-time access to whatever data it needs to give you a quality response.

When you’ve got a purpose-built LLM with access to the right data, you get something like this (the purple dashed line):

Less effort, more value.

What Actually Changed That Made This Possible Now?

I mean, we built it.

We’re not special in that regard. There are a lot of AI agents that at least claim to be for marketers now.

But ours has been in the works for a long time. It’s trained on data from over $2.5b in real ad spend and plugs directly into your Meta Ads, Google Ads, and Shopify accounts. We’re adding new platform integrations and agentic capabilities very, very quickly.

So it just works. It can run creative fatigue analysis and cross-channel performance analysis, recommend ad variations and new influencers to work with, analyze competitors’ ads for visual themes, messaging, and formats, and handle… almost anything else you’d want to ask about.

Example: How to Uncover Creative Fatigue Quickly

No mega-prompt gymnastics, no CSV acrobatics, no hallucinated “clicks per dollar.” Just a simple question, and insights faster than I could have found them myself.

Caveat: this is not a replacement for your brain.

A human’s critical thinking, curiosity, and creativity are all still necessary. Maybe more than ever.

But speed to insight really matters. Think about how many mundane questions you spend hours answering every week just because the data is hard to reach. Or how many questions you don’t even bother asking because it would take a while just to find out whether you can answer them.

So when someone claims their chatbot “kind of sucks” at marketing, I urge you to point them here. Not because it can do their marketing for them, but because it’ll help them do their marketing work better and faster.