Backpack Language Models: Tackling Gender Bias In Generative AI

In the age of gender inclusivity, even machines need to be wary of getting canceled by the public. Artificial intelligence (AI) has made great strides in language generation, but language generation models have also brought to light certain biases embedded within them. A critical one is gender bias, where AI-generated content can inadvertently perpetuate stereotypes or reinforce unequal representation.

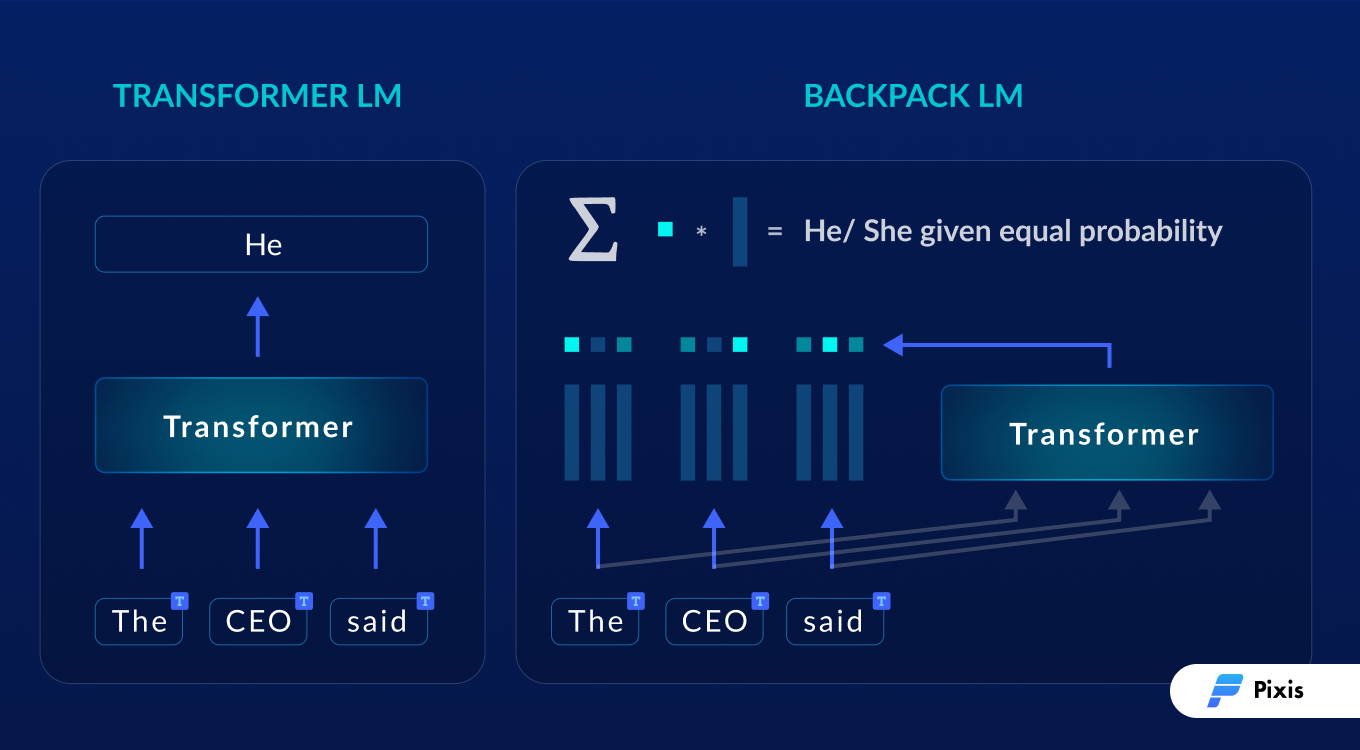

Backpack Language Models offer a potential way to address the stereotyping of gender roles in generated content, and a pathway to mitigate gender bias, by incorporating sense vectors. A Backpack model gives every word in its vocabulary several learned sense vectors, each capturing a different aspect of the word's meaning and usage. Much like a bag-of-words model, it builds its representation by adding up word-level pieces: the representation at each position in a sequence is a weighted sum of the sense vectors of the words in the sequence. The weights are determined by a contextualization function that considers the entire sequence, so the same word can emphasize different senses in different contexts.
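The weighted sum described above can be sketched in a few lines of Python. This is a minimal toy, not the published implementation: the vocabulary, the two senses per word, the vector values, and the rule inside `contextualization_weights` are all hypothetical stand-ins (a real Backpack learns its sense vectors and computes the weights with a Transformer). What it does show is the shape of the computation: non-negative weights, normalized over positions for each sense, multiply each word's sense vectors, and the results are summed into one vector per position.

```python
import math

# Hypothetical toy vocabulary: every word has 2 sense vectors of size 3.
# In a real Backpack model these are learned parameters, not hand-written.
SENSES = {
    "the":  [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0]],
    "ceo":  [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]],
    "said": [[0.0, 1.0, 0.0], [0.0, 0.5, 0.5]],
}
NUM_SENSES = 2
DIM = 3


def contextualization_weights(tokens, i):
    """Stand-in for the contextualization function.

    Returns alpha[l][j]: a non-negative weight for sense l of token j, for
    every j <= i, normalized with a softmax over positions for each sense.
    A real Backpack computes these scores from `tokens` with a Transformer;
    this hypothetical rule ignores the token identities and simply gives
    more weight to more recent positions.
    """
    alpha = []
    for l in range(NUM_SENSES):
        scores = [(l + 1) * 0.5 * j for j in range(i + 1)]
        total = sum(math.exp(s) for s in scores)
        alpha.append([math.exp(s) / total for s in scores])
    return alpha


def backpack_representation(tokens, i):
    """o_i = sum over positions j <= i and senses l of alpha[l][j] * C(x_j)[l]."""
    alpha = contextualization_weights(tokens, i)
    out = [0.0] * DIM
    for j in range(i + 1):
        for l, sense in enumerate(SENSES[tokens[j]]):
            for d in range(DIM):
                out[d] += alpha[l][j] * sense[d]
    return out


tokens = ["the", "ceo", "said"]
for i, word in enumerate(tokens):
    print(word, [round(x, 3) for x in backpack_representation(tokens, i)])
```

Because the weights for each sense sum to one, a sequence that repeats a single word simply reproduces the sum of that word's sense vectors; the interesting behavior comes from how the weights shift across positions.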

This advancement could have very positive implications for content generation and classification.

The Gender Bias Issue

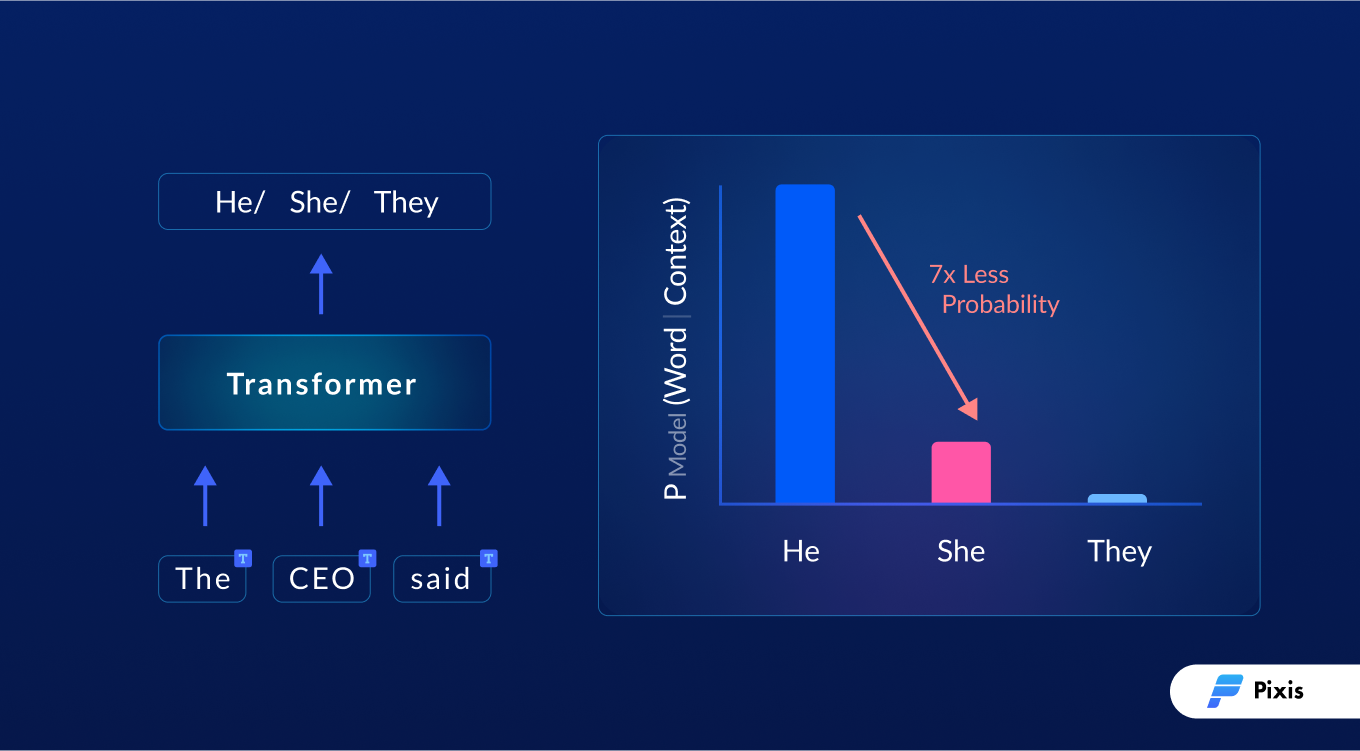

AI models learn from vast amounts of data, which can inadvertently reflect societal biases present in the training data. Gender bias often emerges when AI systems generate text that reinforces stereotypes or exhibits skewed representations. For instance, certain occupations may be associated more frequently with one gender in generated content. This is apparent in text models that assume the job title ‘CEO’ calls for the pronoun ‘he’ when writing ad copy or job descriptions for recruitment, and in text-to-image tools that favor images of men when asked to depict professions such as judge, doctor, politician, or lawyer.

This happens because the models were trained on data that was biased to begin with. When a model encounters the term ‘CEO’, it predicts the pronoun that follows using the probabilities it learned from that data, so a skew in the data becomes a skew in the output.
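A count-based toy makes this concrete. The sketch below, with a deliberately skewed and entirely hypothetical corpus, estimates how often each pronoun follows a noun. A trained language model learns its probabilities rather than counting them, but the effect is similar: a skew in the data becomes a skew in the predictions.

```python
from collections import Counter

# Hypothetical toy corpus, skewed on purpose; not real training data.
CORPUS = [
    "the ceo said he would resign",
    "the ceo said he was pleased",
    "the ceo thought he could win",
    "the ceo said she would stay",
    "the nurse said she was tired",
]
PRONOUNS = ("he", "she", "they")


def pronoun_distribution(corpus, noun):
    """Relative frequency of the first pronoun that follows `noun`.

    A count-based stand-in for what a trained model learns: the next
    pronoun gets the probability it had in the data.
    """
    counts = Counter()
    for sentence in corpus:
        words = sentence.split()
        if noun not in words:
            continue
        for w in words[words.index(noun) + 1:]:
            if w in PRONOUNS:
                counts[w] += 1
                break
    total = sum(counts.values())
    if total == 0:
        return {}
    return {p: counts[p] / total for p in PRONOUNS}


print(pronoun_distribution(CORPUS, "ceo"))  # {'he': 0.75, 'she': 0.25, 'they': 0.0}
```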

Such biases could, in principle, be removed with some finetuning, and that would be the end of the problem. However, the probability of each pronoun changes as the context of the phrase changes: “The CEO said” does not carry the same pronoun predictions as “The CEO thought”. The combinations of professions, nouns, and phrasings are therefore far too numerous to finetune one by one. Hence the need for a model with better contextual understanding.
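The scaling problem can be illustrated by conditioning on a short context instead of a single word. In this hypothetical toy, ‘ceo said’ and ‘ceo thought’ have their own pronoun counts, so rebalancing one context leaves the other untouched. The corpus, the two-word context window, and the helper names are assumptions made for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (not real data): "ceo said" and "ceo thought"
# are skewed by different amounts.
CORPUS = [
    "the ceo said he would resign",
    "the ceo said he was pleased",
    "the ceo said she would stay",
    "the ceo thought he could win",
    "the ceo thought he was right",
]
PRONOUNS = ("he", "she")


def context_tables(corpus):
    """Map each two-word context, e.g. ('ceo', 'said'), to counts of the
    pronoun that immediately follows it."""
    tables = defaultdict(Counter)
    for sentence in corpus:
        w = sentence.split()
        for a, b, nxt in zip(w, w[1:], w[2:]):
            if nxt in PRONOUNS:
                tables[(a, b)][nxt] += 1
    return tables


def p_he(tables, context):
    """P('he' | context) among the gendered pronouns in this toy table."""
    c = tables[context]
    return c["he"] / (c["he"] + c["she"])


tables = context_tables(CORPUS)
print(p_he(tables, ("ceo", "said")), p_he(tables, ("ceo", "thought")))

# A per-context patch: rebalance the "ceo said" counts only.
tables[("ceo", "said")] = Counter({"he": 1, "she": 1})
print(p_he(tables, ("ceo", "said")), p_he(tables, ("ceo", "thought")))
```

After the patch, P(‘he’ | ‘ceo said’) drops to 0.5 while P(‘he’ | ‘ceo thought’) stays at 1.0; every further verb, profession, and phrasing would need its own fix.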

Backpack Language Models For The Win

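Because a Backpack model's predictions are built from a weighted sum of sense vectors, the contribution of each sense vector to the output is easy to trace. This makes precise and predictable interventions possible across contexts: if one sense of a word like ‘CEO’ is found to carry gender associations, it can be scaled down, and the change applies in every context where the word appears, without finetuning on each phrase separately. In short, the Backpack model uses sense vectors to improve its understanding and performance across different situations, allowing for more nuanced and diverse text generation that refrains from making unwarranted gender associations.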
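Because the output is a weighted sum of sense vectors, scaling a single sense changes the logits in every context by a predictable amount. The toy below is a hypothetical sketch of that kind of intervention, not the exact procedure from the Backpack work: the two-dimensional vectors, the pronoun embeddings `E_HE` and `E_SHE`, the contextualization weights, and the choice of sense 0 of ‘ceo’ as the gendered one are all invented for illustration.

```python
# Hypothetical 2-dimensional toy: axis 0 stands for a "gender" direction,
# axis 1 for everything else. Real sense vectors are learned and much larger.
SENSES = {
    "the":     [[0.0, 0.1], [0.0, 0.1]],
    "ceo":     [[0.8, 0.0], [0.0, 1.0]],  # sense 0 carries the gender skew
    "said":    [[0.0, 0.3], [0.0, 0.2]],
    "thought": [[0.0, 0.2], [0.0, 0.4]],
}
# Hypothetical output embeddings for the two pronouns.
E_HE = [1.0, 0.5]
E_SHE = [-1.0, 0.5]

# Hypothetical contextualization weights: WEIGHTS[context][j][l] is the
# weight on sense l of the j-th token in the context.
WEIGHTS = {
    ("the", "ceo", "said"):    [[0.2, 0.2], [0.5, 0.5], [0.3, 0.3]],
    ("the", "ceo", "thought"): [[0.1, 0.1], [0.7, 0.6], [0.2, 0.3]],
}


def he_minus_she_logit(context, ceo_gender_scale=1.0):
    """Logit difference, i.e. log P(he) - log P(she) under a softmax.

    The output vector is a weighted sum of sense vectors; the intervention
    multiplies sense 0 of 'ceo' by ceo_gender_scale before summing.
    """
    out = [0.0, 0.0]
    for token, row in zip(context, WEIGHTS[context]):
        for l, (alpha, sense) in enumerate(zip(row, SENSES[token])):
            scale = ceo_gender_scale if (token == "ceo" and l == 0) else 1.0
            for d in range(2):
                out[d] += alpha * scale * sense[d]
    return sum((h - s) * o for h, s, o in zip(E_HE, E_SHE, out))


for context in WEIGHTS:
    print(" ".join(context),
          round(he_minus_she_logit(context), 3),
          round(he_minus_she_logit(context, ceo_gender_scale=0.0), 3))
```

Setting `ceo_gender_scale=0.0` drives the he/she logit difference to zero in both contexts, because in this toy no other sense has a component along the gender axis. In a real model, other senses can still carry some gender signal, so the effect would typically be a reduction rather than an exact removal.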

Why Backpack Language Models Are A Big Deal

Embracing Backpack Language Models will allow us to craft more gender-neutral content that cuts through the noise, resonating with the target audience in profound and impactful ways. By also training models on datasets curated to be more gender-neutral, we can further reduce the potential for biased content generation.
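One common way to move a training set toward gender balance is counterfactual data augmentation: every sentence is duplicated with its gendered words swapped. The sketch below is a minimal version under strong assumptions (lowercase text, whitespace tokenization, and a tiny hypothetical swap list). It is a separate technique from Backpack sense vectors, shown here only to illustrate the dataset side of the argument.

```python
# Hypothetical, minimal swap list; practical word lists are far larger and
# must resolve ambiguous words such as "her" (-> "him" or "his").
SWAP = {
    "he": "she", "she": "he",
    "himself": "herself", "herself": "himself",
    "man": "woman", "woman": "man",
}


def gender_swap(sentence):
    """Swap gendered words in a lowercase, whitespace-tokenized sentence."""
    return " ".join(SWAP.get(word, word) for word in sentence.split())


def augment(corpus):
    """Return the corpus plus a gender-swapped copy of every sentence."""
    return corpus + [gender_swap(s) for s in corpus]


corpus = ["the ceo said he would resign", "the ceo thought he could win"]
for sentence in augment(corpus):
    print(sentence)
```

After augmentation, every gendered word in the swap list appears as often as its counterpart, so simple co-occurrence statistics such as "pronoun after ‘ceo’" become balanced.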

Though this promising solution to the gender bias problem in AI language models seems most valuable in content generation, it could make progressive strides in several other business functions as well. For one, AI-assisted recruitment platforms can reduce the risk of skewed results that filter out candidates based on gender for different job roles. E-commerce platforms can base product recommendations on relevant metrics rather than on gender. Even data analysis tools could be finetuned to stop favoring male responses, as some have done in the past.

This could prove to be a significant milestone in making language processing and generative models more accurate and objective.